Note: This is a syndicated post. If you'd like to, you can view the original on my dev blog.

I remember reading a short story in high school about an aspiring writer who invested a great deal of time and money in procuring a fancy pen and an ornately bound notebook, purchasing a desk only a serious writer would sit at, furnishing his study with shelves full of old books he'd never read, and daydreaming about the prospect of writing. What was missing from this picture was any ink touching paper—he did anything but actually write.

The same thing often happens with beginners who want to get into software development—they install an assortment of IDEs and tools whose purpose they don't really understand, scour through listicles on the best programming languages for beginners to learn, and generally spend most of their time contemplating programming and trying to come up with ideas for projects instead of... well, programming.

Pick a Language, Any Language

Instead of wasting your time comparing different languages, just pick one and start coding. It really doesn't matter. Learn Java. Learn Python. Learn PHP, JavaScript, Go, Scratch, or any language you want. Anything will do when you're a beginner as long as you actually start somewhere. What you shouldn't do is waste time debating the merits and drawbacks of various languages when you don't even have experience yet—that's what you really need, after all; the language is just a means to an end.

YouTuber Michael Reeves puts it well in one of his videos:

Just pick one and start learning programming... All modern programming languages are wildly powerful, and they can do all kinds of shit.

I recall my programming fundamentals teacher imparting some very wise words in the second semester of my undergraduate studies when he heard me complain about us learning C++, which at the time was completely foreign to me and came with a boatload of frustrations. He said that the degree wasn't intended to teach us how to program in any particular language, and that languages are merely tools for doing a programmer's job. The hope was that we'd be able to pick up any language on demand after graduating from the program. Our goal was to learn how to think like computer scientists instead of confining ourselves to the narrow context of any particular language.

Now, there is a caveat worth mentioning: The language you pick as a beginner will shape your initial perception of software development and can influence whether you end up enjoying coding or walk away disappointed.

There's a reason why many university courses expose beginners to Java, C++, or, in the case of MIT, Python: These three languages are very popular and span a number of software development domains, making them practical and useful to know. They're also general-purpose languages, so what beginners learn with them carries over to many kinds of projects.

Here's my advice: Don't pick a single language and refuse to part ways with it. The most obvious reason is that not every language is suitable for every problem. Beyond that, limiting your worldview to a single language or paradigm can seriously stunt your growth as a developer.

Instead, I recommend using your favorite language to learn programming fundamentals, algorithms, and data structures, and then gradually stepping out of your comfort zone. All of those things are language-agnostic and easily transferable. Once you master them, you'll find that learning a new programming language is largely a matter of getting used to a new syntax (and maybe a new way of thinking, like going from an OOP language to a functional one).

Stop Wasting Money on Courses You'll Never Finish

Udemy, Udacity, Lynda, Vertabelo Academy, Codecademy Pro, et al. What do these things have in common? They're online platforms that target beginners who feel overwhelmed, or disillusioned working professionals looking to make a career change as quickly as possible. These platforms are everywhere, but I consider them detrimental to learning how to actually code because they rarely provide material of higher quality than the free content that's readily available online.

The biggest problem with these courses is the constant hand-holding, which does you a disservice. Doing your own research, making mistakes, breaking your code, and learning how to debug are all incredibly valuable experiences that will stick with you forever and ultimately make you a better programmer. Simply copying what an instructor does may help you develop muscle memory, but it won't give you the deep understanding that only first-hand experience can bring.

Have you ever noticed how Udemy's courses are always on sale? Or that these platforms offer hints on practically every problem, that the instructors outright tell you how to do X, or that they even run a Google search right there in the video instead of encouraging students to research these things for themselves? These are all red flags.

Many of these courses involve passive learning, even if they encourage you to type along and do things on your own machine as you watch them done on the instructor’s end. Why? Because you’re not actually discovering problems yourself and then searching for ways to solve those problems. That’s how people learn—through experience, curiosity, and failure.

With many of these platforms, there’s a thin veil of active learning. In reality, you’re doing one of three things:

- Retyping everything the instructor wrote, line by line.

- Straight up copy-pasting their code or cloning a repo from their GitHub.

- Not even coding—just listening and hoping it all sticks (spoiler: it won't).

Then, a week later, you find yourself having to revisit a past exercise (or even the associated video) to recall how to do something. If that's the case, then you didn’t really learn it.

Reality: Developers have to constantly search for things online, sifting through Stack Overflow posts, Reddit, YouTube tutorials, articles, and—dare I say it?—official documentation to find answers to their questions. But why bother to seek out these answers on your own when you can pay for an instructor to tell you how it’s done? That makes you feel all nice and safe inside.

In a similar vein, these platforms also shield you from the somewhat tricky (yet valuable) process of setting up a technology on your own machine. Instead, they offer you a nice little sandbox environment where you can code to your heart's content without ever understanding what happens between writing your code and the computer actually running it. If you plan on seriously investing your time in learning and using a technology in the real world, why not take the time to understand how you can use it on your own computer?

I'll admit: I do understand the appeal of a sandbox environment. It allows you to focus on the important thing—learning the language—without getting bogged down in any of the impediments. Med students don't practice surgery on real patients, after all. But you also can't expect to operate on cadavers forever.

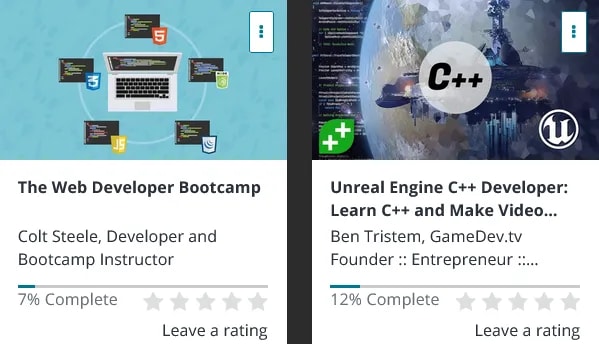

Here are two Udemy course I once purchased thinking that they were the key to achieving whatever goals I had at the time (web development and game development, apparently):

I quit about 10% of the way through both of them because I was bored out of my mind. I am not the type of person who can tolerate sitting through lecture after lecture, followed by intermittent "now you try it" exercises that are mostly trivial. I need context for anything I do: a genuinely relevant, interesting, and challenging project that I want to work on. To-do list apps don't cut it for me because they're not something I actually want to build. Making games your way isn't what I want to do when I'm learning game dev.

For example, I learned just about everything I know about HTML, CSS, and (some) JavaScript by making my personal website—having a vision for what I wanted and then researching all the necessary parts to bring it to life. I learned both web development and game development on my own time, on my own terms.

Going into the Artificial Intelligence for Games class at the University of Florida, I had absolutely zero knowledge of the Unity game engine or C#, the main technologies we'd be using. How did I learn them in such a short period of time? By working on a game that I came up with and that actually interested me. By Googling, reading tutorials, and going through the painstaking process of trying to make something work and hitting roadblocks along the way. And by working with other developers, something that online courses will rarely expose you to.

What do you want to make? Figure that out, and just start Googling. If you decide that taking a course is the right path for you, at least don't waste your money on purchasing one—there are plenty of free resources online to get you where you need to go.

Learn to Code by Reading Code

Becoming comfortable with reading other people's code is an essential skill for any developer. Much like how reading books can help you become a better writer by exposing you to different literary styles, techniques, genres, and overarching themes, reading code can make you a better programmer by exposing you to different solutions, architectures, design patterns, and language paradigms.

I distinctly remember my fear of reading other people's code when I only had about a year of experience with programming. You're not familiar with the code, so everything naturally seems foreign to you, and you become frustrated and question why the author used certain naming conventions or design patterns. If you feel like this, you may be diving head-first into a complex project instead of dipping your toes in the water.

Here are my recommendations for how to improve your code reading skills:

Join a site like Code Review on the Stack Exchange network. Beginners (and even advanced programmers) often post their code here for more experienced developers to review. This is an incredibly valuable resource for a budding programmer. I recommend that you read an original poster's code in its entirety, as slowly as you need to. Then, review the responses to see what kinds of feedback other developers provided. Understanding why they made those recommendations can help you avoid making similar mistakes in your own code. If you need to, you can compile a list of common errors that you see and the corresponding recommendations that are provided. With time, though, you shouldn't need such a reference—it will all become second nature through repeated exposure to the same kinds of problems.

Read tutorials. But with a caveat: Never ever follow just a single tutorial blindly, copy-pasting things without understanding what the code is really doing. Instead, open a few (2–3) tutorials on a single topic and review them thoroughly. This is good for two reasons: 1) You're less likely to be misled by inexperienced developers who are actually imparting bad practices, and 2) if there are any discrepancies between the tutorials, you can research those differences to gain a better understanding of why certain decisions were made in one snippet of code but not in another.

I found the second tip to be especially useful when learning CSS from scratch. Initially, I was quite afraid to read other people's stylesheets because I never really understood what their rules were doing. So what did I do? I read W3Schools, MDN, CSS-Tricks, and other sites to find multiple ways to do the same thing on the front end. Then, for each line of CSS that was presented to me, I would enter the code locally, refresh my browser, and make a mental note of the visual change that occurred. If something was unclear, I would stop right then and Google that particular CSS property to see what exactly it does. All of this involved reading, and reading, and... more reading. It never stops, and it's an important skill to develop if you're serious about dev, regardless of what specialization you choose to pursue.

Get Comfortable with Setting Up a Dev Environment

When it comes to working on large code bases, especially ones that are not your own, it's important that you know how to set up your local dev environment correctly, with all the right tools and dependencies. If you're new to this, consider the following exercise: Find an interesting project using GitHub's Explore section and clone it onto your own machine. Then, follow whatever directions they have in their README to set up your dev environment and successfully build the project locally.

There's immense value to be found in this exercise because it's something that all developers need to know how to do on the job. If you run into any problems, do a bit of digging around online; it's likely that others have encountered the same problem before and reported it on GitHub, Stack Overflow, or Reddit. If you have absolutely no luck, consider opening an issue in the repo asking for help.

A big part of this is learning to ease your way into a terminal environment and to rely less on traditional GUIs. In particular, knowing how to work with the Bash shell and its most popular commands will make your life a whole lot easier as a developer. If you want the best of both worlds, consider using Visual Studio Code as your IDE: It's free, has an integrated terminal, features a marketplace of free extensions, and integrates smoothly with version control software like Git. All of these are things you'll eventually need to use as a programmer.

A Note on Git

Speaking of version control, if you're feeling lost with Git, then know this: There's no better way to "study" or learn Git than to actually use it for a real project. There are tons of online tutorials and videos that claim to help you understand Git in as little as 15 minutes, but they all ironically miss the key point: Git is an applied technology that's difficult to understand or appreciate when you study it in isolation. Things may appear to click when you watch a video, but unless you use Git yourself, you'll forget it the next day.

You absolutely need to associate Git with a hands-on project—ideally one that involves multiple collaborators—to understand why the feature branch workflow is the commonly accepted standard, or why you should never rebase public branches. This will also help you to get comfortable with stashing your work, switching branches, amending commits, and checking out specific commits. These are all things that used to scare me when I had little to no experience with Git, but once you master them, you'll appreciate why they're so important.

I also highly recommend that you avoid using a GUI client for Git, as it can keep you from mastering and remembering common Git commands. The command line is your friend, not your enemy. If you struggle to remember what a certain command does, or how to achieve something in Git, feel free to reference Atlassian's Git tutorials; they're very well written. You'll also find many excellent answers to common Git questions on Stack Overflow.

Know that LeetCode Is Not the Answer

Remember the SATs and ACTs? Most people would rather not. It's a time when you're cramming material and vocabulary that you'll likely never need to use in your future, just for the sake of validating your intelligence so you can get into college. I hated the entire process and only appreciated one or two math tricks I learned along the way.

LeetCode is the same. There's this pervasive (and arguably harmful) practice in tech hiring where a programmer's aptitude is measured by their ability to solve coding puzzles on a whiteboard, and it has spawned a number of platforms like LeetCode, HackerRank, and others dedicated to helping developers master the art of solving obscure puzzles. I've personally been fortunate enough not to have to go through this ordeal in my interviews, and I hope you don't either.

Have people told you that you should cram LeetCode if you want to get a good job in tech? Stop listening to them. That may be true if you're aiming for elite unicorns, but it isn't for the majority of developer positions. The best thing you can do is to work on personal projects and gain a deeper understanding of the technologies you want to work with in the future, as well as a solid grasp of CS fundamentals (which will almost certainly come up during an interview). If you find yourself memorizing things, then you're going about this the wrong way.

With all that said, I will admit that there are some rather interesting LeetCode problems that actually get you thinking and test your understanding of fundamental CS data structures. Here are my personal favorites:

- Reversing a linked list

- Merging two sorted linked lists

- Implementing a prefix tree from scratch

- Validating stack sequences

- Map sum pairs (prefix trees)
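To give a sense of what these problems involve, here is a minimal Python sketch of three of them: reversing a linked list, validating stack sequences, and a basic prefix tree (the same structure the map sum pairs problem builds on). The names `ListNode`, `reverse_list`, `validate_stack_sequences`, and `Trie`, along with the `from_list`/`to_list` helpers, are illustrative choices for this sketch, not LeetCode's exact signatures or canonical solutions.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class ListNode:
    """A node in a singly linked list."""
    val: int
    next: Optional[ListNode] = None


def from_list(values: list[int]) -> Optional[ListNode]:
    """Build a linked list from a Python list (illustrative test helper)."""
    head: Optional[ListNode] = None
    for v in reversed(values):
        head = ListNode(v, head)
    return head


def to_list(head: Optional[ListNode]) -> list[int]:
    """Collect a linked list's values into a Python list (illustrative test helper)."""
    out: list[int] = []
    while head is not None:
        out.append(head.val)
        head = head.next
    return out


def reverse_list(head: Optional[ListNode]) -> Optional[ListNode]:
    """Reverse a linked list in place by re-pointing each node's `next`."""
    prev: Optional[ListNode] = None
    while head is not None:
        nxt = head.next   # remember the rest of the list
        head.next = prev  # flip this node's pointer backwards
        prev = head
        head = nxt
    return prev


def validate_stack_sequences(pushed: list[int], popped: list[int]) -> bool:
    """Check whether `popped` could result from pushing `pushed`, in order, onto a stack."""
    stack: list[int] = []
    i = 0  # index of the next value we expect to pop
    for x in pushed:
        stack.append(x)
        # Pop greedily while the top of the stack matches the next expected value.
        while stack and i < len(popped) and stack[-1] == popped[i]:
            stack.pop()
            i += 1
    return not stack and i == len(popped)


class Trie:
    """A minimal prefix tree with one child node per character."""

    def __init__(self) -> None:
        self.children: dict[str, Trie] = {}
        self.is_word = False  # True if some inserted word ends at this node

    def insert(self, word: str) -> None:
        node = self
        for ch in word:
            if ch not in node.children:
                node.children[ch] = Trie()
            node = node.children[ch]
        node.is_word = True

    def _walk(self, s: str) -> Optional[Trie]:
        """Follow `s` from this node; return the last node reached, or None if the path breaks."""
        node = self
        for ch in s:
            nxt = node.children.get(ch)
            if nxt is None:
                return None
            node = nxt
        return node

    def search(self, word: str) -> bool:
        node = self._walk(word)
        return node is not None and node.is_word

    def starts_with(self, prefix: str) -> bool:
        return self._walk(prefix) is not None
```

The stack check simply replays the pushes and pops greedily whenever the top of the stack matches the next expected value; if anything is left over, the pop order was impossible. In the trie, `search` and `starts_with` share the same walk, and the only difference is whether a word must end at the final node.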

So, if you're curious about solving these problems, do give them a shot. But don't spend your time exclusively practicing LeetCode thinking that it will somehow make you a better programmer (or a more qualified candidate for whatever position you're seeking). It's a very minor part of the overall picture.

Stop Beating Yourself Up

Failure sucks. A lot. But you need to get used to the frustration that programming often brings, because it's inevitable.

This is where online programming courses tend to fall short: Once the training wheels come off and you’re confronted with an unfamiliar problem, panic sets in. Up until then, every problem you'd encountered came with a ready-made solution: an instructor who would happily tell you exactly how to do something.

And then what happens? People turn to Quora, Reddit, or whatever other forums they frequent to vent and seek reassurance, to be told that coding isn’t that difficult and that they're not stupid and that they'll get better with time.

The truth? You’re not stupid. Learning to code is a lot like learning to speak a new language: There's a rough transition period at the start where you're mostly relying on rote memorization and repetition, and few things make intuitive sense. But eventually there comes that moment of enlightenment where you look back and realize what you were doing this entire time. It does eventually click and become second nature.

Make no mistake, though: The process of getting there won't be easy. And once you do get there, you'll realize that you're not a programming wizard (is anyone?) and that you still have many knowledge gaps. Unfortunately, many people are misled into believing that programming is somehow easier to pick up than other well-paying skills, or that spending money on courses will guarantee their success. In reality, becoming a good developer requires years of practice, self-motivation, and hard work.

And, of course, you never stop learning.