Once the hard work of packaging your services in the container format is complete and you've successfully deployed them to a Kubernetes cluster, you still have the task of exposing those services beyond the cluster for external consumption. In this post, let's cover the ingress mechanism that Kubernetes and its community provide.

Overview

Services running in a Kubernetes cluster are, by default, isolated from any potential clients that exist outside the cluster. This is great for services that don't need to be exposed beyond the edge of the cluster, but many services are designed to be consumed by external clients. So, given what we know about the makeup of services running in the cluster, how can we make them consumable by external clients? Can we use the Kubernetes Service API for it?

The limitations of the Kubernetes Service

The Service API can handle ingress for services running in a Kubernetes cluster, but it has some limitations.

Firstly, if our cluster is deployed on-premises, we will probably be forced to expose Service objects using the NodePort type, since there is no cloud provider to provision a load balancer for us. If we want to load balance service requests over the cluster's nodes, we'd need to manually configure an external load balancer and cope with the operational overhead of node failures and IP address changes. We might also have to endure some additional latency from the extra network hops: traffic that arrives at a node is handled by the Service's kube-proxy rules before being forwarded to a Pod that may be running on a different node.

In addition, client source IP addresses are lost along the way unless the Service's external traffic policy is set to Local. That might work for us, but it removes one of the benefits of the Service API implementation, which is load balancing ingress traffic across all of the service's endpoints: with the Local policy, each node forwards traffic only to the endpoints running on that node.
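To make the trade-off concrete, here is a minimal sketch that builds a NodePort Service manifest as a Python dictionary and prints it as JSON, a format that `kubectl apply -f` accepts as well as YAML. The Service name `web`, the `app: web` selector and all port numbers are hypothetical placeholders, and the range check assumes the cluster uses the default NodePort range.

```python
import json


def nodeport_service(name: str, app_label: str, port: int, target_port: int,
                     node_port: int, preserve_client_ip: bool) -> dict:
    """Build a v1 Service manifest of type NodePort.

    All names and ports passed in are hypothetical placeholders.
    """
    # The default NodePort range on a stock cluster is 30000-32767.
    if not 30000 <= node_port <= 32767:
        raise ValueError(f"nodePort {node_port} is outside the default range")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": {"app": app_label},
            "ports": [{
                "protocol": "TCP",
                "port": port,
                "targetPort": target_port,
                "nodePort": node_port,
            }],
            # "Local" keeps the client's source IP, but each node only forwards
            # to endpoints running on that node. "Cluster" (the default) spreads
            # traffic across all endpoints but hides the client IP behind SNAT.
            "externalTrafficPolicy": "Local" if preserve_client_ip else "Cluster",
        },
    }


if __name__ == "__main__":
    manifest = nodeport_service("web", "web", port=80, target_port=8080,
                                node_port=30080, preserve_client_ip=True)
    print(json.dumps(manifest, indent=2))
```

Saved to a file such as `service.json`, the output could be applied with `kubectl apply -f service.json`. Flipping `preserve_client_ip` is the whole trade-off in one field: client IPs on one side, cluster-wide load balancing on the other.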

If you use the LoadBalancer type in a public cloud setting, a cloud provider load balancer is created for each Service. This may be acceptable in some circumstances, but if you have lots of services, the ongoing costs could increase substantially.

Finally, the Service API has a fairly limited scope of function when it comes to traffic routing. It operates at layer 4 (the transport layer) of the OSI model, routing on IP addresses and ports. But what if we want to route traffic based on layer 7 (application layer) attributes, such as the HTTP host or the URL path? In other words, what if we want to implement host- or path-based routing? This is not possible with the Service API. For these reasons, Kubernetes has an Ingress API that specifically caters to HTTP-based ingress.
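A layer 4 Service sees only addresses and ports, whereas layer 7 routing inspects the HTTP request itself. The sketch below is a simplified, hypothetical model (not any controller's actual code) of host- and path-based routing: it picks a backend from the request's Host header and URL path, using longest-prefix matching on whole path segments, which is how the Ingress `Prefix` path type is documented to behave. The hostnames and service names are placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Route:
    host: Optional[str]   # None means "any host"
    path_prefix: str      # interpreted like Ingress pathType: Prefix
    service: str          # hypothetical backend Service name


def _segments(path: str) -> list[str]:
    # "/api/v1/" -> ["api", "v1"]; empty elements from repeated or trailing
    # slashes are ignored.
    return [s for s in path.split("/") if s]


def prefix_matches(prefix: str, path: str) -> bool:
    """Element-wise prefix match: "/api" matches "/api/v1" but not "/apis"."""
    p = _segments(prefix)
    return _segments(path)[:len(p)] == p


def _rank(rt: Route) -> tuple[int, bool]:
    # Longest matching prefix wins; on a tie, a host-specific rule beats a
    # catch-all one (a simplification for this sketch).
    return (len(_segments(rt.path_prefix)), rt.host is not None)


def route(routes: list[Route], host: str, path: str) -> Optional[str]:
    """Return the backend service for a request, or None if nothing matches."""
    matches = [rt for rt in routes
               if (rt.host is None or rt.host == host)
               and prefix_matches(rt.path_prefix, path)]
    if not matches:
        return None
    return max(matches, key=_rank).service


if __name__ == "__main__":
    table = [
        Route("shop.example.com", "/", "storefront"),
        Route("shop.example.com", "/api", "shop-api"),
        Route(None, "/healthz", "health-checker"),
    ]
    print(route(table, "shop.example.com", "/api/orders"))  # shop-api
    print(route(table, "shop.example.com", "/apis"))        # storefront
    print(route(table, "other.example.com", "/healthz"))    # health-checker
    print(route(table, "other.example.com", "/"))           # None
```

Nothing in this decision is visible at layer 4: two requests to the same IP and port end up at different services purely because of their Host header and path.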

Limitations of the Service API:

- Manual configuration of an external load balancer when using the NodePort type.

- Potential latency due to the extra network hops introduced by kube-proxy.

- A load balancer per service can quickly escalate operational costs.

- The Service API cannot cater to advanced ingress traffic patterns.

What does Ingress mean?

If you look up the dictionary definition of ingress, it says something like this: the act of going in or entering, or the right to enter.

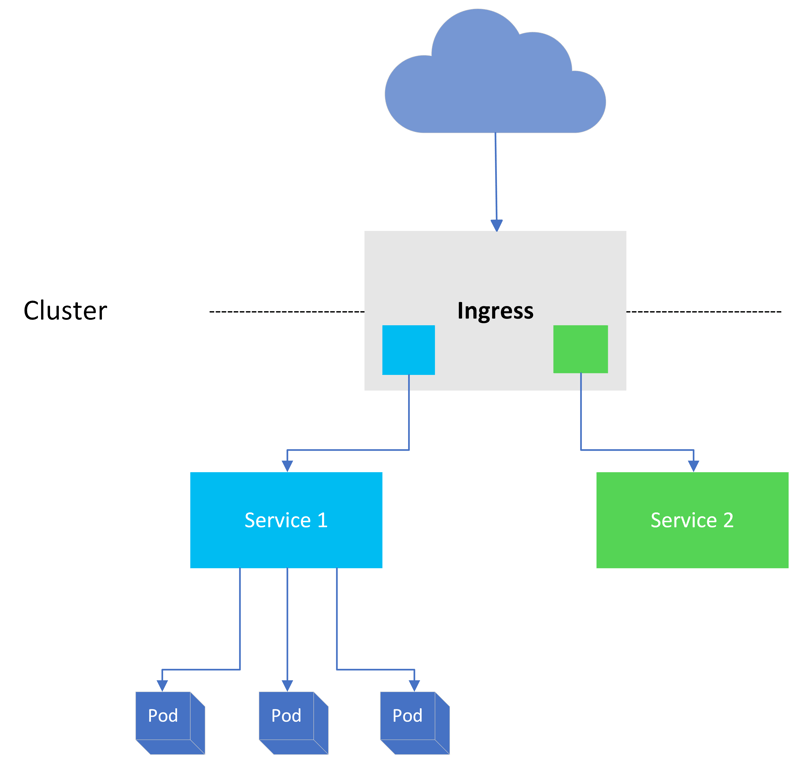

Now that's a generalized definition, so what does it mean in the context of a Kubernetes cluster? Building on the dictionary definition, we can say that ingress is about enabling traffic. More specifically, it's about opening a Kubernetes cluster up to receive external application client traffic. But that's not all. It's also about traffic routing. It's one thing to allow traffic into the cluster, but it needs to get to its intended destination, and ingress is about defining the routes that the traffic should take to get to the appropriate backend service.

And we're not done answering our question yet, because ingress traffic also needs to be routed reliably. Containers are ephemeral by nature, and Kubernetes is constantly trying to drive the cluster's actual state towards the desired state. This means that a client might be communicating with a pod running on one cluster node one minute and with an entirely different pod on another node the next. Ensuring that a client receives a consistent experience relies on Kubernetes ingress capabilities to provide a reliable and secure communication channel. Putting it all together, we can summarize Ingress's core responsibilities as follows:

- Enabling traffic (Opening the cluster to receive external traffic).

- Traffic routing (Defining traffic routes to backend services).

- Traffic reliability (Ensuring reliable, secure communication).

Ingress API Object

Ingress is a Kubernetes API object that manages the routing of external traffic to the services running in a cluster. It is one of the core resource definitions in the Kubernetes API and is primarily concerned with layer 7 traffic, specifically HTTP and HTTPS. Just as with a Service object, we define the characteristics of an Ingress in a manifest. Once the manifest has been applied to the cluster via the API server, you'd expect Kubernetes to create the object and then act on its definition using a controller, which moves the actual state towards the desired state.
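As a sketch of what such a manifest can look like, the snippet below builds a `networking.k8s.io/v1` Ingress as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The host `shop.example.com`, the service names and ports, and the `nginx` ingress class name are all hypothetical; the class name in particular depends on which controller is installed in the cluster.

```python
import json


def path_rule(path: str, service: str, port: int) -> dict:
    # One HTTP path rule; pathType "Prefix" matches on whole path segments.
    return {
        "path": path,
        "pathType": "Prefix",
        "backend": {"service": {"name": service, "port": {"number": port}}},
    }


def ingress_manifest(name: str, host: str, ingress_class: str,
                     paths: list[tuple[str, str, int]]) -> dict:
    """Build an Ingress routing (path, service, port) tuples for one host."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Selects which installed controller should act on this object.
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {"paths": [path_rule(p, s, n) for p, s, n in paths]},
            }],
        },
    }


if __name__ == "__main__":
    manifest = ingress_manifest(
        "shop", "shop.example.com", "nginx",
        [("/api", "shop-api", 8080), ("/", "storefront", 80)],
    )
    print(json.dumps(manifest, indent=2))
```

Until an ingress controller is running in the cluster, applying this object has no visible effect: the API server stores it, but nothing acts on it.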

Kubernetes does not, however, ship an in-tree controller that acts on Ingress objects, and this is by design. Instead, it relies on a cluster administrator to deploy a third-party Ingress controller to the cluster, such as:

- Apache APISIX ingress controller, which is based on Apache APISIX.

- Kubernetes NGINX Ingress Controller.

- AKS Application Gateway Ingress Controller is an ingress controller that configures the Azure Application Gateway.

- Ambassador API Gateway is an Envoy-based ingress controller.

- And more.

Ingress - a Kubernetes API object that manages the routing of external HTTP/S traffic to services running in a cluster.

Need for Third-party Controllers

Kubernetes relies on third-party ingress controllers for a few reasons. Ingress controllers need to implement the Kubernetes controller pattern, but they also need to route external traffic to cluster workloads, which is essentially a reverse proxy function. While it would have been possible for the Kubernetes community to build one from scratch, plenty of excellent tools that serve this purpose already exist. In fact, it may have been tempting to choose the best-of-breed proxy, package it up as a controller, and deploy it as the de facto ingress implementation.
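To illustrate the two halves of that job, here is a toy, in-memory sketch (hypothetical, not modeled on any specific controller) of a single reconcile pass: it reads the routes declared by Ingress-like objects, compares them with the routes a reverse proxy currently has, and applies only the differences. A real controller would watch the API server and reconfigure a proxy such as NGINX or Envoy; here the simplified object shape and the `ProxyConfig` class are stand-ins invented for illustration.

```python
from dataclasses import dataclass, field

RouteKey = tuple[str, str]  # (host, path prefix)


@dataclass
class ProxyConfig:
    """Hypothetical stand-in for a reverse proxy's live routing table."""
    routes: dict[RouteKey, str] = field(default_factory=dict)

    def upsert(self, key: RouteKey, backend: str) -> None:
        self.routes[key] = backend

    def delete(self, key: RouteKey) -> None:
        del self.routes[key]


def desired_routes(ingresses: list[dict]) -> dict[RouteKey, str]:
    """Flatten Ingress-like objects (simplified shape) into one routing table."""
    table: dict[RouteKey, str] = {}
    for ing in ingresses:
        for rule in ing["rules"]:
            for path, backend in rule["paths"].items():
                table[(rule["host"], path)] = backend
    return table


def reconcile(ingresses: list[dict], proxy: ProxyConfig) -> list[str]:
    """Move the proxy's actual state toward the declared state; return actions."""
    desired = desired_routes(ingresses)
    actions: list[str] = []
    for key, backend in desired.items():
        if proxy.routes.get(key) != backend:
            proxy.upsert(key, backend)
            actions.append(f"upsert {key[0]}{key[1]} -> {backend}")
    # Copy the keys first so we can delete while iterating.
    for key in list(proxy.routes):
        if key not in desired:
            proxy.delete(key)
            actions.append(f"delete {key[0]}{key[1]}")
    return actions


if __name__ == "__main__":
    proxy = ProxyConfig()
    state = [{"rules": [{"host": "shop.example.com",
                         "paths": {"/": "storefront:80", "/api": "shop-api:8080"}}]}]
    print(reconcile(state, proxy))  # two upserts
    print(reconcile(state, proxy))  # [] because the proxy has already converged
    state[0]["rules"][0]["paths"].pop("/api")
    print(reconcile(state, proxy))  # ['delete shop.example.com/api']
```

Comparing the full desired state with the actual state on each pass, rather than replaying individual change events, means a pass that runs twice does no extra work, and a missed update is corrected the next time the loop runs.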

The problem with that is that people's views on what's best of breed will differ. By providing choice, Kubernetes leaves it to the cluster administrator to determine which controller best serves their needs. And, of course, giving the community the ability to package their own ingress controllers lets public cloud providers and other vendors build the logic that is optimal for their own infrastructure.

To generalize, here are the main reasons why we need third-party ingress controllers:

- Part of the ingress controller's function is to act as a reverse proxy for cluster workloads.

- "Best of breed" is subjective; instead, we have the ability to choose a solution that works for us.

- Infrastructure providers can create ingress controllers optimized for their environments.

Summary

In this post, we discussed the Kubernetes Ingress concept and its core responsibilities: enabling traffic, traffic routing, and traffic reliability. We also looked at the limitations of the Kubernetes Service API and reviewed some reasons why third-party ingress controllers are preferable. While the Service API can satisfy simple ingress needs, more advanced requirements call for a more sophisticated mechanism. In the upcoming post, we will explore how to overcome some of the limitations we listed by using third-party ingress controllers.