These are notes from Chapter 12, "Effective Troubleshooting," of the book Site Reliability Engineering: How Google Runs Production Systems.

This post is part of a series. The previous post can be seen here:

“Be warned that being an expert is more than understanding how a system is supposed to work. Expertise is gained by investigating why a system doesn't work.” — Brian Redman

There’s little substitute for learning how the system is designed and built.

As Confucius once said: “I hear and I forget. I see and I remember. I do and I understand.”

Practice is essential. Go get 'em!

Formally, we can think of the troubleshooting process as an application of the hypothetico-deductive method: given a set of observations about a system and a theoretical basis for understanding system behavior, we iteratively hypothesize potential causes for the failure and try to test those hypotheses.
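To make the loop concrete, here is a minimal sketch of it in Python. Everything in it is my own illustration and not something from the book: the `Hypothesis` class, the `troubleshoot` function, and the `observations` dictionary are hypothetical names, and the two candidate causes are made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass
class Hypothesis:
    """A candidate cause plus a test that returns True if the evidence supports it."""
    description: str
    test: Callable[[], bool]


def troubleshoot(hypotheses: Iterable[Hypothesis]) -> Optional[Hypothesis]:
    """Test each hypothesis in order and return the first one the evidence supports."""
    for hypothesis in hypotheses:
        supported = hypothesis.test()
        status = "supported" if supported else "ruled out"
        print(f"{status}: {hypothesis.description}")
        if supported:
            return hypothesis
    # Nothing fit: gather more observations and come up with new hypotheses.
    return None


# Hypothetical observations collected while investigating an outage.
observations = {"db_port_open": True, "db_accepts_login": False}

candidates = [
    Hypothesis("network failure between app and database",
               lambda: not observations["db_port_open"]),
    Hypothesis("database refusing connections",
               lambda: not observations["db_accepts_login"]),
]

cause = troubleshoot(candidates)
```

In a real incident the tests would be checks against the live system, and a `None` result would send you back to collecting observations.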

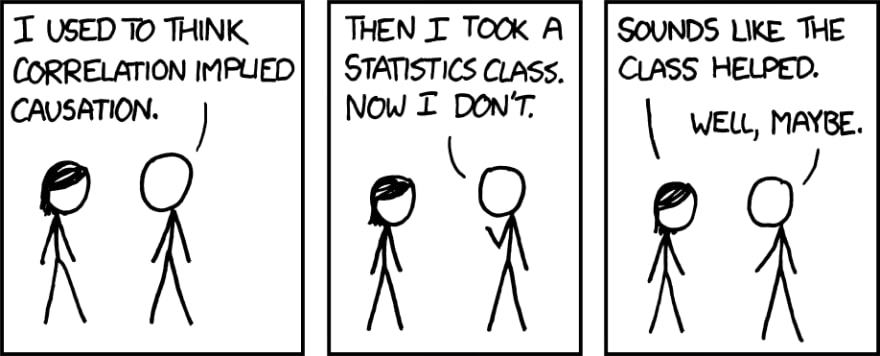

Finally, we should remember that correlation is not causation.

However…

As systems grow in size and complexity and as more metrics are monitored, it’s inevitable that there will be events that happen to correlate well with other events, purely by coincidence.
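A quick back-of-the-envelope calculation shows why this is inevitable. The sketch below counts how many pairs of metrics exist and how many of them we would expect to look correlated by chance alone. The 1% chance per pair is an assumption I picked for illustration, and so is treating the pairs as independent of each other.

```python
from math import comb


def expected_coincidental_pairs(num_metrics: int, chance_per_pair: float) -> float:
    """Expected number of unrelated metric pairs that look correlated by chance.

    Assumes every pair has the same, independent probability of a
    coincidental correlation (a simplification for illustration).
    """
    pairs = comb(num_metrics, 2)  # number of distinct metric pairs
    return pairs * chance_per_pair


for n in (10, 100, 1000):
    expected = expected_coincidental_pairs(n, 0.01)
    print(f"{n} metrics -> {comb(n, 2)} pairs -> ~{expected:.1f} coincidental correlations")
```

The number of pairs grows quadratically: 1,000 metrics give 499,500 pairs, so even a 1% chance per pair leaves roughly 5,000 correlations that mean nothing.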

Understanding failures in our reasoning process is the first step to avoiding them and becoming more effective in solving problems.

An effective report should tell you the expected behavior, the actual behavior, and, if possible, how to reproduce the behavior.
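If you collect reports through a form or a script, those three pieces can be made explicit fields. This is only a sketch of one possible shape: `ProblemReport` and its method names are hypothetical, and the example values are invented.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ProblemReport:
    """The three things an effective report should carry."""
    expected_behavior: str
    actual_behavior: str
    steps_to_reproduce: List[str] = field(default_factory=list)  # optional

    def missing_fields(self) -> List[str]:
        """Names of the required fields that were left empty."""
        missing = []
        if not self.expected_behavior.strip():
            missing.append("expected_behavior")
        if not self.actual_behavior.strip():
            missing.append("actual_behavior")
        return missing

    def is_reproducible(self) -> bool:
        """True if the reporter supplied at least one reproduction step."""
        return bool(self.steps_to_reproduce)


report = ProblemReport(
    expected_behavior="Search returns results within one second",
    actual_behavior="",
    steps_to_reproduce=["Open the search page", "Search for any term"],
)
print(report.missing_fields())
```

Rejecting or flagging a report with missing fields at submission time saves a round trip with the reporter later.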

Ask "what," "where," and "why"

A malfunctioning system is often still trying to do something—just not the thing you want it to be doing. Finding out what it’s doing, then asking why it’s doing that and where its resources are being used or where its output is going can help you understand how things have gone wrong.

In many respects, this is similar to the “Five Whys” technique introduced by Taiichi Ohno to understand the root causes of manufacturing errors.

Once you’ve come up with a short list of possible causes, it’s time to try to find which factor is at the root of the actual problem. Using the experimental method, we can try to rule in or rule out our hypotheses. For instance, suppose we think a problem is caused by either a network failure between an application logic server and a database server, or by the database refusing connections. Trying to connect to the database with the same credentials the application logic server uses can refute the second hypothesis, while pinging the database server may be able to refute the first, depending on network topology, firewall rules, and other factors. Following the code and trying to imitate the code flow, step-by-step, may point to exactly what’s going wrong.

If you performed active testing by changing a system—for instance by giving more resources to a process—making changes in a systematic and documented fashion will help you return the system to its pre-test setup, rather than running in an unknown hodge-podge configuration.

Nowadays, if your company has already adopted immutable infrastructure to manage its infrastructure and configuration, this advice may seem outdated if taken word for word.

Documenting your thought process and troubleshooting steps is still valuable to share later on with your teammates in the form of documentation or a runbook.
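For the cases where you do change a live system during testing, one way to keep those changes systematic and documented is to record every change together with how to undo it. The sketch below is a toy version of that idea: `Change`, `ChangeJournal`, and the `config` dictionary are hypothetical, and a real setup would touch actual system settings rather than a dict.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Change:
    """One test change, with a description and a way to undo it."""
    description: str
    apply: Callable[[], None]
    revert: Callable[[], None]


class ChangeJournal:
    """Applies changes and remembers them so they can be undone in reverse order."""

    def __init__(self) -> None:
        self._applied: List[Change] = []

    def apply(self, change: Change) -> None:
        change.apply()
        self._applied.append(change)
        print(f"applied: {change.description}")

    def rollback(self) -> None:
        # Undo the most recent change first to retrace our steps exactly.
        while self._applied:
            change = self._applied.pop()
            change.revert()
            print(f"reverted: {change.description}")


config = {"memory_mb": 512, "workers": 4}
journal = ChangeJournal()

journal.apply(Change("raise memory to 1024 MB",
                     lambda: config.update(memory_mb=1024),
                     lambda: config.update(memory_mb=512)))
journal.apply(Change("raise workers to 8",
                     lambda: config.update(workers=8),
                     lambda: config.update(workers=4)))

changed = dict(config)   # state while testing
journal.rollback()       # back to the pre-test setup
```

The printed log doubles as a record of the troubleshooting steps, which is a decent starting point for a runbook.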

Publish your results.

It’s tempting and common to avoid reporting negative results because it’s easy to perceive that the experiment "failed." Some experiments are doomed, and they tend to be caught by review. Many more experiments are simply unreported because people mistakenly believe that negative results are not progress.

Do your part by telling everyone about the designs, algorithms, and team workflows you’ve ruled out. Encourage your peers by recognizing that negative results are part of thoughtful risk taking and that every well-designed experiment has merit. Be skeptical of any design document, performance review, or essay that doesn’t mention failure. Such a document is potentially either too heavily filtered, or the author was not rigorous in his or her methods.

Adopting a systematic approach to troubleshooting—as opposed to relying on luck or experience—can help bound your services’ time to recovery, leading to a better experience for your users.


Photo by Kyle Glenn on Unsplash