This post will be a short one in my ongoing series about TinyML and IoT (check out my previous post and video demo here).

In a nutshell, you will learn how to run the TensorFlow Lite “Hello World” sample on an MXChip IoT developer kit. You can jump directly to the end of the post for a video tutorial.

You may have not realized, but unless you are looking at real-time object tracking, complex image processing (think CSI crazy image resolution enhancement, which turns out to be more real than you may have initially thought!), there is a lot that can be accomplished with fairly small neural networks, and therefore reasonable amounts of memory and compute power. Does this mean you can run neural networks on tiny microcontrollers? I certainly hope so since this is the whole point of this series!

TensorFlow on microcontrollers?

TensorFlow Lite is an open-source deep learning framework that enables on-device inference on a wide range of equipment, from mobile phones to the kind of microcontrollers that may be found in IoT solutions. It is, as the name suggests, a lightweight version of TensorFlow.

When TensorFlow typically positions itself has a rich framework for creating, training, and running potentially complex neural networks, TensorFlow Lite only focuses on inference. It aims at providing low latency, and small model/executable size, making it an ideal candidate for constrained devices.

In fact, there is even a version of TensorFlow Lite that is specifically targetted at microcontrollers, with a runtime footprint of just a couple of dozens of kilobytes on e.g. an Arm Cortex M3. Just like a regular TensorFlow runtime would rely on e.g. a GPU to train or evaluate a model faster, TensorFlow Lite for micro-controllers too might leverage hardware acceleration built into the microcontroller (ex. CMSIS-DSP on Arm chips, which provides a bunch of APIs for fast math, matrix operations, etc.).

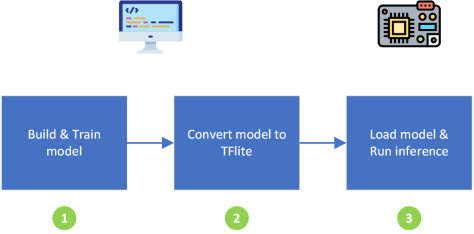

A simplified workflow for getting TensorFlow Lite to run inference using your own model would be as follows:

First, you need to build and train your model (❶). Note that TensorFlow is one of the many options you have for doing so, and nothing prevents you from using PyTorch, DeepLearning4j, etc. Then, the trained model needs to be convertedinto the TFlite format (❷) before you can use it (➌) in your embedded application. The first two steps typically happen on a “regular” computer while, of course, the end goal is that the third step is happening right on your embedded chip.

In practice, and as highlighted in the TensorFlow documentation, you will probably need to convert your TFlite model in the form of a C array to help with inclusion in your final binary, and you will, of course, need the TensorFlow Lite library for microcontrollers. Luckily for us, this library is made available in the form of an Arduino library so it should be pretty easy to get it to work with our MXChip AZ3166 devkit!

TensorFlow Lite on MXChip AZ3166?

I will let you watch the video below for a live demo/tutorial of how to actually run TensorFlow Lite on your MXChip devkit, using the Hello World example as a starting point.

Spoiler alert: it pretty much just works out of the box! The only issue you will encounter is described in this Github issue, hence while you will see me disabling the min() and max() macros in my Arduino sketch.

Live demo