AI/ML is a wildfire of a trend. It’s being integrated into just about every application you can think of. Compare that with other technical innovations of the past few years, like blockchain, where the infrastructure and tooling were built (and are still being built) in parallel with the search for a meaningful application.

AI/ML, on the other hand, has seemingly jumped straight to real-world applications, leaving tooling and infrastructure lagging far behind adoption. We’ve built a lot of tools for developing and tuning models, but moving models into production is relatively new, and the few MLOps tools that do exist were built before AI/ML became all the rage. At some point we have to stop and ask ourselves whether we’re building long-term solutions or just bootstrapping our way to production. In my opinion, we’re standing at a crossroads: specifically, a crossroads of DevOps and MLOps.

Like DevOps 10 years ago, MLOps is buzzy, and tech companies are racing to incorporate its practices into their workflows. However, the more I dig into it, the more I come to believe that MLOps is a momentary trend. I find it hard to believe that the future lies in further segmentation of XOps practices; I think it lies in a return to a unified approach under the well-established (and widely adopted) practices of DevOps.

The model handoff conundrum

A major stumbling block in the current AI/ML workflow is the model handoff process. Moving a model from a development stage (typically in a Jupyter Notebook) to an ML tool, then onto a development server, and finally to a production environment like Kubernetes is … let’s say challenging at best. Each of these transitions requires repackaging the model to suit the target environment.

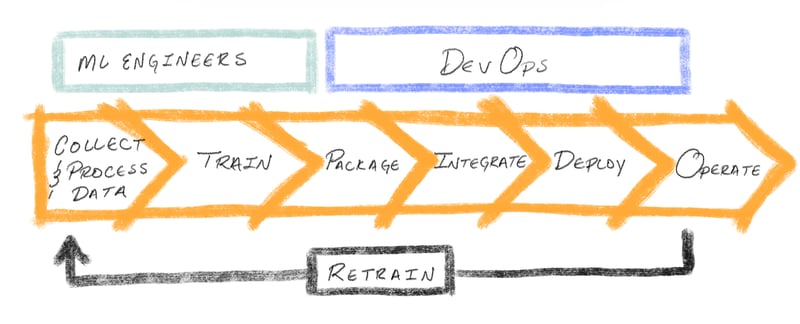

This is not just a pain for the team (usually DevOps) that owns everything to the right of training (see the diagram above); it also introduces significant risk and delay into the deployment cycle. As if that weren’t enough, the variety of packaging mechanisms, with no standardized procedure, amplifies these issues, turning what we’ve come to enjoy as a straightforward process into a real “pain in the ops.”

So we decided to do something about it.

We had a crazy idea: why not package models into a format that we’ve already standardized on?

Back in 2013, a little company called Docker made it really easy to start using containers to package up applications. A big key to the success of containers was the Open Container Initiative (OCI), an industry-wide initiative, which Docker helped launch in 2015, to set standards for how we package up our applications. Because of the OCI standards, we have hundreds (maybe thousands?) of tools that can be combined to manage and deploy applications. So why aren’t we using these standards to package up Notebooks and AI models as well? Doing so would make deploying, sharing, and managing our models easier for everyone involved.
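
To make the OCI idea a bit more concrete, here is a minimal, standard-library Python sketch of the structure the OCI image specification defines. Every blob (the config and each layer) is referenced by a descriptor recording its media type, sha256 digest, and size, and a manifest lists those descriptors. The function names are illustrative, the `b"{}"` config is a placeholder, and the sketch only builds the JSON structure; it does not talk to a registry.

```python
import hashlib
import json

# Media types defined by the OCI image specification.
MANIFEST_MEDIA_TYPE = "application/vnd.oci.image.manifest.v1+json"
CONFIG_MEDIA_TYPE = "application/vnd.oci.image.config.v1+json"
LAYER_MEDIA_TYPE = "application/vnd.oci.image.layer.v1.tar"


def descriptor(blob: bytes, media_type: str) -> dict:
    """Describe a blob by its content: media type, sha256 digest, and size in bytes."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def build_manifest(config: bytes, layers: list) -> dict:
    """Assemble a minimal OCI image manifest from a config blob and a list of layer blobs."""
    return {
        "schemaVersion": 2,
        "mediaType": MANIFEST_MEDIA_TYPE,
        "config": descriptor(config, CONFIG_MEDIA_TYPE),
        "layers": [descriptor(layer, LAYER_MEDIA_TYPE) for layer in layers],
    }


def manifest_digest(manifest: dict) -> str:
    """The manifest is content-addressed too: its digest is the sha256 of its bytes."""
    # Compact, key-sorted JSON is this sketch's own serialization choice;
    # a real registry hashes whatever exact bytes were pushed.
    raw = json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode()
    return "sha256:" + hashlib.sha256(raw).hexdigest()


if __name__ == "__main__":
    m = build_manifest(b"{}", [b"model weights", b"training data"])
    print(json.dumps(m, indent=2))
    print(manifest_digest(m))
```

Because every reference is a digest, any OCI-aware tool can verify and deduplicate content without knowing what is inside it, which is what makes reusing existing registries and tooling possible.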

From Notebook to OCI-standard file type

As I said earlier, we set out to do something about how we’re packaging up our models, and what we did was build Kit. Kit is an open source MLOps project that packages your model, datasets, code, and configuration so data scientists and developers can use their preferred tools while collaborating effortlessly.

Our goal is to standardize the packaging, reproduction, deployment, and tracking of AI/ML models; in short, to turn a Notebook into a deployable artifact. We want models to be managed with the same agility as application code. Kit streamlines the deployment process and ensures models can be executed universally with minimal friction. For example, when you hand a model to the DevOps team, it’s already good to go, so they can deploy it to production. Your tools have been standardized, and best of all, you didn’t have to learn how to write a Dockerfile or a Kubernetes manifest.

The Kit CLI packages up all of the assets and resources included in your Notebook project and turns them into a ModelKit. This makes handoffs between ML engineers working in Jupyter Notebooks and application teams easy, and lets both teams keep working with the tools they already use and love. Check out a video of the Kit CLI here.
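
As a rough illustration of the idea (not the Kit CLI's actual implementation), the Python sketch below packs each component of a project (model, datasets, code, config) into its own tar layer and describes each layer with an OCI-style digest. The component names follow the description above; the media types, function names, and in-memory file layout are hypothetical.

```python
import hashlib
import io
import tarfile

# Hypothetical media types, one per component; KitOps' real media types may differ.
MEDIA_TYPES = {
    "model": "application/vnd.example.modelkit.model.v1.tar",
    "datasets": "application/vnd.example.modelkit.dataset.v1.tar",
    "code": "application/vnd.example.modelkit.code.v1.tar",
    "config": "application/vnd.example.modelkit.config.v1.tar",
}


def tar_bytes(files: dict) -> bytes:
    """Pack in-memory files {path: bytes} into an uncompressed tar archive."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name in sorted(files):  # stable order so the same files give the same bytes
            data = files[name]
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            info.mtime = 0  # fixed timestamp, also for reproducible digests
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def pack_components(components: dict) -> tuple:
    """Return (layer descriptors, blob store keyed by digest) for {component: {path: bytes}}."""
    layers, blobs = [], {}
    for kind, files in components.items():
        blob = tar_bytes(files)
        digest = "sha256:" + hashlib.sha256(blob).hexdigest()
        layers.append({"mediaType": MEDIA_TYPES[kind], "digest": digest, "size": len(blob)})
        blobs[digest] = blob
    return layers, blobs


if __name__ == "__main__":
    # Hypothetical project contents standing in for files next to a Notebook.
    project = {
        "model": {"model.joblib": b"<serialized model>"},
        "datasets": {"data/train.csv": b"x,y\n1,2\n"},
        "code": {"notebooks/train.ipynb": b"{}"},
        "config": {"params.json": b'{"learning_rate": 0.01}'},
    }
    layers, _ = pack_components(project)
    for layer in layers:
        print(layer["mediaType"], layer["digest"][:19], layer["size"])
```

Keeping each component in its own layer means a change to, say, the dataset produces a new digest for that layer only, while the other layers keep theirs.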

KitOps' philosophy is centered on improving collaboration without requiring an overhaul of existing processes. By building on industry standards, we make it easier for people beyond data scientists to take part in the model development process. Because ModelKits and Kitfiles are compatible with current tools, established deployment pipelines and endpoints don’t need significant modifications.

Unmatched model traceability and reproducibility

KitOps' approach to model traceability and reproducibility differs from the common practice of simply using a Dockerfile. To be clear, there’s nothing wrong with Dockerfiles; however, baking your hyperparameters and datasets into a container is not a practical approach. Kit provides a single, modular package containing everything you need to reproduce, integrate with, or test the model throughout its lifecycle. Users can extract specific components of a ModelKit, such as the dataset or the model itself, or retrieve the entire package.
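
The modular-package idea can be sketched in a few lines of Python: because each component lives in its own digest-addressed layer, a consumer can unpack only the layers it needs and verify each one against its recorded digest. This is a hypothetical sketch with made-up media types and an in-memory dictionary standing in for a registry; it is not how the Kit CLI is implemented.

```python
import hashlib
import io
import tarfile
from typing import Dict, Optional, Set

# Hypothetical per-component media types (illustrative only).
MEDIA_TYPES = {
    "model": "application/vnd.example.modelkit.model.v1.tar",
    "datasets": "application/vnd.example.modelkit.dataset.v1.tar",
}


def make_layer(files: Dict[str, bytes], media_type: str):
    """Tar up files and return (descriptor, blob); stands in for a packing step."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    blob = buf.getvalue()
    digest = "sha256:" + hashlib.sha256(blob).hexdigest()
    return {"mediaType": media_type, "digest": digest, "size": len(blob)}, blob


def extract(manifest: dict, blobs: Dict[str, bytes],
            components: Optional[Set[str]] = None) -> Dict[str, bytes]:
    """Unpack only the requested components, or the whole package if components is None."""
    wanted = None if components is None else {MEDIA_TYPES[c] for c in components}
    files = {}
    for layer in manifest["layers"]:
        if wanted is not None and layer["mediaType"] not in wanted:
            continue  # skip (and never read) layers the caller didn't ask for
        blob = blobs[layer["digest"]]
        # Refuse content that doesn't match the digest recorded in the manifest.
        if "sha256:" + hashlib.sha256(blob).hexdigest() != layer["digest"]:
            raise ValueError(f"digest mismatch for {layer['digest']}")
        with tarfile.open(fileobj=io.BytesIO(blob), mode="r") as tar:
            for member in tar.getmembers():
                f = tar.extractfile(member)
                if f is not None:  # skip directories and other non-file entries
                    files[member.name] = f.read()
    return files


if __name__ == "__main__":
    model_desc, model_blob = make_layer({"model.bin": b"weights"}, MEDIA_TYPES["model"])
    data_desc, data_blob = make_layer({"train.csv": b"x,y\n1,2\n"}, MEDIA_TYPES["datasets"])
    manifest = {"layers": [model_desc, data_desc]}
    registry = {model_desc["digest"]: model_blob, data_desc["digest"]: data_blob}
    print(sorted(extract(manifest, registry, {"datasets"})))  # ['train.csv']
    print(sorted(extract(manifest, registry)))                # ['model.bin', 'train.csv']
```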

Furthermore, storing ModelKits in an organization’s registry provides a detailed record of state changes, which is crucial for auditing. And since ModelKits are OCI-compliant, you can use the registries you’ve already adopted (Jozu Hub, Docker Hub, Amazon ECR, Artifactory, Quay, etc.). ModelKits are also ideally suited to software bill of materials (SBOM) initiatives, addressing the growing and increasingly important concerns about security and compliance in AI/ML model deployment.
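
Since every component in an OCI manifest is pinned by digest, comparing two versions of a package shows exactly which parts changed, which is the kind of record auditing and SBOM work depend on. The Python sketch below builds a simple per-component inventory of digests and diffs two versions. The media types and function names are hypothetical, and the output is a plain inventory, not a standard SBOM format such as SPDX or CycloneDX.

```python
import hashlib

# Hypothetical component -> media type mapping (illustrative only), plus its inverse.
MEDIA_TYPES = {
    "model": "application/vnd.example.modelkit.model.v1.tar",
    "datasets": "application/vnd.example.modelkit.dataset.v1.tar",
    "code": "application/vnd.example.modelkit.code.v1.tar",
}
COMPONENTS = {mt: name for name, mt in MEDIA_TYPES.items()}


def layer(blob: bytes, media_type: str) -> dict:
    """Build an OCI-style layer descriptor for a blob."""
    return {"mediaType": media_type,
            "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
            "size": len(blob)}


def inventory(manifest: dict) -> dict:
    """Map each component name to the digest that pins its exact content.

    Assumes one layer per component; unknown media types are listed as-is.
    """
    return {COMPONENTS.get(l["mediaType"], l["mediaType"]): l["digest"]
            for l in manifest["layers"]}


def changed(old: dict, new: dict) -> dict:
    """Report components added, removed, or modified between two manifests."""
    before, after = inventory(old), inventory(new)
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "modified": sorted(k for k in before.keys() & after.keys() if before[k] != after[k]),
    }


if __name__ == "__main__":
    v1 = {"layers": [layer(b"weights v1", MEDIA_TYPES["model"]),
                     layer(b"train.csv", MEDIA_TYPES["datasets"])]}
    v2 = {"layers": [layer(b"weights v2", MEDIA_TYPES["model"]),
                     layer(b"train.csv", MEDIA_TYPES["datasets"]),
                     layer(b"train.py", MEDIA_TYPES["code"])]}
    print(changed(v1, v2))
    # {'added': ['code'], 'removed': [], 'modified': ['model']}
```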

The inevitability of DevOps integration

We believe that KitOps marks a critical moment for AI/MLOps and DevOps. By addressing the issues plaguing model deployment and collaboration, KitOps paves the way for an era in which the line between MLOps and DevOps becomes increasingly blurred. To be clear, I’m not saying that existing MLOps practices aren’t valuable; rather, I’m arguing that the foundational practices and tools in our DevOps workflows are flexible enough to incorporate the requirements of AI/ML deployment.

We're still early in the development process and are really interested in collecting community feedback.

You can learn more about KitOps at https://kitops.ml

And check out the source code here: https://github.com/jozu-ai/kitops