Containers are hot stuff right now.

So it’s natural that you’ve found your way here wondering what the business case and benefits of containers could be. I’m guessing you’re assessing whether containers would make sense for your company. If I’m right, you’re in the right place.

By the end of this article you’ll know what containers are and what their benefits and drawbacks are, and you’ll have some decision-making criteria to help you build your business case.

We've got quite a bit of ground to cover, so let's get to it.

Firstly, What Is a Container?

Before we get into pros and cons, we need to have a basic understanding of what a container is. We need to understand how containers operate and what areas of our company could be positively affected by the implementation of containers.

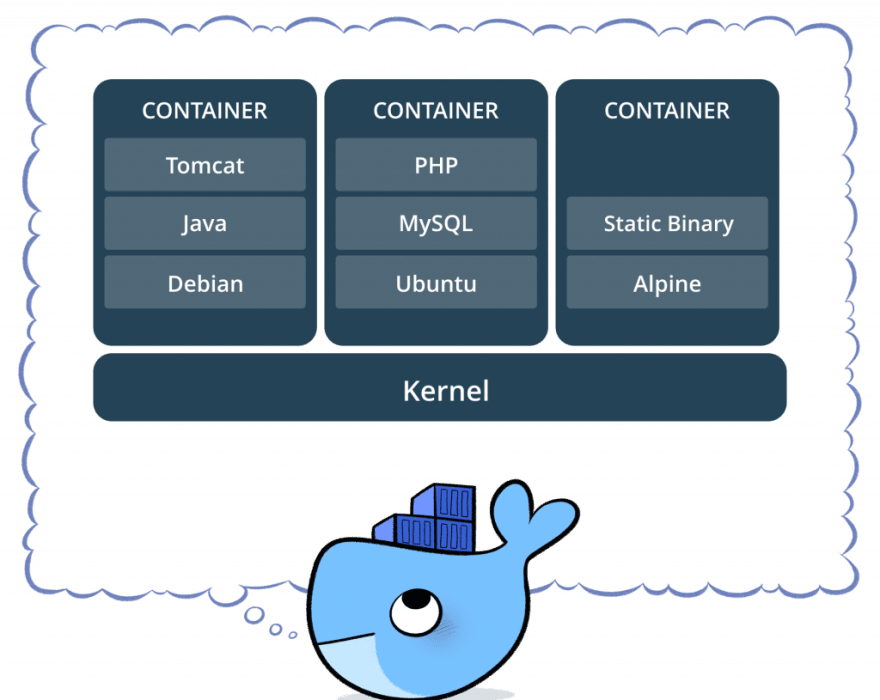

Docker, currently the most popular container software on the market, defines a container as:

A standardized unit of software.

Sounds pretty vague to me. Anyone working in software will have heard phrases similar to this, such as component, module, or app.

Aren't these all standardized units of software? What makes a container unique?

According to Docker:

Containers isolate software from its environment and ensure that it works uniformly despite differences for instance between development and staging.

Bingo.

This is the real value of containers: isolation. Containers are pretty much isolated processes running on a host machine. I say pretty much since there are some instances when a container isn't truly isolated. But we'll get into that a bit later on.

Why is isolation important? Because isolated software runs with the same behavior no matter where you put it.

This is useful when:

- Moving the software between environments, e.g., for testing purposes.

- Moving the software to a different running location, e.g., from on-site servers to the cloud (or even between cloud providers).

- Scaling the software to run compute power concurrently (horizontal scaling), e.g., to achieve high availability or performance requirements.

Simply put: containers allow software engineers to create small, isolated pieces of code that can run on any machine, anywhere, in a consistent fashion.

Don't worry if this is a little too abstract, because we are going to break it down.

But first, to ensure a balanced discussion, let's look at the alternatives to containers.

Container Alternatives

If you’ve been in tech for a while, my previous statement about creating isolated software might have you raising your eyebrows, because there are indeed other methods for isolation and portability that don’t require containers.

To see the benefits containers bring, we should review some of the alternatives.

Let's take a quick look at these options.

Alternative 1: Manual Configuration

Manual configuration is essentially the antithesis of containers. Rather than having your code run in the same way on all machines, you become susceptible to the most fickle errors of them all: human ones.

Manual configuration is simply using an individual, a human, to manage servers or applications. Servers that are manually configured (in what is often now considered a fairly old-school way) are maintained by an operations team or a systems administrator.

Even though it may be old school, we must consider manual configuration as an option because often, especially for small companies, it can be the most pragmatic solution.

As we'll soon see, containers can help companies looking to achieve scale. But without the right conditions, adding more complexity to a new project might bring more risk than it makes sense to take on.

Alternative 2: Virtual Machines (VM)

Next up, virtual machines.

For a long time, the tech industry standardized on the idea of virtual machines as a way to create the aforementioned isolation and combat the inconsistencies of manual configuration.

Simply put: virtual machines are small, isolated machines that run within another machine (called the host). I think it's easiest to think of virtual machines as a computer within a computer.

For a long time, I couldn't discern the difference between VMs and containers. The penny finally dropped when I understood containers aren't magic, nor are they just boxes, as they're often drawn.

Instead, they're a protected process running on a machine.

And because containers are processes, they can share the system resources of their host, unlike virtual machines that require an entire operating system within each virtual machine you want to run. This creates a trade-off between the near-perfect isolation of a virtual machine and the speed and lightweight benefits of a container.
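To make the "containers are processes" point a little more concrete, here is a rough Python sketch. It is an analogy, not a container: a plain child process has none of the isolation a container runtime adds. What it does share with a container is the host's kernel, which is exactly what a virtual machine does not share, since it boots its own. The function name `kernel_seen_by_child` is made up for this illustration.

```python
import platform
import subprocess
import sys


def kernel_seen_by_child() -> str:
    """Start a separate OS process and return the kernel release it reports."""
    result = subprocess.run(
        [sys.executable, "-c", "import platform; print(platform.release())"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


# The parent and the child are separate processes, yet both report the same
# kernel, because both run directly on the host's operating system.
parent_kernel = platform.release()
child_kernel = kernel_seen_by_child()
print(parent_kernel == child_kernel)
```

A VM running on the same host could report a completely different kernel, because it carries its own operating system. That extra operating system is where the stronger isolation, and the extra weight, comes from.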

Alternative 3: Serverless

Lastly, the newest entrant in the getting-code-onto-a-server market, serverless.

Serverless tackles the difficulties of matching application and infrastructure in a different way: by removing infrastructure from the equation completely.

But how does this work? Surely there must still be a server?

In serverless, there are still servers that run our code, but this responsibility is passed to a cloud provider.

Instead of putting applications into the cloud as containers or virtual machines, we send small functions of code. These are then run individually based on demand. Serverless can be great for scaling and achieving lower costs, but it also comes with added complexity.
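As a rough idea of what "small functions of code" means, here is a minimal sketch in Python. The `handler(event, context)` shape mirrors the convention used by AWS Lambda's Python runtime, but the event fields and the response format below are assumptions made up for this example, not any provider's required contract.

```python
import json


def handler(event, context):
    """Hypothetical function that a cloud provider would invoke once per request."""
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Locally we call the function ourselves. In a serverless setup the provider
# would make this call on demand, and we would never manage the server.
response = handler({"name": "containers"}, None)
print(response["body"])
```

Notice there is no web server, port, or operating system anywhere in the code. That is the appeal, and also the source of the coupling discussed next: everything around the function belongs to the provider.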

Serverless and containers are comparable, but they’re not direct competitors. By design, serverless ties you to a cloud provider. While some tools such as Serverless Framework help you minimize the lock-in, holding the provider at arm's length might mean losing out on a lot of the benefits.

Companies that adopt serverless often benefit by going fully cloud-native and embracing all of a cloud provider's features without fear of lock-in. So, if cloud agnosticism is a goal, containers could be a better choice than serverless.

Benefits of Containers

Hopefully by now you have a good grasp of what a container is and what the alternatives are: manual configuration, VMs, and serverless. With this base of knowledge, let's move on to looking in more detail at the specific benefits of containers.

Benefit 1: Deployment Flexibility

Containers don't have to just work for applications such as websites. Since they are simply processes, it's easy to scale them and use them for different purposes.

Containers also work for other ad-hoc and operational tasks, such as:

- Database schema updates.

- Performance testing.

For instance, I recently launched a performance testing suite on a fleet of containers. Each container had a performance profile, and I could easily scale the fleet up and down to mimic load for testing purposes.

These containers could just as easily be run on a local machine as they could on AWS, GCP, or any other cloud provider.

Benefit 2: Cloud Agnosticism (Portability)

When it comes to architecture decisions, there will always be a stakeholder who will ask...

"What if we want to move cloud providers? How hard will that be?"

With containers, the answer is: not too hard.

Containers themselves can run on open source software, such as Linux. They're typically defined by a build file kept alongside your code (a Dockerfile, in Docker's case), meaning that the packaging instructions live with the software, not with the cloud provider.

So if you do choose to move your application to a different set of servers, you can do so with relative ease.

By contrast, a solution such as serverless is, by its very nature, highly coupled to the cloud provider. This is worth considering if portability is a concern for you.

Benefit 3: Fine-Grained Architectural Implementation

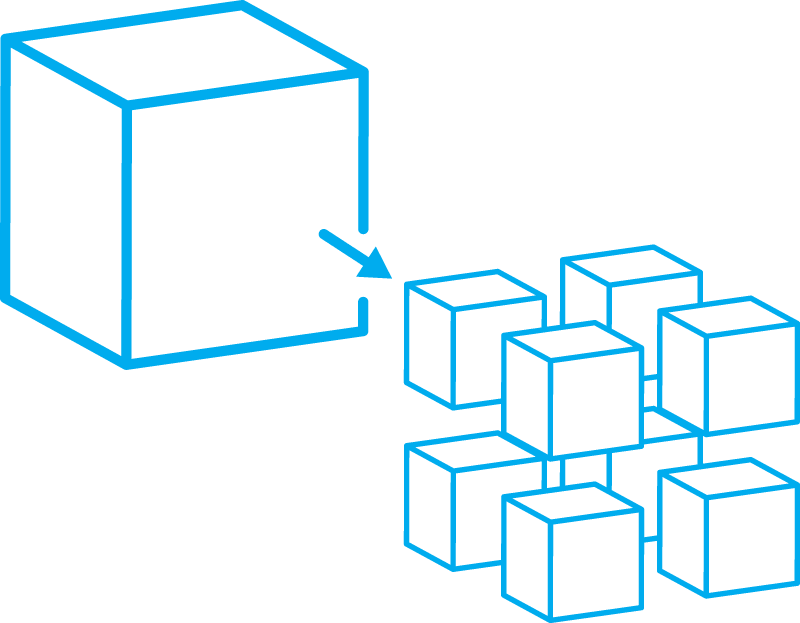

Often a container-based solution for an application goes hand in hand with a microservices-type architecture. In microservices, a large application is broken down into smaller parts that are typically worked on by separate teams and deployed in isolation.

When separated in such a way, you can scale them horizontally, which simply means that rather than making the machine bigger, you run two of them side by side. This can then give you a fine level of granularity when performance tuning an application, allowing you to scale up and down only where needed.
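Here is a small Python sketch of the horizontal scaling idea, assuming a hypothetical `checkout` service and simple round-robin distribution of requests. Real load balancers are more sophisticated, so treat this purely as an illustration of "run more copies side by side".

```python
from itertools import cycle


def make_replicas(count):
    """Return names for `count` identical copies of a hypothetical service."""
    return [f"checkout-{i}" for i in range(1, count + 1)]


def distribute(requests, replicas):
    """Assign each request to a replica in round-robin order."""
    assignment = {replica: [] for replica in replicas}
    for request, replica in zip(requests, cycle(replicas)):
        assignment[replica].append(request)
    return assignment


requests = list(range(6))

# Two replicas handle three requests each.
print(distribute(requests, make_replicas(2)))

# Scaling out to three replicas drops that to two requests each,
# without making any single machine bigger.
print(distribute(requests, make_replicas(3)))
```

Because only the `checkout` part is scaled here, the rest of the application can keep running with a single copy. That is the fine-grained tuning this section is describing.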

Microservice-type architecture brings additional advantages, as teams can work independently, drastically increasing the speed of implementing change (at least in theory).

On the other hand, microservice architectures are difficult to get right and require expertise and team communication. Split them in the wrong place and you have a very complex, distributed application.

But with containers in place, you have the option of whether to run one giant monolithic-type architecture or break your application down into pieces. You can even put it back together again if you split up your application in the wrong way.

Benefit 4: Easier Employment of Talented Engineers

A pragmatic—albeit slightly dry—reason to choose containers: resourcing.

Containers are a hot technology in the market right now. Most technology companies struggle to find good developers to work on their products and services.

So it makes sense to ensure that the technologies we run are in demand and that we can find good staff to work on our software.

Drawbacks of Containers

Okay, so now we've talked a lot about the merits of containers.

But to make sure we get a complete picture, let's take a look at all the ways containers can let us down, or worse: actually hurt our performance and ultimately, our company.

Drawback 1: Additional Complexity

Containers don't come alone. Unfortunately, oftentimes running containers means additional overhead in setting up systems to facilitate them. We will need somewhere to store our container images and likely, we'll want what is called a container orchestration tool.

On a practical level, container orchestration:

- Chooses how many containers to run at any given time.

- Tells our containers on what hosts they need to run.

- Starts, restarts, or destroys containers.
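The three responsibilities above can be sketched as a tiny "reconcile" function in Python. This is a toy model under stated assumptions, not how Kubernetes, Swarm, or ECS are implemented: the `reconcile` function, the `app-N` naming scheme, and the "least-loaded host" placement rule are all invented for this example.

```python
def reconcile(desired, running, hosts):
    """Return the actions needed to reach `desired` containers.

    running: dict mapping container name -> host it currently runs on
    hosts:   list of host names available for placement
    """
    running = dict(running)  # copy, so the caller's state is left untouched
    load = {host: 0 for host in hosts}
    for host in running.values():
        load[host] = load.get(host, 0) + 1

    actions = []
    counter = 0
    # Too few containers: start new ones on the least-loaded host.
    while len(running) < desired:
        while f"app-{counter}" in running:
            counter += 1
        name = f"app-{counter}"
        host = min(hosts, key=lambda h: load[h])
        running[name] = host
        load[host] += 1
        actions.append(("start", name, host))
    # Too many containers: stop the highest-named ones first.
    while len(running) > desired:
        name = sorted(running)[-1]
        host = running.pop(name)
        load[host] -= 1
        actions.append(("stop", name, host))
    return actions


print(reconcile(3, {"app-0": "host-a"}, ["host-a", "host-b"]))
print(reconcile(1, {"app-0": "host-a", "app-1": "host-b"}, ["host-a", "host-b"]))
```

In this model a restart is simply a crashed container disappearing from `running`, so the next pass starts a replacement. Even this toy version has to track state, make placement decisions, and handle scale-down, which hints at why real orchestration tools take time to learn and operate.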

This additional tooling brings with it added complexity. If you were operating in a monolithic architecture, scaling might be a simple case of making the host machine really big.

Not only this, but if you go ahead with containers, you'll need to start considering the other tooling you'll want to run. This can be both complex and time consuming.

Just to paint a picture of the current crowded orchestration market: a couple of the main technologies available are Swarm, by Docker, and Kubernetes, an orchestration tool originally built by Google.

In terms of cloud adoption, Kubernetes naturally comes as a managed service on Google Cloud Platform (GCP), and managed Kubernetes is also available on Microsoft's Azure, while AWS offers its own container orchestration solution called Elastic Container Service (ECS).

Most of these orchestration services are fairly new, and the moment you use a service such as ECS on AWS, you begin to couple yourself to that specific cloud provider and lose some of the original portability benefits of containers.

Drawback 2: The Learning Curve

Implementing new technology is rarely a breeze, despite what the 101 conference talks and the "Hello World" YouTube videos make us think. New technology means training your teams to use it. That means time away from their usual jobs, which may be difficult in your current situation. You should consider the cost that will go into learning something new.

The Big Question: To Container, or Not to Container?

I'm glad you've made it this far, as this is where things get really interesting. Now we're going to take everything we've learned about containers and consider whether or not they will work for your company.

In order to do this, here are the questions you should consider:

Do you have the risk tolerance for additional complexity? If you are at the start of a project, it may be worth opting for a simpler monolithic architecture. You could still build, package, and deploy your project in a single container, deferring the complexity of microservices and distributed systems to the future.

What is your team's level of enthusiasm for containers? If you're thinking of doing a big switch to container technology, keep in mind that the switch will require plenty of enthusiasm from your team. It would be worth your while to check in with your teams before adopting new technologies.

Do you have the ability to train your teams on containers? If you don't give your teams adequate opportunities to learn new technologies, building in time for experimentation and failure, then your journey to implementing containers could be fraught with difficulty. So consider the amount of time you have available to dedicate to this venture.

Do you have an immediate need for high scale? Containers could be a good fit for you if you'll need high levels of scale in the near future. If your application is set to serve millions of requests and you'll want to scale independent parts of your application, containers might be the right choice.

Conclusion

And that's a wrap on our whirlwind tour of containers, their benefits, and their drawbacks.

Hopefully you've had a chance to reflect on whether or not they might work for you.

If you've got an immediate need for scale, the ability to take on additional complexity, and an enthusiastic team, then maybe it's time to get going with containers. If you're missing one of these areas, maybe you want to hold off and investigate containers a little more before making your decision.

Containers aren't a silver bullet, but they could be the solution to unlocking a big performance boost for your company.

Now, armed with your newfound knowledge about the benefits and risks of containers, you should be able to make an informed decision about whether containers are right for your company. And remember: no setup works for every situation. So keep an open mind, explore, experiment, and stay curious.