How is RAG used?

What's up with the context ?

Does RAG work out-of-the-box?

What do you need to configure to make it work?

RAG = Retrieval Augmented Generation

RAG, or Retrieval Augmented Generation combines the concepts of:

- Information Retrieval - searching for relevant documents within your database/index based on a query (question).

- Augmenting - adding the extra information (context) to the query you are going to make.

- Generative AI - a model (usually an LLM) takes in a query/request and generates an answer for it (in natural language).

Putting those together, when a user asks a question, you first retrieve relevant information that might help answer that question; then you give this information (maybe data entries from your database, some recent articles or news, etc) as context to the LLM.

So now you can generate an answer based not only on the initial question; but the additional information you retrieved as well - giving more accurate and relevant responses.

It's a simple question.

A basic example:

Query = "What is Iulia Feroli's job?"

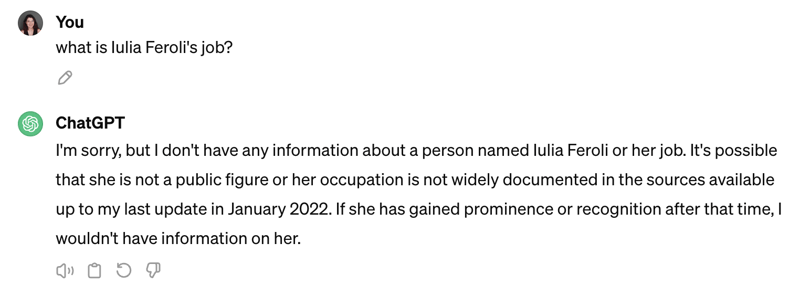

Sending this to a general LLM would only give the correct answer if some information about me was added to the training dataset. (which I very much doubt.) If not, the response would either be an incorrect guess (which we would call a "hallucination") or an "I don't know".

To test out the theory I asked chatGPT (see bellow) and it basically told me I'm not famous enough 🙄.

So we need a little more context...

Let's try the Information Retrieval route.

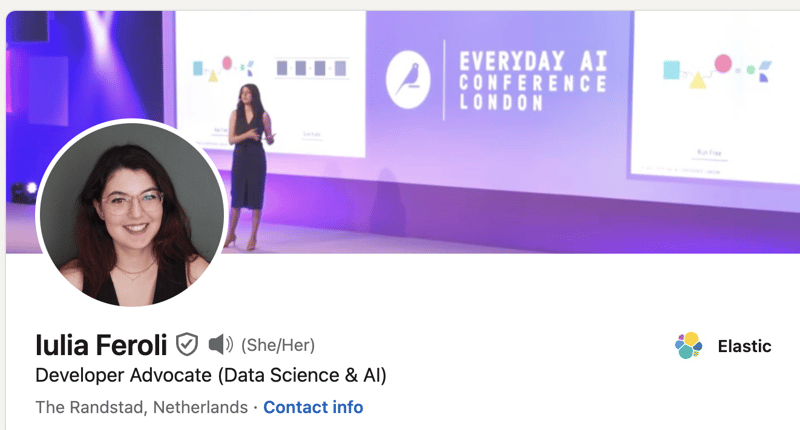

If I have a database or other source that might include some information about non-prominent, not-yet-recognizable figures; I can first run a search for Iulia Feroli over there. Let's pretend my database is LinkedIn. With a quick search I get:

Wow, she sure looks like a public figure!

In a real use case, your search experience for this step might be more complex. You could search for usernames in a database, through scans of PDFs, using misspellings (my name is not Lulia btw), or even semantic search if you look up "red haired speaker at that conference last week in Amsterdam?".

Context + Query --> Flattering Response

Now, to perform RAG, instead of just sending the query to the LLM, I can send along the context I retrieved and ask it to take that into account when generating the answer.

Then ideally we get back something like:

Iulia is an awesome developer advocate @elastic making content about NLP, search, AI, and python of course!

I say ideally because the results will still vary a lot depending on how you configure your RAG pipeline.

There are loads of out-of-the-box components you can use, so you don't have to code it all from scratch, but you do need to make some informed choices.

How will you do the information retrieval?

Obviously, I'd use elasticsearch, but going deeper... do you use "classic" or semantic search? Which embedding model works best with your data? How about the chunking and window size?Which LLM to choose for the generative AI part?

There are so many models available on HuggingFace and beyond to choose from, depending on the domain of your query; or even the extra training & customization you might want to apply.How to set it all up?

There are loads of great resources such as LangChain that allow you to create building blocks you can plug in and out to create your "chains".

And this is only scratching the surface!

I hope this gave you a good idea of what RAG is all about.

Some resources to get you further: