Welcome back to my series of Advent of Code solutions in MiniScript! Day 16 was... how to put this?

For the first time, I went to bed (after working on this for nearly an hour) without a solution. Ouch!

The challenge lays out a network of valves, some of which can be opened to relieve the pressure inside a volcano. Moving from one valve to another takes time, as does opening a valve; we have only 30 minutes to relieve as much total pressure as possible. In short, we have to find a path through a weighted graph that maximizes the score, but total path length is limited, and score provided by each node depends on how much time is left (i.e. length of the path up to that point) as well as its inherent value.

I tend to see most problems as search problems: depth first, breadth first, best-first (A*), and greedy are all variations of the same basic tree-search algorithm. Many problems fall into this category; any time you need to take a series of moves to find your way to a goal state, this is a great way to think of it. So I began trying to approach this one the same way.

The trouble is, there is no single goal state. We have to try all possible paths through the network in order to be sure we have found the best one. And there are just too many! This is a classic example of a combinatorial explosion. I left my code running all night (after completely failing to think of any more efficient way to approach it), and it still hadn't finished even the smaller sample problem.

Dynamic Programming to the Rescue

The right way to think of it is as a Dynamic Programming problem. Dynamic programming (DP) is just a fancy-shmancy term for: break a problem into smaller subproblems, and cache the results of those subproblems so you don't have to compute them again and again. This works great any time you can define your problem in such a way that recurring subproblems will actually happen.

For that to work, you have to ensure your state (the thing you're caching results for) doesn't depend in any way on how you got to that state. This is rather the opposite of how a standard tree-search algorithm works. In tree-search, your state would include your score so far, as well as a pointer to the parent node, so you can focus your search on the best estimated total score and also get a complete path back to the start when you're done.

With DP, it's different. Instead you must think: suppose I've already done some work, and scored some points. Now there are T minutes left, and X valves still available to open. What's the best additional score I could get with X valves in T minutes?

Then you start with the trivial cases. If there is no time left, or no valves left, then the best score you can get with that is zero. If there's only one valve left, the best you can do is to open that, and you get however many points that earns you in the time left.

If there's more than one valve left, then this is where DP recurses. (Of course you could also code this up with iteration instead of recursion, but recursion is pretty natural here and works fine.) Suppose there are two valves left, v1 and v2. We're going to either open v1, getting some points with that, and add the best score we could get with v2 in the remaining time; or we'll open v2, get some points with that, and add the best score we could get with v1 in the remaining time.

And here's the key bit: when defined that way, there are many, many cases where our bestScore function is being asked about some situation it's seen before. What's the best score you can get with valve JJ in 5 minutes? That will come up after a huge variety of other valve combinations that resulted in only JJ (and 5 minutes) being left. So we memoize them. ("Memoize" is computer science speak for basically "memorize" — that is, store the results in a cache so we can just look them up next time.)

This is the core of DP: asked to find the best score for a given state, it first checks the cache, and if a stored value for that state is found, return it and we're done. If not, then try each possible sub-state, calculating the score from that step alone plus the best score for that sub-state. Return the maximum of these as the result.

Code for Part 1

After reading the input file into a list of strings called lines, I parse the lines as follows. Each line becomes a little map containing "name", "rate", and "exits". Also, many of the valves have zero flow rate, and so should never be turned on; they're only there to form paths among the other valves. So I also keep a goodValves list of all the valves that should be considered for activation (plus valve "AA", which is always the starting point).

valves = {}

goodValves = []

for line in lines

print line

m = line.match("Valve {name} has flow rate={rate}; tunnels lead to valves {exits}")

if m == null then

m = line.match("Valve {name} has flow rate={rate}; tunnel leads to valve {exits}")

end if

m.rate = val(m.rate)

m.exits = m.exits.split(", ")

print m

valves[m.name] = m

if m.name == "AA" or m.rate > 0 then goodValves.push m.name

end for

Next, we're going to frequently need to know the travel time from one good valve to another. I chose to precompute these and keep them in a map of maps. So, for example, we'll be able to say travelTime["AA"]["DD"] to find the number of minutes it takes to go from valve AA to valve DD. To do this, I used that Search class I wrote after Day 12.

// Find the travel time from every good (rate > 0, plus "AA")

// to every other open valve.

travelTime = {}

pather = new search.Search

pather.neighbors = function(state)

result = {}

for e in valves[state].exits

result.push e

end for

return result

end function

for a in goodValves

timesFromA = {}

travelTime[a] = timesFromA

for b in goodValves

if b == a then continue // don't go to self

if b == "AA" and valves.AA==0 then continue // (don't bother going back to A)

timesFromA[b] = pather.findPath(a, b).len - 1

end for

end for

// ...and then remove AA, if it has no flow

if valves.AA == 0 then goodValves.removeVal "AA"

This much, I actually had working last night. What I failed on was the DP part. For that, I decided to make a little class to represent the state. But note that it's important to define this in a way that represents the remaining work still to do, not any work that was already done. So it has a list of still-closed valves, as well as the time left, but it does not have any notion of how we got here or how many points have already been earned.

State = {}

State.current = "AA" // current location

State.closed = null // list of valves still to open

State.timeLeft = 30

State.make = function(location, closed, timeLeft)

noob = new State

noob.current = location

noob.closed = closed

noob.timeLeft = timeLeft

return noob

end function

State.key = function

return self.current + self.closed.join("") + self.timeLeft

end function

It also includes a key method that returns a string key representing that state, for use in the memo map. MiniScript is perfectly capable of hashing objects (maps) as map keys, but doing so is slower than hashing a simple string. So, using this key method should be faster than storing states directly. This let me define my memo map:

// memo key: a State.key; value: best score (released pressure)

// from just the open valves in that time

memo = {}

So for example, if state is a state that represents valves CC, FF, and GG still closed with 7 minutes left, then memo[state.key] remembers the best additional score we could get from that state.

And with all that set up, the actual bestScore method is relatively straightforward.

// Get the best score possible for the given state

bestScore = function(state)

key = state.key

if memo.hasIndex(key) then return memo[key]

if state.timeLeft < 1 then return 0 // no time to do anything

timeLeft = state.timeLeft

// consider opening this valve

rate = valves[state.current].rate

if rate > 0 then

baseScore = rate * (state.timeLeft - 1)

timeLeft = timeLeft - 1

else

baseScore = 0

end if

whatsLeft = state.closed[:]

whatsLeft.removeVal state.current

// consider doing that, and then going to each of the other closed valves

score = baseScore

for dest in whatsLeft

travel = travelTime[state.current][dest]

if travel >= state.timeLeft then continue

dscore = baseScore + bestScore(State.make(dest, whatsLeft,

timeLeft - travel))

if dscore > score then score = dscore

end for

// and now we have our best score! remember it, and return it.

memo[key] = score

if memo.len % 1000 == 0 then

print "Check " + memo.len + ": " + key + " has score " + score

end if

return score

end function

Note the bail-outs at the top: if we've already got an answer for this state in the memo table, then just return that. This is the crucial part that makes DP work. Also, if we are out of time, we bail return 0; that's what makes the recursion terminate.

After that, it's just a matter of trying each possible action in this state: try opening the valve and doing no more; and also try taking each of the exits to some other good valve, after opening this one. Of all those tries, remember the best score, and return that — after storing it in the memo table for future use — as the result of the function.

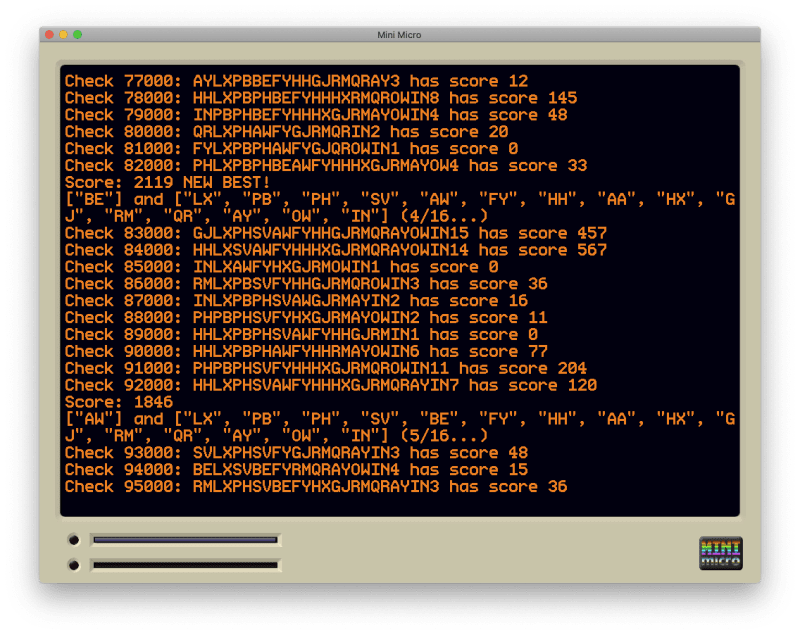

(I also included some debug output every 1000 entries, which of course isn't necessary for it to work, but which I find highly comforting!)

After all that, the main program is trivial:

startState = State.make("AA", goodValves, 30)

print bestScore(startState)

We literally just define the start state, and print the bestScore that can be achieved from that state.

This runs very efficiently. On the sample data, it memoizes (computes) 402 states and finishes pretty much instantly. On the full input file, it took maybe 10 seconds to run, and computed 113,523 states before producing the right answer.

(Here's the complete program all in one place.)

import "aoc"

import "search"

if 0 then fname = "input.txt" else fname = "example.txt"

lines = file.readLines(fname)

if not lines[-1] then lines.pop

valves = {}

goodValves = []

for line in lines

print line

m = line.match("Valve {name} has flow rate={rate}; tunnels lead to valves {exits}")

if m == null then

m = line.match("Valve {name} has flow rate={rate}; tunnel leads to valve {exits}")

end if

m.rate = val(m.rate)

m.exits = m.exits.split(", ")

print m

valves[m.name] = m

if m.name == "AA" or m.rate > 0 then goodValves.push m.name

end for

// Find the travel time from every good (rate > 0, plus "AA")

// to every other open valve.

travelTime = {}

pather = new search.Search

pather.neighbors = function(state)

result = {}

for e in valves[state].exits

result.push e

end for

return result

end function

for a in goodValves

timesFromA = {}

travelTime[a] = timesFromA

for b in goodValves

if b == a then continue // don't go to self

if b == "AA" and valves.AA==0 then continue // (don't bother going back to A)

timesFromA[b] = pather.findPath(a, b).len - 1

end for

end for

// ...and then remove AA, if it has no flow

if valves.AA == 0 then goodValves.removeVal "AA"

State = {}

State.current = "AA" // current location

State.closed = null // list of valves still to open

State.timeLeft = 30

State.make = function(location, closed, timeLeft)

noob = new State

noob.current = location

noob.closed = closed

noob.timeLeft = timeLeft

return noob

end function

State.key = function

return self.current + self.closed.join("") + self.timeLeft

end function

// memo key: a State.key; value: best score (released pressure) from

// just the open valves in that time

memo = {}

// Get the best score possible for the given state

bestScore = function(state)

key = state.key

if memo.hasIndex(key) then return memo[key]

if state.timeLeft < 1 then return 0 // no time to do anything

timeLeft = state.timeLeft

// consider opening this valve

rate = valves[state.current].rate

if rate > 0 then

baseScore = rate * (state.timeLeft - 1)

timeLeft = timeLeft - 1

else

baseScore = 0

end if

whatsLeft = state.closed[:]

whatsLeft.removeVal state.current

// consider doing that, and then going to each of the other closed valves

score = baseScore

for dest in whatsLeft

travel = travelTime[state.current][dest]

if travel >= state.timeLeft then continue

dscore = baseScore + bestScore(State.make(dest, whatsLeft,

timeLeft - travel))

if dscore > score then score = dscore

end for

// and now we have our best score! remember it, and return it.

memo[key] = score

if memo.len % 1000 == 0 then

print "Check " + memo.len + ": " + key + " has score " + score

end if

return score

end function

startState = State.make("AA", goodValves, 30)

print bestScore(startState)

Part Two

The second part of the challenge added another participant: a trained elephant, who can also go around opening valves. This reduces your available time to 26 steps, but now there are two workers operating in parallel.

After a bit of thought, I realized that we can look at it this way: I'm going to do some valves, and the elephant's going to do some valves. There will be no overlap between those sets of valves; no reason for me to visit valves the elephant's going to open, or vice versa. They are disjoint sets.

For any particular division of labor, finding the best possible score is no harder than what we did before. It's just bestScore(my set) plus bestScore(elephant's set).

So, it's just a matter of finding all the different ways we can divide up the valves between me and the elephant. That's quite a lot -- but it's thousands, not millions.

Only the main program needed to be changed:

// Find all ways to divide up the valves among me and the elephant.

// (Note that it doesn't matter who does which, so we only need to

// go up to half the number of good valves.)

totalBest = 0

for n in range(1, goodValves.len/2)

combos = goodValves.combinations(n)

for i in combos.indexes

setA = combos[i]

setB = goodValves[:]

for v in setA; setB.removeVal v; end for

print setA + " and " + setB + " (" + i + "/" + combos.len + "...)"

score = bestScore(State.make("AA", setA, 26)) +

bestScore(State.make("AA", setB, 26))

print "Score: " + score + " NEW BEST!" * (score > totalBest)

totalBest = max(totalBest, score)

end for

end for

print "Total best: " + totalBest

I'm using the list.combinations method (in my AoC module) to find all the different ways we can form setA (my valves) by taking n of the goodValves. Then, we find setB (the elephant's set) by simply removing each of mine from a copy of the full set.

I added a print statement on every division of labor, which also reports which combo we're on out of the total number of combos (for a given my-set size n). Again, this isn't necessary, but it shows progress that was especially comforting, because this did take quite a while. (I didn't time it, but it was somewhere around 20 minutes.)

And actually, that extra output really illustrates the value of dynamic programming! In the early iterations, we're seeing a lot of states we've never seen before, so it takes a while to find the best score. You can see it checking thousands of new states (and taking a second or two) for each set of inputs.

But towards the end, most of the sub-states are ones that have already been checked. The "every thousand checks" output appears now and then, but for most of the combos, it is silent, indicating that it needed to do relatively little computation. And by this point it was cranking through many sets per second.

Altogether, the code had to check about 3.5 million unique states to solve this challenge. That's a lot, but it's doable. Without DP, the number of states to compute would have been much, much higher, and as I can testify, even letting the code run all night would not be enough!

Final Thoughts

Oh, this is where I usually report my ranking, isn't it?

Fine, then. I placed 6955th on Part 1, and 3982nd on Part 2. Certainly the worst I've done all year, but as it's the first time I ever gave up and went to bed, this isn't surprising.

More importantly, what are the lessons learned?

I've used dynamic programming before, but (as you can tell) it's not my go-to tool, and I sometimes struggle to see how it applies. I think working through this problem has made that clearer and stronger for me. The key is to cast your problem in such a way that you can define a smaller, remaining problem that doesn't depend on how you got to that state in the first place. If you can do that, then you can add a memo map, do a simple recursion over the sub-states, and quickly get an answer.

I'm going to keep an eye out for problems like this in the future, and try to be quicker in recognizing them. So, while I did poorly in the contest, I feel like it's made me a better programmer — and in any way that matters, that's a win!