
Motivation

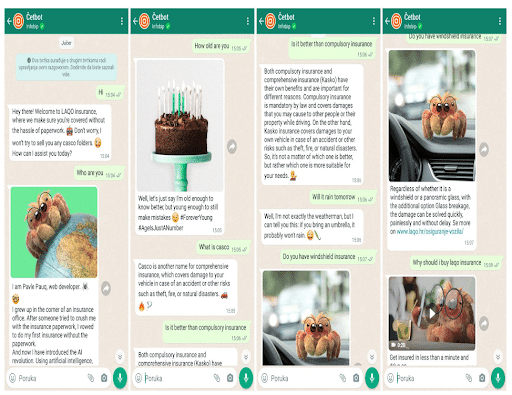

With the arrival of ChatGPT and other LLMs (Large Language Models), chatbots have undergone a revolution. Talking to a chatbot has become much more like talking to a human. The motivation behind the project was to let clients easily create their own LLM-powered business chatbots.

These chatbots should:

- Help end users solve their problems faster.

- Represent the brand’s values.

- Intelligently transfer to human agents when needed.

Today we enable our clients to build LLM chatbots in just 5 minutes through Infobip’s Answers platform.

Challenges

Mainstream adoption of LLMs is growing, but it is still relatively new and comes with its own challenges.

Missing data

LLMs, specifically ChatGPT, are reportedly trained on data up to September 2021. Clients may care about more recent data and about data specific to their business.

How can we give the chatbot knowledge it was not trained on?

One technique that has proven effective is in-context learning.

Given a user question, we retrieve a few short, relevant document chunks and provide them to the LLM chatbot. The chatbot should then generate a response using the information in these chunks.
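A minimal sketch of how retrieved chunks might be packed into a single request, assuming the role/content message format used by chat-completion style APIs. The function name `build_messages`, the instruction wording and the sample chunks are hypothetical, not the prompt used in production.

```python
def build_messages(question: str, chunks: list[str]) -> list[dict[str, str]]:
    """Assemble a chat request that grounds the answer in retrieved chunks."""
    # Number the chunks so they are easy to tell apart in the prompt.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    system = (
        "You are a customer support assistant. "
        "Answer using only the information in the context below. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{context}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]

# Hypothetical chunks returned by the retrieval step.
messages = build_messages(
    "Does my policy cover windshield damage?",
    [
        "Windshield damage is covered under comprehensive car insurance.",
        "Claims can be filed through the mobile app.",
    ],
)
```

Putting the chunks in the system message and the raw question in the user message keeps the user's words separate from the grounding material.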

Hallucinations

ChatGPT is an autoregressive probabilistic model.

Given an input, ChatGPT predicts the token (word piece) that should come next, appends it to the input, and repeats the process until an end token is predicted. Because each token is chosen from a probability distribution, the model may output something that is misleading or incorrect.
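To make the autoregressive loop concrete, here is a toy sketch in which a hand-written bigram table stands in for the model. The table, the `<start>`/`<end>` tokens and the `generate` function are invented for illustration. A real LLM conditions on the whole input rather than only the last token, but the predict, append, repeat loop is the same.

```python
import random

# Hypothetical next-token probabilities, keyed by the previous token only.
BIGRAMS = {
    "<start>": {"your": 1.0},
    "your": {"policy": 1.0},
    "policy": {"covers": 0.6, "excludes": 0.4},
    "covers": {"theft": 0.5, "<end>": 0.5},
    "excludes": {"theft": 0.5, "<end>": 0.5},
    "theft": {"<end>": 1.0},
}

def generate(rng: random.Random, max_tokens: int = 10) -> list[str]:
    tokens = ["<start>"]
    for _ in range(max_tokens):
        dist = BIGRAMS[tokens[-1]]
        # Sample the next token. Any token with non-zero probability can be
        # picked, so "covers" and "excludes" are both fluent but only one is true.
        next_token = rng.choices(list(dist), weights=list(dist.values()))[0]
        if next_token == "<end>":
            break
        tokens.append(next_token)  # feed the prediction back in as input
    return tokens[1:]

print(" ".join(generate(random.Random(0))))
```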

Working with the digital insurance company LAQO, this was something we had to keep an eye on. In the insurance industry, responses must be as accurate as possible because they may have legal consequences.

An example of hallucination would be a chatbot telling a user that LAQO covers certain costs that are, in fact, not covered by their type of insurance.

There is currently no solution for hallucinations; even the most powerful models, like GPT-4, hallucinate.

A few things can help reduce them:

- Model parameters like temperature can be adjusted. A lower temperature makes output less random, which can lessen the chance of hallucination.

- Well-crafted prompts with instructions specific to the client’s business tend to reduce hallucinations. Usually, we can do better than the generic prompts that ship with popular LLM frameworks.

- The chatbot is constrained to respond only to topics covered by the retrieved data chunks.
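The temperature point can be illustrated with temperature-scaled softmax, the usual way a sampling temperature is applied to a model's raw scores (logits). The logit values below are made up. A lower temperature concentrates probability on the top-scoring token, so sampling strays less often. Note that this makes output more deterministic, not more factual: if the top token is wrong, it stays wrong.

```python
import math

def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities, sharpening (T < 1) or flattening (T > 1) them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use argmax for greedy decoding")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (1.0, 0.2):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```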

Prompts

Writing prompts, in other words instructions for LLMs, is tricky. A good understanding of how an LLM works helps a lot. Patience and a willingness to experiment with different ways of phrasing an instruction may help even more.

Different LLMs have different quirks. ChatGPT and similar LLMs are trained to follow instructions, but they are not perfect. Using simple and clear instructions helps.

Response format

Sometimes, an LLM may produce a response in an undesired format. It may even “leak” details from the prompt or system message that are meant to stay “internal”. Instruction following is an area of ongoing improvement for LLMs.
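One pragmatic guard against leaked instructions is to check each response before it is sent to the user. The sketch below flags a response that repeats a long verbatim run of the system prompt, using `difflib.SequenceMatcher` from the Python standard library. It is an assumption, not the approach described in this article. The function name and the 40-character threshold are arbitrary choices.

```python
from difflib import SequenceMatcher

def leaks_system_prompt(response: str, system_prompt: str, min_chars: int = 40) -> bool:
    """Return True if the response contains a long verbatim run of the system prompt."""
    a, b = response.lower(), system_prompt.lower()
    # autojunk=False so long, repetitive prompts are compared faithfully.
    matcher = SequenceMatcher(None, a, b, autojunk=False)
    match = matcher.find_longest_match(0, len(a), 0, len(b))
    return match.size >= min_chars
```

A flagged response could be regenerated or replaced with a fallback message. Paraphrased leaks will slip past a verbatim check like this one.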

Context window

Current LLMs typically have a small context window: the number of tokens an LLM can take as input and generate in a single request. Splitting work across multiple LLM requests is one strategy for dealing with a limited context window, but it comes with increased cost and latency.
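A common way to stay within a limited context window is to keep only the most recent conversation turns that fit a token budget. The sketch assumes a crude whitespace-based token estimate, whereas a real system would count tokens with the model's own tokenizer. `estimate_tokens`, `trim_history` and the budget value are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    # Crude proxy: real tokenizers split words into smaller pieces,
    # so this usually undercounts.
    return len(text.split())

def trim_history(messages: list[str], budget: int) -> list[str]:
    """Keep the newest messages whose combined estimated size fits the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept

history = [
    "Hi, I need help with my car insurance.",
    "Sure, what would you like to know?",
    "Does it cover theft?",
]
print(trim_history(history, budget=12))
```

Dropping old turns is cheap but loses context. Summarizing them instead keeps more information, at the price of an extra LLM call.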

Retrieval

Retrieval is about finding a small number (3-4) of data chunks relevant to the question. Many libraries and databases can be used for this.

It becomes tricky to find relevant chunks when there is a chat history.

The retrieval system should consider not only the follow-up question but also the previous questions. For smaller documentation sets, exact search (slower, more accurate) makes more sense than approximate search (faster, less accurate).
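The sketch below shows exact (brute-force) search: every chunk is scored, and the follow-up question is combined with earlier user questions before searching. To stay self-contained, it uses bag-of-words vectors and cosine similarity in place of a real embedding model. `embed`, `retrieve` and the simple concatenation of the history are illustrative assumptions.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    # Stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question: str, previous_questions: list[str], chunks: list[str], k: int = 3) -> list[str]:
    # Prepend earlier questions so a follow-up like "What about theft?" keeps its topic.
    query = embed(" ".join(previous_questions + [question]))
    # Exact search: score every chunk, then take the top k.
    ranked = sorted(chunks, key=lambda c: cosine(query, embed(c)), reverse=True)
    return ranked[:k]

chunks = [
    "Car insurance covers theft of the vehicle.",
    "Home insurance covers water damage.",
    "Claims are filed in the mobile app.",
]
print(retrieve("What about theft?", ["Does car insurance cover fire?"], chunks, k=1))
```

Scoring every chunk is linear in the number of chunks, which is affordable for small documentation. Approximate indexes become worthwhile as the collection grows.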

Cost

LLMs are a costly business. Even open-source models may require a lot of expensive hardware to run at scale.

Latency

Making multiple calls to an LLM will make end-users wait longer for a response, which may impact customer satisfaction.
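A back-of-the-envelope model helps decide whether an extra LLM call per message is worth it. The per-token prices and per-call timings below are placeholders, not real vendor pricing, so substitute your provider's numbers. When calls are made one after another, both cost and waiting time add up.

```python
from dataclasses import dataclass

@dataclass
class CallProfile:
    input_tokens: int
    output_tokens: int
    seconds: float  # observed wall-clock time for the call

# Placeholder prices in USD per 1,000 tokens (assumptions, not real pricing).
PRICE_IN_PER_1K = 0.001
PRICE_OUT_PER_1K = 0.002

def call_cost(call: CallProfile) -> float:
    return (call.input_tokens * PRICE_IN_PER_1K + call.output_tokens * PRICE_OUT_PER_1K) / 1000

def estimate(calls: list[CallProfile]) -> tuple[float, float]:
    """Total cost and latency for calls made sequentially to answer one message."""
    total_cost = sum(call_cost(c) for c in calls)
    total_latency = sum(c.seconds for c in calls)  # sequential calls: latencies add up
    return total_cost, total_latency

single = [CallProfile(input_tokens=1500, output_tokens=200, seconds=2.0)]
# Hypothetical extra call, e.g. to rephrase a follow-up question before retrieval.
chained = [CallProfile(300, 50, 0.8)] + single
print(estimate(single), estimate(chained))
```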

LoRA

In-context learning is a great technique, but we are also experimenting with “fine-tuning” open-source models with proprietary data.

LoRA (Low-Rank Adaptation) and its many variants make fine-tuning much more accessible in terms of cost and time. So far, we have had great results fine-tuning image generation models (Stable Diffusion): we were able to teach the diffusion model to generate images of brand-specific items.
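LoRA freezes a pretrained weight matrix W and learns a low-rank update instead, so a layer computes h = Wx + (α/r)·BAx. Here B is d×r, A is r×k, and the rank r is much smaller than d and k. The plain-Python sketch below uses tiny made-up matrices to show the forward pass and why the number of trainable parameters drops so sharply. It illustrates the general technique, not the training setup used in this project.

```python
def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha: float, r: int) -> list[float]:
    """h = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)               # frozen pretrained path, d outputs
    update = matvec(B, matvec(A, x))  # low-rank path: k -> r -> d
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]

def trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """(full fine-tuning, LoRA) trainable parameter counts for one d x k matrix."""
    return d * k, r * (d + k)

# Tiny example: d = 2 outputs, k = 3 inputs, rank r = 1.
W = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0]]
A = [[0.5, 0.5, 0.5]]  # r x k
B = [[0.0], [0.0]]     # d x r, initialised to zero so training starts from W
x = [1.0, 2.0, 3.0]
print(lora_forward(W, A, B, x, alpha=1.0, r=1))  # → [7.0, 2.0]
print(trainable_params(4096, 4096, 8))           # → (16777216, 65536)
```

For a 4096×4096 matrix at rank 8, LoRA trains 256 times fewer parameters than full fine-tuning. This is a large part of why it is cheaper and faster.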

Next steps

We’re working on a multimodal, multi-personality chatbot agent that will also be able to handle transactions. More details to come.

This article was written by Danijel Temraz, Principal Engineer at Infobip, and Martina Ćurić, Staff Engineer at Infobip.

The post Hallucinations, prompts, cost, and other challenges we faced when creating an LLM-powered chatbot appeared first on ShiftMag.