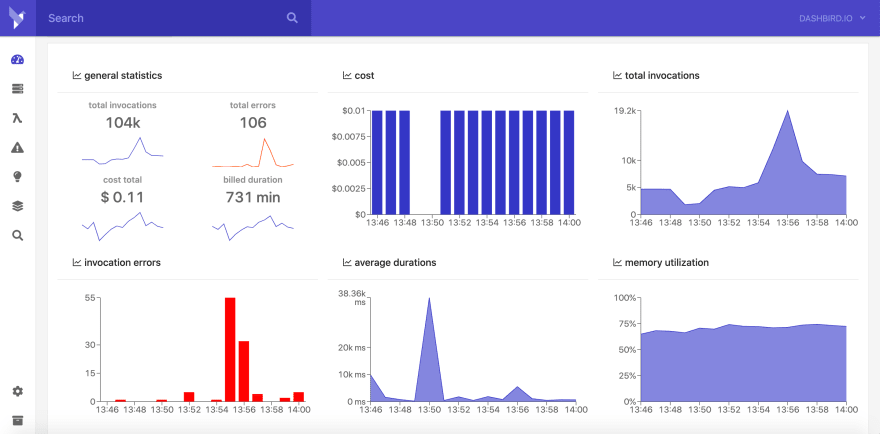

We've talked about how serverless architecture is a great option for companies looking to optimize costs. As with any application development, monitoring the performance of your implementation is crucial. We, the folks at Dashbird, understand this need all too well, which is why we've spent the better part of the past year and a half building a monitoring and observability solution for AWS Lambda and other serverless services.

One of the most important features for serverless users is the cost monitoring that Dashbird offers. I'll walk you through how it works and how you can use it to your advantage.

First, you'll need a Dashbird account. If you don't have one yet, fear not: you can sign up for free to get started.

Before we jump straight in, let's first take a second to discuss how AWS Lambda pricing works. A quick recap:

- Every month you get one million invocations and 400,000 GB-seconds of compute time for free (that works out to 3.2M seconds at the 128 MB setting).

- You only pay for what you use on top of that.

- Every individual invocation costs $0.0000002, but duration is an important factor too: beyond the free tier you also pay about $0.0000166667 for every GB-second of compute.
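To make the recap concrete, here is a minimal sketch of how a monthly bill could be estimated from those numbers. The function name and the sample workload are made up for illustration, and the prices are the ones quoted above, so check the current rates for your region before relying on the result.

```python
# Prices and free-tier limits as quoted in the recap above (assumptions:
# they vary by region and architecture, so verify them before use).
PRICE_PER_REQUEST = 0.0000002        # USD per invocation
PRICE_PER_GB_SECOND = 0.0000166667   # USD per GB-second of compute
FREE_REQUESTS = 1_000_000            # free invocations per month
FREE_GB_SECONDS = 400_000            # free GB-seconds per month


def monthly_lambda_cost(invocations, avg_duration_ms, memory_mb):
    """Estimate a monthly Lambda bill in USD after the free tier."""
    # Compute is billed as duration (seconds) times memory (GB).
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    billable_requests = max(0, invocations - FREE_REQUESTS)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    return (billable_requests * PRICE_PER_REQUEST
            + billable_gb_seconds * PRICE_PER_GB_SECOND)


# Hypothetical workload: 30M invocations a month, 200 ms each, at 512 MB.
print(f"${monthly_lambda_cost(30_000_000, 200, 512):.2f}")
```

In this made-up scenario the request charge is small and most of the bill comes from compute, which is why the rest of this article focuses on duration and memory.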

I know those numbers have enough zeros to make the AWS Lambda promise of cutting down your server bills seem amazing, but the truth is that they add up really quickly. Because of the nature of serverless architecture, you split your functionality into small functions that each do one thing and return the result, so the number of executions will be high. Development and production environments are also two entirely different beasts when it comes to cost.

How to keep Lambda costs down?

There are a few ways to get those costs down, from reducing cold starts and optimizing memory utilization to picking a runtime that better fits your needs.

Reducing the impact of cold starts

We've already established how AWS Lambda works and how it is priced, so it should come as no surprise that cold starts are expensive: you pay for the time it takes to spin up those containers.

There are a couple of ways to address them, but let's first look at how to see which functions have cold start issues and how much of an impact they really have.

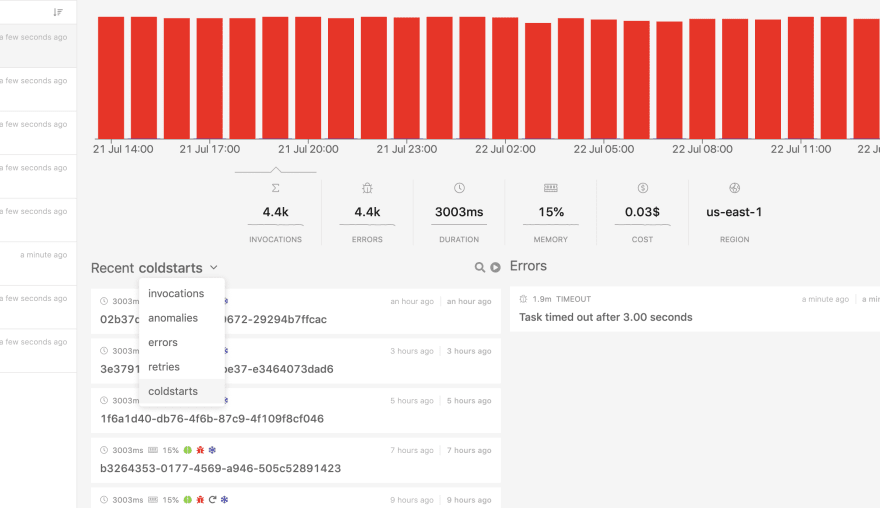

In Dashbird, jump into the Lambda view and look at the right side of the screen. From there you can filter to view only the cold starts.

The little anomaly icon you see next to a function marks a cold start.

Now that we have a simple way to identify our cost-increasing cold starts, we need a way to address them.
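If you want to sanity-check the same signal outside the dashboard, the raw Lambda logs carry it too: the `REPORT` line of a cold invocation includes an `Init Duration` field that warm invocations lack. The sketch below is only an illustration of that idea, not how Dashbird detects cold starts; the helper name and the log lines are hypothetical.

```python
import re

# On a cold start, Lambda appends "Init Duration" to the REPORT log line.
INIT_DURATION = re.compile(r"Init Duration: ([\d.]+) ms")


def cold_start_ms(report_line):
    """Return the init duration in ms for a cold start, or None if warm."""
    match = INIT_DURATION.search(report_line)
    return float(match.group(1)) if match else None


# Hypothetical log lines, shortened for readability.
logs = [
    "REPORT RequestId: a1 Duration: 102.3 ms Billed Duration: 103 ms "
    "Memory Size: 128 MB Max Memory Used: 61 MB Init Duration: 412.7 ms",
    "REPORT RequestId: b2 Duration: 8.9 ms Billed Duration: 9 ms "
    "Memory Size: 128 MB Max Memory Used: 61 MB",
]
cold = [ms for ms in map(cold_start_ms, logs) if ms is not None]
print(f"{len(cold)} of {len(logs)} invocations were cold starts")
```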

Use a runtime that boots up fast

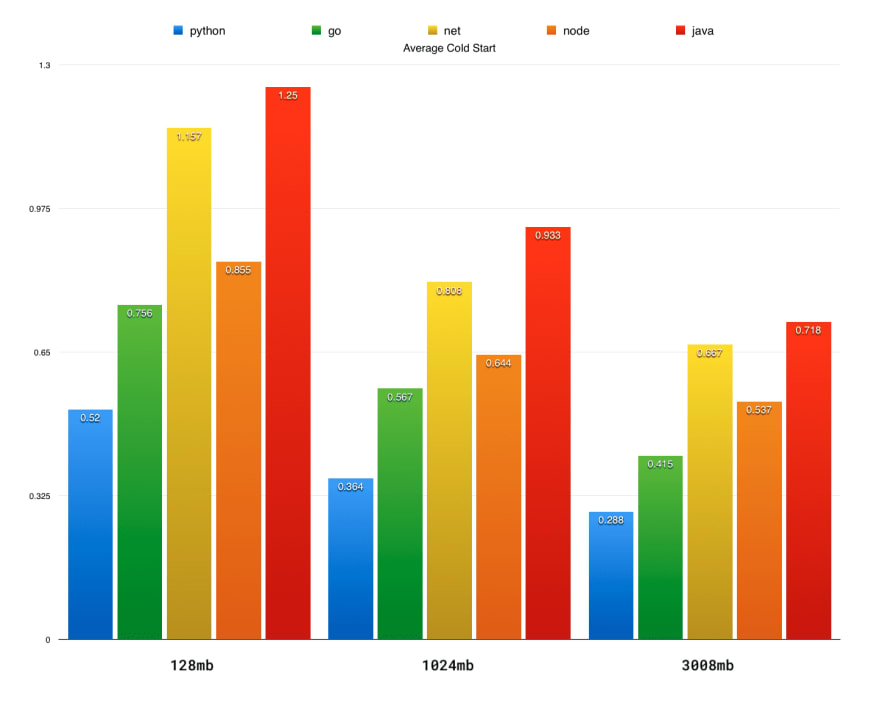

One of the most popular ways of dealing with cold starts is to use a runtime that boots up quickly, and right now the fastest option is Python. A simple benchmark that Nathan Malishev ran a while back makes it clear that Python is the winner.

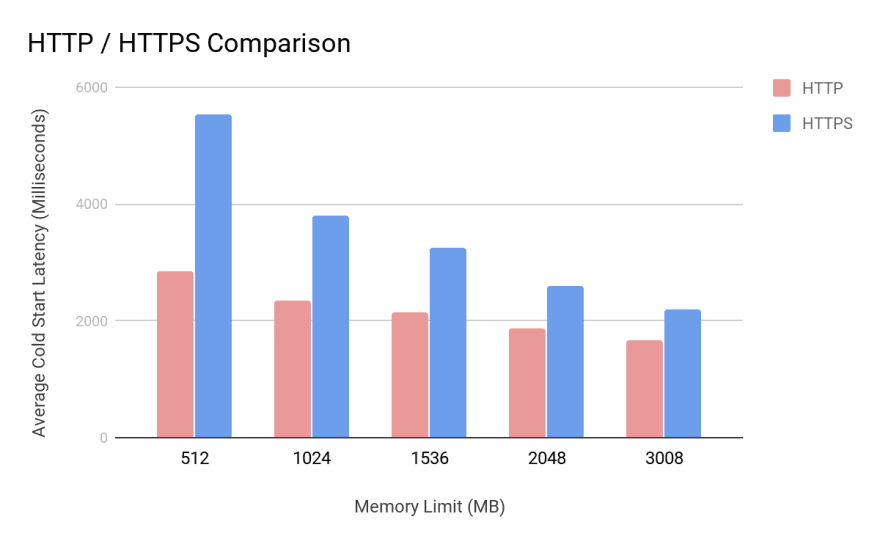

Use HTTP calls instead of HTTPS

Serkan Özal looked into this claim using a simple function that accessed DynamoDB. He concluded that HTTP is a lot faster than HTTPS, especially for Lambdas with lower memory settings, since the exchange is much simpler without the TLS handshake and encryption.

Bump up the memory limit

This might seem counterproductive given the goal of saving money on your Lambdas, but since you're charged for execution time as well as memory, it can make sense to increase a function's memory so that it executes a lot faster (Lambda allocates CPU in proportion to memory). Lambda containers also spin up considerably faster when the function has more memory, so while this is probably not a universal solution to the cold start issue, there are cases where it makes a lot of sense.
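A quick way to reason about this trade-off is to ask how much faster the function has to become before the bigger setting pays for itself. The sketch below assumes the compute price quoted earlier and uses invented durations, so treat it as back-of-the-envelope arithmetic rather than a benchmark.

```python
PRICE_PER_GB_SECOND = 0.0000166667  # USD, as quoted earlier (assumption)


def compute_cost(duration_ms, memory_mb):
    """Compute charge in USD for a single invocation."""
    return (duration_ms / 1000) * (memory_mb / 1024) * PRICE_PER_GB_SECOND


def break_even_duration_ms(old_duration_ms, old_memory_mb, new_memory_mb):
    """Duration the new memory setting must beat to cost less than the old one."""
    return old_duration_ms * old_memory_mb / new_memory_mb


# Hypothetical function: 1000 ms at 128 MB, 200 ms after bumping to 512 MB.
print(break_even_duration_ms(1000, 128, 512))          # must run under this
print(compute_cost(200, 512) < compute_cost(1000, 128))  # is the bump cheaper?
```

If the measured duration at the higher setting stays above the break-even value, the bump costs more than it saves, even if the function feels snappier.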

Downgrade the memory of your functions

Sometimes we overestimate the memory needs of a function, and instead of upgrading in small increments we throw caution to the wind and go big. Since AWS Lambda has a linear pricing model, for the same execution time 1024 MB is 8 times more expensive than 128 MB, and 3008 MB is roughly 24 times more expensive (23.5 times, to be exact). So you can imagine a scenario where you cut your Lambda cost by 10x or more just by making small memory adjustments.
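Because the pricing is linear, the relative cost of a memory setting is just its ratio to the baseline. A tiny sketch, assuming the execution time stays the same across settings (the function name is illustrative):

```python
BASELINE_MB = 128


def relative_cost(memory_mb, baseline_mb=BASELINE_MB):
    """Cost multiplier versus the baseline, for equal execution time."""
    return memory_mb / baseline_mb


for mb in (128, 512, 1024, 3008):
    print(f"{mb:>5} MB -> {relative_cost(mb):.1f}x")
```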

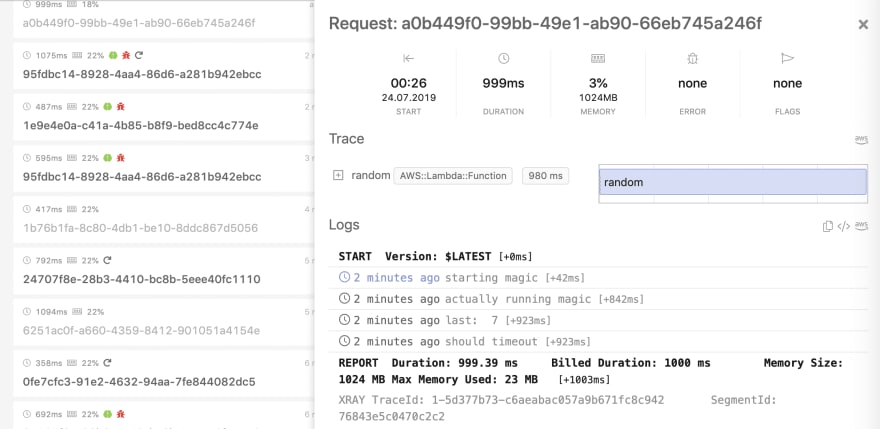

Let's jump back to our Dashbird Lambda panel to look at the execution speeds of our functions. After a few seconds, I see a function that looks suspicious.

As you can see in the screenshot, our function uses only 3% of the 1024 MB of RAM allocated. Before jumping into the AWS console to take drastic measures, let's look at multiple invocations of the same function over several days to make sure that we're not looking at a one-off or some sort of exception to the rule.

I quickly filter down to this function and compare the other invocations I see there, and I can easily tell that it never uses more than 6% of its allocated memory. I'm now comfortable reducing the memory of that function to 128 MB, saving me about $25 a month on this function alone. Now imagine doing this for 10, 25, or 100 functions. It adds up.
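The same reasoning can be written down as a small right-sizing helper. This is a sketch under stated assumptions: the candidate sizes and the 1.5x headroom are arbitrary choices for illustration, and the savings figure assumes the execution time does not change, which may not hold because a smaller memory setting also means less CPU.

```python
# Illustrative candidate sizes; Lambda accepts other values as well.
MEMORY_STEPS_MB = (128, 256, 512, 1024, 2048, 3008)


def suggest_memory_mb(peak_used_mb, headroom=1.5):
    """Smallest candidate size that covers peak usage plus some headroom."""
    needed = peak_used_mb * headroom
    for size in MEMORY_STEPS_MB:
        if size >= needed:
            return size
    return MEMORY_STEPS_MB[-1]


def compute_savings_fraction(current_mb, suggested_mb):
    """Share of the compute charge saved, assuming unchanged duration."""
    return 1 - suggested_mb / current_mb


peak_mb = 0.06 * 1024  # the function above peaks at about 6% of 1024 MB
suggested = suggest_memory_mb(peak_mb)
print(suggested, f"{compute_savings_fraction(1024, suggested):.1%}")
```

After applying a change like this, it is worth watching the function's duration for a few days to confirm the saving actually materializes.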

Decrease Lambda execution time

We've talked about how Python is great at reducing boot-up times for your containers, but as it turns out, Python might not be the best choice for overall execution speed in warm containers.

Yun Zhi Lin wrote an excellent benchmark on this very subject, going through all the runtimes available for AWS Lambda and comparing the execution duration of each one. As it turns out, .NET Core 2.0 wins the race by a good margin, with Node.js and Python coming in second and third.

We hope this has helped shed some light on the different ways to cut AWS Lambda costs. While these might be some of the most popular ways for developers to scale down those AWS bills, they are not the only ones. You might also want to check out our article on how to leverage Lambda cache to cut costs.