In the last several years, Google’s Kubernetes project has generated huge buzz. The project has grown and evolved into a titan of the cloud infrastructure world.

While it's a great project and serves many purposes, it remains a complex beast. Even with the managed Kubernetes services from major cloud providers, teams have to maintain complex, interwoven architectures built on an ever-expanding cosmos of plugins and paradigm shifts. Because that complexity is inherent in its flexibility, Kubernetes demands its own set of skills to implement, maintain, upgrade, and operate this diverse orchestration ecosystem.

The Simplicity of PaaS

These intensive, skill-based requirements may be appropriate for some business models. However, if you want to spend your time building applications rather than managing servers and security, then you may want to consider a Platform as a Service (PaaS) rather than Kubernetes. These providers maintain the infrastructure, security, observability, and overall well-being of the environment so you can focus on the business-critical applications.

One of the draws of Kubernetes is its capability to provide a uniform environment experience. An application built to fit within Kubernetes should be portable by design, ephemeral in nature, and able to live in any container-driven world, agnostic to the infrastructure (take a look at the Twelve-Factor App methodology for more guidelines on building apps). So if an application team wanted to leave behind the complexity of a Kubernetes-managed infrastructure in favor of a curated PaaS, what would that look like?

Today we’ll take a look at this simple Kubernetes-driven Flask application. We’ll see how it runs, learn its requirements, and explore how we can convert it to Heroku.

The Application Design

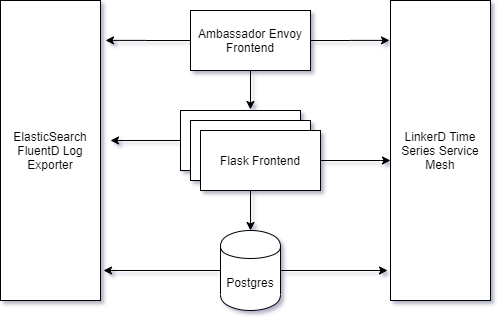

This application is a simple Flask frontend that pulls a list of actors from a Postgres database and then displays them on a website. As you can see, the infrastructure needed to monitor, log, serve, and generally provide uptime makes up even more components than the app itself.

To deploy the application in Kubernetes, we need the prerequisite infrastructure components, several YAML files for the application itself, and a Helm chart for the database:

- GitLab CI/CD

- Ambassador Edge Stack

- LinkerD Service Mesh

- FluentD Log Collector

- Elasticsearch Document Database

- Certificate Generation

- Ingress Definition

- Exposed Ports & Application Deployment

- PostgreSQL Helm Customizations

- And finally the application itself

There are a lot of necessary steps just to get the application serviceable. Let’s look at the comparable requisites in Heroku.

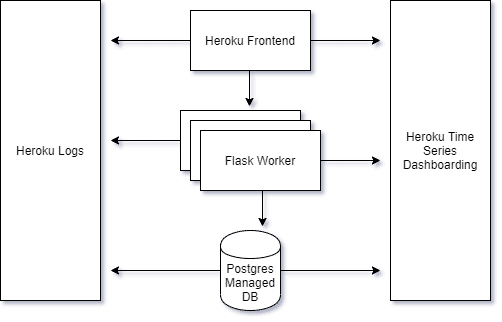

Because the PaaS vendor has made the technology decisions for you, you do have to abide by that vendor's decisions and opinions. However, in return you get tightly-integrated service offerings that work out of the box. What previously took weeks of research and implementation of service meshes and logging exporters has been reduced to just minutes and a few commands.

To deploy the same application in Heroku, we just need two steps: spin up the database and the application.

Now let's look at how we would convert this application from Kubernetes to Heroku. We'll look at four steps:

- Set up the infrastructure

- Convert the application

- Deploy the app

- Update the app

1. Setting Up the Infrastructure

We saw the many components that Kubernetes needs to ensure operability in production. Each of these comes with its own install guide and recommendations, but the pattern is usually very similar:

- Download the control binaries.

- Validate kubectl can reach the appropriate clusters.

- Run kubectl apply -f https://dependency/spec.yaml, or use the binary to create resources.

- Verify deployment completed in appropriate security contexts and is functioning as desired.

Heroku’s requirements are much more straightforward: make an account, download the Heroku CLI, and you're done! Remember that a big draw of PaaS is that the validation and installation (all those steps you had to do yourself with Kubernetes) are already done for you.

These steps provide the core infrastructure services for your app in either environment, such as TLS and traffic management, scaling, observability, and repeatability.

2. Converting the Application

Now that we have established how each environment looks for our sample app, we can look at how to tweak the application to work in its new PaaS home. Because many of the components have been implemented on our behalf, some transformation is necessary before we can deploy the app.

These steps will ensure that we can deploy the application as a container, view application logs in stdout, remotely log into the container during runtime, and customize parameters for the application from the infrastructure.
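To make the "logs in stdout" goal concrete, here is a minimal sketch of how the Flask process could route its log records to stdout so that either platform can collect them. The helper name configure_stdout_logging and the log format are our own illustrative choices, not part of the sample app.

```python
import logging
import sys


def configure_stdout_logging(level=logging.INFO, stream=None):
    """Send all log records to stdout (or to the given stream)."""
    target = sys.stdout if stream is None else stream
    handler = logging.StreamHandler(target)
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
    )
    root = logging.getLogger()
    # Replace any existing handlers so records are written exactly once
    # and never to a file inside the (ephemeral) container.
    root.handlers = [handler]
    root.setLevel(level)
    return root


if __name__ == "__main__":
    configure_stdout_logging()
    logging.getLogger("myapp").info("application starting")
```

Calling this once at startup, before the app begins serving, keeps the application itself unaware of whether FluentD or the PaaS log stream picks the lines up.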

First, we have to change the Dockerfile. For our Kubernetes Dockerfile that defines our application at init time, we were largely free to design it as we wish:

FROM python:3

WORKDIR /usr/src/app

COPY requirements.txt ./

RUN pip install --no-cache-dir -r requirements.txt

RUN touch /tmp/healthy && echo "healthy" >> /tmp/healthy && cat /tmp/healthy

ENV FLASK_ENV=development

ENV FLASK_APP=myapp.py

COPY myapp.py .

CMD [ "flask", "run", "--host=0.0.0.0" ]

The image is simple: use a maintained base image, set a work directory, add the python requirements, set some default environment variables, add the application code, and start the server.

In a later step we’ll use this Dockerfile to build our application, push it to a Docker registry, and have Kubernetes pull the image to its local servers to start the app.

In Heroku, because we want to keep using a Python-specific base image, we have to add a few packages that Heroku’s container management interfaces rely on. Our new Dockerfile looks like this:

FROM python:3

WORKDIR /usr/src/app

COPY requirements.txt ./

RUN pip install --no-cache-dir -r requirements.txt

RUN apt-get update && apt-get install -y curl openssh-server

ADD ./.profile.d /app/.profile.d

RUN rm /bin/sh && ln -s /bin/bash /bin/sh

RUN echo "healthy" >> /tmp/healthy && cat /tmp/healthy

ENV FLASK_ENV=development

ENV FLASK_APP=myapp.py

COPY myapp.py .

CMD python3 myapp.py

Here we’re defining the same image as before, but with a few tweaks.

- First, we have a new series of apt-get installations that add curl and an SSH server to our image. We also add a script (heroku-exec.sh, placed in the .profile.d directory) to define the SSH environment:

[ -z "$SSH_CLIENT" ] && source <(curl --fail --retry 3 -sSL "$HEROKU_EXEC_URL")

- Then, we explicitly make bash the default shell by replacing /bin/sh with a symlink to /bin/bash.


Next, we change how the app starts. Instead of passing options to flask run, we set the Flask runtime parameters in the myapp.py file so that the app can bind to the port Heroku assigns through the PORT environment variable and take traffic once deployed.

if __name__ == "__main__":

    port = int(os.getenv("PORT", 5000))

    app.run(debug=True, host='0.0.0.0', port=port)
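If we want the port lookup to fail loudly on a bad value, it can be pulled into a small helper. This is only a sketch: resolve_port is a hypothetical name of ours, and the default of 5000 simply mirrors the snippet above.

```python
import os


def resolve_port(environ=None, default=5000):
    """Return the port to bind to, preferring the platform-provided PORT."""
    environ = os.environ if environ is None else environ
    raw = environ.get("PORT", "")
    if not raw:
        # No PORT set (for example, when running locally): use the default.
        return default
    port = int(raw)  # raises ValueError if PORT is not numeric
    if not 0 < port < 65536:
        raise ValueError(f"PORT out of range: {port}")
    return port


if __name__ == "__main__":
    print(f"would bind to 0.0.0.0:{resolve_port()}")
```

In myapp.py, the call would then read app.run(host='0.0.0.0', port=resolve_port()), with app being the Flask object from the sample application.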

One final consideration is the database. With Kubernetes we can use its fungible capacity to host clustered or replicated databases that protect against corruption or node failure. To do so, we must use a Helm chart, design the database architecture from scratch, or use an external hosting solution for our database.

However, this isn't necessary when using hosted services. With Heroku, we simply need to add the hosted database via our dashboard. The replication and resiliency become the provider’s concern to manage.
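On the application side, a hosted database usually reaches the app as a single connection string in the environment. Heroku Postgres typically provides this as a DATABASE_URL config var; the article's sample app does not show how it connects, so treat the variable name, the parse_database_url helper, and the example URL below as assumptions for illustration.

```python
import os
from urllib.parse import unquote, urlparse


def parse_database_url(url):
    """Split a postgres:// URL into separate connection settings."""
    parts = urlparse(url)
    if parts.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"unsupported database scheme: {parts.scheme!r}")
    return {
        "host": parts.hostname,
        "port": parts.port or 5432,  # fall back to the Postgres default
        "user": unquote(parts.username or ""),
        "password": unquote(parts.password or ""),
        "dbname": parts.path.lstrip("/"),
    }


if __name__ == "__main__":
    # Hypothetical local fallback; on the platform the variable is injected.
    example = "postgres://actor_app:secret@localhost:5432/actors"
    settings = parse_database_url(os.environ.get("DATABASE_URL", example))
    # Never print the password; show only the non-secret parts.
    print({k: v for k, v in settings.items() if k != "password"})
```

Reading the settings from the environment keeps the same image usable in both worlds: in Kubernetes the value can come from a Secret, and in Heroku from the add-on's config var.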

(Remember that in both database implementations, replication and resiliency are not replacements for tested backups! Always back up and validate your core data.)

With this, we have our application container images and databases ready to be deployed.

3. Running the Application

Assuming we have the core infrastructure installed, the databases provisioned for our cluster, and appropriate role-based access control (RBAC) configurations associated with our service accounts, we can now focus on the application deployment.

We do not have an explicit CI/CD pipeline defined in our example. So to manually deploy our application to Kubernetes, we must run the following using the docker and kubectl binaries:

docker login

docker build -t myorg/myapp:0.0.1 -t myorg/myapp:latest .

docker push myorg/myapp:0.0.1; docker push myorg/myapp:latest

kubectl apply -f ./spec/

As we can see, once we deploy the core infrastructure and validate the YAMLs, it’s not that hard to manually roll out code to our Kubernetes clusters.

Turning to Heroku’s deployment scheme, we use its own custom binary, the Heroku CLI, to interact with its APIs:

heroku login

heroku container:login

heroku container:push web -a myapp

heroku container:release web -a myapp

Here we trust Heroku’s tagging and deployment of our applications rather than employing our own tagging and release functionality, though it involves largely the same amount of manual release rigor.

It's also fair to call out that deployment and release pipelines built into either platform may entirely obfuscate all this effort; however, that, too, must be designed, built, and maintained in either environment.
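As a first step toward such a pipeline, the manual commands from both walkthroughs can be captured in a small script. The sketch below only assembles the non-interactive commands shown earlier and defaults to a dry run; the function names are our own, and the login steps are left out because they are interactive.

```python
import shlex
import subprocess


def kubernetes_deploy_commands(image, version, spec_dir="./spec/"):
    """Build, push, and apply: the manual Kubernetes release steps."""
    tagged = f"{image}:{version}"
    latest = f"{image}:latest"
    return [
        ["docker", "build", "-t", tagged, "-t", latest, "."],
        ["docker", "push", tagged],
        ["docker", "push", latest],
        ["kubectl", "apply", "-f", spec_dir],
    ]


def heroku_deploy_commands(app, process_type="web"):
    """Push and release: the manual Heroku container release steps."""
    return [
        ["heroku", "container:push", process_type, "-a", app],
        ["heroku", "container:release", process_type, "-a", app],
    ]


def run_all(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print("+", shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)  # stop at the first failure


if __name__ == "__main__":
    run_all(kubernetes_deploy_commands("myorg/myapp", "0.0.1"))
    run_all(heroku_deploy_commands("myapp"))
```

Keeping the commands as plain lists makes the two release paths easy to compare side by side, and the same lists can later be handed to a real CI/CD job.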

At this point, we have defined processes for deploying the applications in both environments.

4. Updating the Application

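When updating an application that is already deployed to Kubernetes, we build and push a new image tag, then point the existing deployment at it with kubectl set image rather than apply (this assumes the container in the deployment spec is named myapp):

docker build -t myorg/myapp:0.0.2 -t myorg/myapp:latest .

docker push myorg/myapp:0.0.2; docker push myorg/myapp:latest

kubectl set image deployment/myapp myapp=myorg/myapp:0.0.2 -n myapp --record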

With Heroku we simply follow the same process again:

heroku container:push web -a myapp

heroku container:release web -a myapp

With this last step, we have a process to iteratively deploy our application to our environments.
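Because the Kubernetes path leaves tagging to us, each iteration needs a new version tag. A tiny helper can take that bookkeeping off our hands; this is a sketch that assumes plain major.minor.patch version strings like the ones used above, and both function names are hypothetical.

```python
def next_patch_version(version):
    """Increment the patch component of a major.minor.patch version."""
    major, minor, patch = (int(part) for part in version.split("."))
    return f"{major}.{minor}.{patch + 1}"


def image_tags(image, version):
    """Return the versioned tag and the moving 'latest' tag for an image."""
    return [f"{image}:{version}", f"{image}:latest"]


if __name__ == "__main__":
    new_version = next_patch_version("0.0.1")
    print(image_tags("myorg/myapp", new_version))
```

With Heroku this step disappears, since the platform tracks releases itself.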

Conclusion

Shakespeare wrote, “A rose by any other name would smell as sweet.” Similarly, our application works the same as it would in any containerized environment, Capulet or Montague, since it’s not the application itself that has to conform but rather its surroundings.

Outside of our cheesy analogy, we explored what a simple application requires to run in the complex and highly customizable Kubernetes environments, as well as what is required to get the same level of functionality from Heroku, our managed infrastructure provider. In the end, both were simple to deploy to and run with, but Heroku proved to be much less intensive in its prerequisites to get started.