❔ About

While I was working on endoflife.date integrations, the need for an offline copy of the data came up:

Offline copy of data

#2530

I really like the idea, but to avoid repeated calls to the API for every product I would like data on, I would like to maintain a local copy of the data and then only download updates each time I start my application (or after a particular time period, e.g. only request updates once every 24 hours).

Ideally, I would be able to get the data in JSON format which I can then manage locally.

The alternative would be to call the API once per product to get that product's data. But this would also require knowing all of the products in the first place, which, given the dynamic nature of the data, isn't very attractive.

After several attempts, I finally found a Kaggle-based solution.

I wanted the data to:

- 👐 Be easy to share

- ✅ Rely on the official API

- 🔁 Be up to date (without any effort)

- 🔗 Be easy to integrate with third-party products

- 🧑🔬 Be deployed on a data-centric/data science platform

- 🤓 Show source code (Open Source)

- 🚀 Be easily extensible

Therefore I created a Notebook that does the following things once a week:

- Queries the API

- Loads and stores the data in a DuckDB database

- Exports the resulting database as SQL and CSV

- Exports the database as Apache Parquet files
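The first step, querying the API, could be sketched roughly as follows. This is not the notebook's actual code: it assumes the public `https://endoflife.date/api/all.json` and `https://endoflife.date/api/{product}.json` endpoints, and `fetch_json` and `collect_cycles` are hypothetical helper names introduced only for illustration.

```python
import json
from typing import Callable
from urllib.request import urlopen

# Assumption: the public JSON endpoints of endoflife.date.
API_ROOT = "https://endoflife.date/api"


def fetch_json(url: str):
    """Download one URL and decode its JSON body."""
    with urlopen(url, timeout=30) as response:
        return json.load(response)


def collect_cycles(fetch: Callable[[str], object] = fetch_json) -> list:
    """Return one flat row per (product, release cycle).

    The product list is discovered from the API itself, so nothing
    has to be known about the products in advance.
    """
    rows = []
    for product in fetch(f"{API_ROOT}/all.json"):
        for cycle in fetch(f"{API_ROOT}/{product}.json"):
            # Prefix every cycle record with the product it belongs to.
            rows.append({"product": product, **cycle})
    return rows
```

Calling `collect_cycles()` with no argument hits the live API once per product; the rows can then be written out with `json.dump` for the loading step. Passing a custom `fetch` callable makes the function usable offline, for example in tests.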

🧰 Tools

All you need is Python and DuckDB's JSON functions.

🎯 Result

As you can see, for now, the only input is the API:

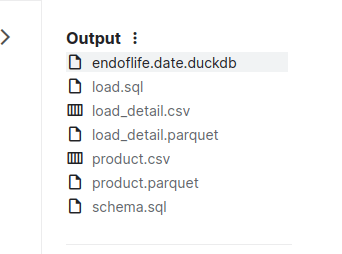

... while we get fresh output files.

🗣️ Conclusion

Finally, I delivered the following solution to the community:

🎁 Weekly Scheduled offline exports on Kaggle ♾️

#2633

❔ About

Having an easy-to-use offline copy of endoflife.date would be very convenient for data analysis.

The notebook uses the endoflife.date API to produce an automated offline copy of the data.

🎁 The Notebook

Below are the very portable outputs:

💰 Benefits

Weekly:

- CSV exports

- DuckDB exports

- Apache Parquet exports