1. Introduction

Integrating design and development efficiently is key to productivity and to the speed of innovation, especially in front-end and client-side application development. Advances in artificial intelligence and machine learning have produced "image to design draft and code" technology, which automates much of the handoff between the two. This article covers the principles behind converting images to design drafts and code, the implementation steps, and how Codia AI (website: https://codia.ai/) automates the conversion from any image to a Figma design draft and then to code.

2. Image to Design Draft

2.1. AI Recognition

AI recognition is the core step in converting image content into Figma design drafts. It uses artificial intelligence algorithms, such as machine learning and deep learning, to recognize and understand image content, and it involves several sub-tasks: image segmentation, object recognition, and text recognition (OCR). The processes and technical implementations of these sub-tasks are detailed below.

2.1.1. AI Recognition Flowchart

Below is the AI recognition flowchart for converting images into Figma design drafts:

Image Input
│
├───> Image Segmentation ────> Recognize Element Boundaries
│
├───> Object Recognition ────> Match Design Elements
│
└───> Text Recognition ────> Extract and Process Text
          │
          └───> Figma Design Draft Generation

Through this process, AI converts the design elements and text in an image into corresponding elements in a Figma design draft, which reduces the manual work of rebuilding a design from a picture.

2.2. Image Segmentation

Image segmentation identifies and separates each independent element in the picture. This is typically done with deep learning models such as Convolutional Neural Networks (CNNs). A popular architecture for the task is U-Net, an encoder-decoder network with skip connections that outputs a per-pixel mask.

2.2.1. Technical Implementation:

- Data Preparation: Collect a large number of annotated design element images, with annotations including the boundaries of each element.

- Model Training: Train a model using the U-Net architecture to recognize different design elements.

- Segmentation Application: Apply the trained model to new images, outputting the precise location and boundaries of each element.
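The last step above, turning a predicted mask into element locations, can be sketched in plain Python. This is a minimal illustration, not the article's implementation: it assumes the model output has already been thresholded into a binary mask (a list of rows of 0s and 1s), and the function name `mask_to_boxes` and the sample mask are hypothetical.

```python
from collections import deque

def mask_to_boxes(mask):
    """Return one (x, y, width, height) box per 4-connected region of 1s in a binary mask."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for row in range(height):
        for col in range(width):
            if mask[row][col] != 1 or seen[row][col]:
                continue
            # Breadth-first flood fill over the region that contains (row, col)
            queue = deque([(row, col)])
            seen[row][col] = True
            min_r = max_r = row
            min_c = max_c = col
            while queue:
                r, c = queue.popleft()
                min_r, max_r = min(min_r, r), max(max_r, r)
                min_c, max_c = min(min_c, c), max(max_c, c)
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    inside = 0 <= nr < height and 0 <= nc < width
                    if inside and mask[nr][nc] == 1 and not seen[nr][nc]:
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            boxes.append((min_c, min_r, max_c - min_c + 1, max_r - min_r + 1))
    return boxes

# Hypothetical 4x6 mask containing two separate elements
example_mask = [
    [1, 1, 0, 0, 0, 0],
    [1, 1, 0, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 0, 1, 1],
]
boxes = mask_to_boxes(example_mask)
print(boxes)  # [(0, 0, 2, 2), (4, 1, 2, 3)]
```

Each box gives the position and size of one element in pixel coordinates, which is the information later needed to place the element in Figma.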

2.2.2. Code Example (Using Python and TensorFlow):

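import tensorflow as tf
from tensorflow.keras.layers import Input, Conv2D, MaxPooling2D, UpSampling2D, concatenate
from tensorflow.keras.models import Model

def unet_model(input_size=(256, 256, 3)):
    inputs = Input(input_size)
    # Reduced two-level U-Net for illustration only; a full U-Net stacks more levels
    c1 = Conv2D(32, 3, activation='relu', padding='same')(inputs)
    p1 = MaxPooling2D(2)(c1)
    c2 = Conv2D(64, 3, activation='relu', padding='same')(p1)
    p2 = MaxPooling2D(2)(c2)
    # Bottleneck
    b = Conv2D(128, 3, activation='relu', padding='same')(p2)
    # Decoder: upsample and concatenate with the matching encoder output (skip connections)
    u1 = concatenate([UpSampling2D(2)(b), c2])
    c3 = Conv2D(64, 3, activation='relu', padding='same')(u1)
    u2 = concatenate([UpSampling2D(2)(c3), c1])
    c4 = Conv2D(32, 3, activation='relu', padding='same')(u2)
    # One-channel sigmoid output: per-pixel probability of belonging to an element
    outputs = Conv2D(1, (1, 1), activation='sigmoid')(c4)
    model = Model(inputs=inputs, outputs=outputs)
    model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
    return model

# Code to load the dataset, train the model, and apply the model will be implemented here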

2.3. Object Recognition

Object recognition involves identifying specific objects in the picture, such as buttons, icons, etc., and matching them with a predefined library of design elements.

2.3.1. Technical Implementation:

- Data Preparation: Create a dataset containing various design elements and their category labels.

- Model Training: Use pre-trained CNN models like ResNet or Inception for transfer learning to recognize different design elements.

- Object Matching: Match the identified objects with elements in the design element library for reconstruction in Figma.
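The matching step above can be illustrated with a small lookup. This is a sketch under stated assumptions: the library contents, the component names, the `match_element` function, and the 0.6 confidence threshold are all hypothetical, and the probabilities stand in for the softmax output of a trained classifier.

```python
# Hypothetical design element library: class label -> component name on the Figma side
ELEMENT_LIBRARY = {
    "button": "Button/Primary",
    "icon": "Icon/Generic",
    "input": "Input/Text",
}

def match_element(class_names, probabilities, min_confidence=0.6):
    """Pick the most probable class and look it up in the library.

    Returns (label, component, confidence). The component is None when the
    prediction is too uncertain or the label has no library entry.
    """
    best_index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    label = class_names[best_index]
    confidence = probabilities[best_index]
    if confidence < min_confidence:
        return label, None, confidence
    return label, ELEMENT_LIBRARY.get(label), confidence

class_names = ["button", "icon", "input"]
matched = match_element(class_names, [0.82, 0.10, 0.08])
uncertain = match_element(class_names, [0.40, 0.35, 0.25])
print(matched)    # ('button', 'Button/Primary', 0.82)
print(uncertain)  # ('button', None, 0.4)
```

Returning None for low-confidence predictions lets a later stage fall back to a generic shape instead of inserting the wrong component.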

2.3.2. Code Example (Using Python and TensorFlow):

from tensorflow.keras.applications.resnet50 import ResNet50, preprocess_input
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.models import Model

# Number of design element categories (placeholder value; set it to match your dataset)
num_classes = 10

# Load the pre-trained ResNet50 model
base_model = ResNet50(weights='imagenet', include_top=False)

# Add custom layers
x = base_model.output
x = GlobalAveragePooling2D()(x)
x = Dense(1024, activation='relu')(x)
predictions = Dense(num_classes, activation='softmax')(x)

# Construct the final model
model = Model(inputs=base_model.input, outputs=predictions)

# Freeze the layers of the ResNet50
for layer in base_model.layers:
    layer.trainable = False

# Compile the model
model.compile(optimizer='rmsprop', loss='categorical_crossentropy')

# Code to train the model will be implemented here

2.4. Text Recognition (OCR)

Text recognition (OCR) technology is used to extract text from images and convert it into an editable text format.

2.4.1. Technical Implementation:

- Use OCR tools (such as Tesseract) to recognize text in images.

- Perform post-processing on the recognized text, including language correction and format adjustment.

- Import the processed text into Figma design drafts.

2.4.2. Code Example (Using Python and Tesseract):

import pytesseract

from PIL import Image

# Configure the Tesseract path

pytesseract.pytesseract.tesseract_cmd = r'path'

# Load the image

image = Image.open('example.png')

# Apply OCR

text = pytesseract.image_to_string(image, lang='eng')

# Output the recognized text

print(text)

# Code to import the recognized text into Figma will be implemented here
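The post-processing step listed in 2.4.1 might look like the following sketch. It covers only format adjustment (re-joining hyphenated line breaks and normalizing whitespace); language correction would need a dictionary or language model and is not shown. The function name `clean_ocr_text` and the sample string are hypothetical.

```python
import re

def clean_ocr_text(raw_text):
    """Normalize raw OCR output into tidy lines of text."""
    # Re-join words that were hyphenated across a line break
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", raw_text)
    cleaned_lines = []
    for line in text.splitlines():
        # Collapse runs of spaces and tabs, then trim the line
        line = re.sub(r"[ \t]+", " ", line).strip()
        if line:
            cleaned_lines.append(line)
    return "\n".join(cleaned_lines)

raw = "  Sign   in to con-\ntinue \n\n\n Forgot  password? "
cleaned = clean_ocr_text(raw)
print(cleaned)
```

Note that the hyphen rule also merges genuinely hyphenated words that happen to break across lines, so a real pipeline may want a dictionary check before joining.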

2.5. Figma Design Draft Generation

2.5.1. Detailed Introduction to Figma Design Draft Generation

Converting images into Figma design drafts involves reconstructing the AI-recognized elements into objects in Figma and applying the corresponding styles and layouts. This process can be divided into several key steps: design element reconstruction, style matching, and layout intelligence.

2.5.2. Flowchart

Below is the flowchart for converting images into Figma design drafts:

AI Recognition Results
│
├───> Design Element Reconstruction ──> Create Figma Shapes/Text Elements
│         │
│         └───> Set Size and Position
│
├───> Style Matching ───────> Apply Colors, Fonts, etc., Styles
│
└───> Layout Intelligence ────> Set Element Constraints and Layout Grid

2.5.3. Design Element Reconstruction

In the AI recognition phase, we have already obtained the boundaries and categories of elements in the image. Now, we need to reconstruct these elements in Figma.

Technical Implementation:

- Use the Figma API to create corresponding shapes and text elements.

- Set the size and position of elements based on AI-recognized information.

- If the element is text, also set the font, size, and color.

Code Example (Using Figma Plugin API):

// Figma's REST API cannot create or modify nodes in a file, so this example
// uses the Plugin API, which runs inside the Figma editor.
// Assuming we already have information about an element, including its type, position, size, and style
const elementInfo = {
  type: 'rectangle',
  x: 100,
  y: 50,
  width: 200,
  height: 100,
  fill: '#FF5733'
};

// Convert a '#RRGGBB' string into Figma's RGB format (channels in the 0-1 range)
function hexToRgb(hex) {
  const value = parseInt(hex.slice(1), 16);
  return {
    r: ((value >> 16) & 255) / 255,
    g: ((value >> 8) & 255) / 255,
    b: (value & 255) / 255
  };
}

// Create the rectangle, then set its position, size, and fill
const rect = figma.createRectangle();
rect.x = elementInfo.x;
rect.y = elementInfo.y;
rect.resize(elementInfo.width, elementInfo.height);
rect.fills = [{ type: 'SOLID', color: hexToRgb(elementInfo.fill) }];
figma.currentPage.appendChild(rect);

2.5.4. Style Matching

Style matching involves applying AI-recognized style information to Figma elements, including colors, margins, shadows, etc.

Technical Implementation:

- Parse AI-recognized style data.

- Use the Figma API to update the style properties of elements.
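Parsing style data usually includes converting colors, because Figma represents each color channel as a number between 0 and 1 rather than as a hex string or a 0-255 value. The sketch below shows that conversion in Python; the helper names `hex_to_unit_rgb` and `to_figma_fill` are hypothetical, and the input is assumed to be a six-digit `#RRGGBB` string.

```python
def hex_to_unit_rgb(hex_color):
    """Convert '#RRGGBB' into a dict of r, g, b floats in the 0-1 range."""
    value = hex_color.lstrip("#")
    if len(value) != 6:
        raise ValueError(f"expected #RRGGBB, got {hex_color!r}")
    r, g, b = (int(value[i:i + 2], 16) for i in (0, 2, 4))
    return {"r": r / 255, "g": g / 255, "b": b / 255}

def to_figma_fill(hex_color):
    """Build a solid-fill description in the shape Figma uses for paints."""
    return {"type": "SOLID", "color": hex_to_unit_rgb(hex_color)}

fill = to_figma_fill("#FF5733")
print(fill)
```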

Code Example (Using Figma Plugin API):

// Figma's REST API cannot modify nodes, so the style is applied through the Plugin API.
// Assuming we already have style information (color channels in the 0-255 range)
const styleInfo = {
  color: { r: 255, g: 87, b: 51 },
  fontSize: 16,
  fontFamily: 'Roboto',
  fontWeight: 400
};

// Update the style of a text element
async function applyTextStyle(textNode) {
  // A font must be loaded before text properties can change; weight 400 is assumed to map to 'Regular'
  const fontName = { family: styleInfo.fontFamily, style: 'Regular' };
  await figma.loadFontAsync(fontName);
  textNode.fontName = fontName;
  textNode.characters = 'Example Text';
  textNode.fontSize = styleInfo.fontSize;
  // Figma expects color channels in the 0-1 range
  const { r, g, b } = styleInfo.color;
  textNode.fills = [{ type: 'SOLID', color: { r: r / 255, g: g / 255, b: b / 255 } }];
}

// Usage inside a plugin: applyTextStyle(figma.createText());

2.5.5. Layout Intelligence

Layout intelligence refers to intelligently arranging elements in Figma based on the relative positional relationships between elements.

Technical Implementation:

- Analyze the spatial relationships between elements.

- Use the Figma API to set constraints and layout grids for elements.
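The first step, analyzing spatial relationships, can be approximated with simple geometry before anything is sent to Figma. The sketch below guesses whether a group of sibling elements flows horizontally or vertically and measures the gaps between them. It is a heuristic illustration only: the function name `infer_layout` and the sample boxes are hypothetical, and boxes are assumed to be (x, y, width, height) tuples.

```python
def infer_layout(boxes):
    """Guess whether boxes (x, y, width, height) form a row or a column.

    Returns (direction, gaps): direction is 'HORIZONTAL' or 'VERTICAL', and
    gaps lists the spacing between consecutive elements along that axis.
    """
    xs = [x + w / 2 for x, y, w, h in boxes]
    ys = [y + h / 2 for x, y, w, h in boxes]
    # The axis along which the centers spread out more is taken as the flow direction
    horizontal = (max(xs) - min(xs)) >= (max(ys) - min(ys))
    if horizontal:
        ordered = sorted(boxes, key=lambda b: b[0])
        gaps = [nxt[0] - (cur[0] + cur[2]) for cur, nxt in zip(ordered, ordered[1:])]
        return "HORIZONTAL", gaps
    ordered = sorted(boxes, key=lambda b: b[1])
    gaps = [nxt[1] - (cur[1] + cur[3]) for cur, nxt in zip(ordered, ordered[1:])]
    return "VERTICAL", gaps

# Hypothetical boxes for three stacked elements
direction, gaps = infer_layout([(20, 10, 200, 40), (20, 66, 200, 40), (20, 122, 200, 40)])
print(direction, gaps)  # VERTICAL [16, 16]
```

Equal gaps such as the 16-pixel spacing here suggest that the group could be expressed as an auto layout frame with a fixed item spacing rather than as absolutely positioned children.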

Code Example (Using Figma Plugin API):

// Assuming we already have information about the spatial relationships between elements.
// The values below are placeholders: figma.getNodeById expects node IDs (for example '1:23'), not layer names.
const layoutInfo = {
  parentFrame: 'Frame_1',
  childElements: ['Rectangle_1', 'Text_1']
};

// Set the constraints for elements in the Figma plugin
const parentFrame = figma.getNodeById(layoutInfo.parentFrame);
if (parentFrame && parentFrame.type === 'FRAME') {
  layoutInfo.childElements.forEach(childId => {
    const child = figma.getNodeById(childId);
    if (child) {
      // Move the child into the frame, then make it scale with the frame
      parentFrame.appendChild(child);
      child.constraints = { horizontal: 'SCALE', vertical: 'SCALE' };
    }
  });
}

3. Design Draft to Code

3.1. What is Screenshot to Code

Screenshot to code uses machine learning and artificial intelligence to automatically convert design images (such as Figma files or screenshots) into usable front-end or client-side code. It recognizes design elements and visual features such as layout, colors, and fonts, and converts them into design drafts or code. The process typically involves the following steps:

3.1.1. Image Preprocessing

In the image preprocessing stage, the system prepares the input design image for better subsequent analysis and feature extraction. This stage may include the following steps:

- Image Cleaning: Removing noise and unnecessary information from the image.

- Image Enhancement: Adjusting the image's contrast and brightness to highlight key visual elements.

- Size Adjustment: Scaling the image to a standard size for more consistent processing by the model.

- Color Normalization: Ensuring color consistency so the model can accurately recognize and classify design elements.
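Two of these steps, size adjustment and contrast adjustment, can be sketched on a tiny grayscale image represented as a list of rows. This is a simplified illustration with hypothetical function names; a real pipeline would normally use an image library, and the choice of nearest-neighbor sampling and linear contrast stretching is an assumption rather than the method the article describes.

```python
def resize_nearest(image, new_height, new_width):
    """Resize a 2D grayscale image (list of rows) with nearest-neighbor sampling."""
    old_height, old_width = len(image), len(image[0])
    return [
        [image[r * old_height // new_height][c * old_width // new_width]
         for c in range(new_width)]
        for r in range(new_height)
    ]

def stretch_contrast(image):
    """Linearly rescale pixel values so the darkest is 0.0 and the brightest is 1.0."""
    flat = [p for row in image for p in row]
    low, high = min(flat), max(flat)
    if high == low:
        return [[0.0 for _ in row] for row in image]
    return [[(p - low) / (high - low) for p in row] for row in image]

# Hypothetical 2x2 grayscale input with values in the 0-255 range
tiny = [[50, 100], [150, 250]]
resized = resize_nearest(tiny, 4, 4)
normalized = stretch_contrast(resized)
print(normalized[0])  # [0.0, 0.0, 0.25, 0.25]
```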

3.1.2. Feature Extraction

Feature extraction is the key step in identifying and parsing design elements. At this stage, computer vision technology is used to detect different components in the image, such as:

- Element Detection: Identifying buttons, input boxes, images, lists, and text areas, etc.

- Edge Detection: Determining the boundaries and shapes of elements.

- Text Recognition (OCR): Extracting text content from the design.

- Color and Style Analysis: Identifying the colors, backgrounds, fonts, and other style properties of elements.
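As one small example of color and style analysis, the background color of a detected element can be estimated as the most frequent pixel color inside its bounding box. The sketch below assumes the pixels have already been extracted as (r, g, b) tuples; the function name `dominant_color` and the sample data are hypothetical.

```python
from collections import Counter

def dominant_color(pixels):
    """Return the most frequent (r, g, b) tuple among the given pixels as a hex string."""
    (r, g, b), _count = Counter(pixels).most_common(1)[0]
    return f"#{r:02X}{g:02X}{b:02X}"

# Hypothetical pixels sampled from a detected button: mostly blue, with some white label pixels
button_pixels = [(0, 123, 255)] * 90 + [(255, 255, 255)] * 10
background = dominant_color(button_pixels)
print(background)  # #007BFF
```

Exact-match counting works for flat UI colors; gradients or anti-aliased edges would need the colors to be quantized or clustered first.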

3.1.3. Layout Understanding

In the layout understanding stage, the AI model needs to comprehend the overall structure and the relationships between elements in the design. This typically involves:

- Hierarchy Analysis: Determining the parent-child relationships and stacking order of elements.

- Spatial Relationships: Analyzing the positional relationships between elements, such as alignment, spacing, and overlap.

- Layout Pattern Recognition: Identifying common layout patterns, such as grid systems, flexbox layouts, etc.
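Hierarchy analysis can be approximated from bounding boxes alone: an element's parent is taken to be the smallest other element that fully contains it. The sketch below illustrates that heuristic; the function names and sample elements are hypothetical, boxes are (x, y, width, height) tuples, and it assumes no two elements share an identical box.

```python
def contains(outer, inner):
    """True if box `inner` lies entirely within box `outer`."""
    ox, oy, ow, oh = outer
    ix, iy, iw, ih = inner
    return ox <= ix and oy <= iy and ix + iw <= ox + ow and iy + ih <= oy + oh

def build_hierarchy(elements):
    """Map each element name to its parent: the smallest other element that contains it."""
    parents = {}
    for name, box in elements.items():
        candidates = [
            (other_box[2] * other_box[3], other_name)
            for other_name, other_box in elements.items()
            if other_name != name and contains(other_box, box)
        ]
        parents[name] = min(candidates)[1] if candidates else None
    return parents

# Hypothetical detected elements
elements = {
    "screen": (0, 0, 375, 812),
    "card": (16, 100, 343, 200),
    "button": (32, 220, 120, 40),
}
parents = build_hierarchy(elements)
print(parents)  # {'screen': None, 'card': 'screen', 'button': 'card'}
```

The resulting parent map corresponds to the nesting of frames in a design file and of container elements in generated code.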

3.1.4. Code Generation

Code generation is the process of converting extracted design features and layout information into actual code. This is typically achieved through:

- Neural Networks: Using deep learning models, such as Convolutional Neural Networks (CNNs) or Recurrent Neural Networks (RNNs), to generate the structure and content of the code.

- Semantic Understanding: Using natural language processing technologies to understand and generate the semantic structure of the code, ensuring the generated code meets logical and functional requirements.

3.2. Generation Flowchart

graph LR
    A[Figma Design File] --> B[Parse Design]
    B --> C[Convert to DSL, Autolayout, Smart Naming, etc.]
    C --> D[Generate Front-end Code]
    C --> E[Generate Client-side Code]

3.2.1. Convert to DSL

The process of converting to DSL involves parsing each element in the design file and converting it into a more general and structured format. Below is a simplified example, showing how to convert a button in Figma design into DSL.

// Assuming we have a Figma button object
const figmaButton = {
  type: 'RECTANGLE',
  name: 'btnLogin',
  cornerRadius: 5,
  fill: '#007BFF',
  width: 100,
  height: 40,
  text: 'Login'
};

// Convert to DSL
function convertToDSL(figmaObject) {
  return {
    elementType: 'Button',
    properties: {
      id: figmaObject.name,
      text: figmaObject.text,
      style: {
        width: figmaObject.width + 'px',
        height: figmaObject.height + 'px',
        backgroundColor: figmaObject.fill,
        borderRadius: figmaObject.cornerRadius + 'px'
      }
    }
  };
}

const dslButton = convertToDSL(figmaButton);
console.log(dslButton);

3.2.2. Generate Front-end Code

Once we have the DSL representation, we can convert it into front-end code. Below is an example of converting DSL into HTML and CSS.

<!-- HTML -->

<button id="btnLogin" style="width: 100px; height: 40px; background-color: #007BFF; border-radius: 5px;">

Login

</button>

/* CSS */
#btnLogin {
  width: 100px;
  height: 40px;
  background-color: #007BFF;
  border-radius: 5px;
}
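The HTML and CSS above show the result of the conversion; the converter itself could be sketched as follows. This is a minimal Python illustration that handles only the button DSL from section 3.2.1, not a general generator. The function names are hypothetical, and the DSL dictionary is a Python mirror of the JavaScript object shown earlier.

```python
import html
import re

def camel_to_kebab(name):
    """Convert a camelCase style key such as 'backgroundColor' to 'background-color'."""
    return re.sub(r"([A-Z])", lambda m: "-" + m.group(1).lower(), name)

def dsl_to_html_css(dsl):
    """Render a DSL button description into an (html, css) pair of strings."""
    props = dsl["properties"]
    element_id = html.escape(props["id"], quote=True)
    markup = f'<button id="{element_id}">{html.escape(props["text"])}</button>'
    declarations = "\n".join(
        f"  {camel_to_kebab(key)}: {value};" for key, value in props["style"].items()
    )
    css = f"#{props['id']} {{\n{declarations}\n}}"
    return markup, css

# Python mirror of the DSL object produced in section 3.2.1
dsl_button = {
    "elementType": "Button",
    "properties": {
        "id": "btnLogin",
        "text": "Login",
        "style": {
            "width": "100px",
            "height": "40px",
            "backgroundColor": "#007BFF",
            "borderRadius": "5px",
        },
    },
}
markup, css = dsl_to_html_css(dsl_button)
print(markup)
print(css)
```

Keeping the styles in a CSS rule rather than an inline attribute is a design choice of this sketch; the same style dictionary could just as well be emitted inline.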

3.2.3. Generate Client-side Code

Similarly, we can convert the DSL into client-side code, such as React components.

// React Component
function LoginButton(props) {
  return (
    <button
      id="btnLogin"
      style={{
        width: '100px',
        height: '40px',
        backgroundColor: '#007BFF',
        borderRadius: '5px'
      }}
    >
      Login
    </button>
  );
}

4. Codia AI Supports Automatic Generation of Figma Design Drafts and Code from Any Image

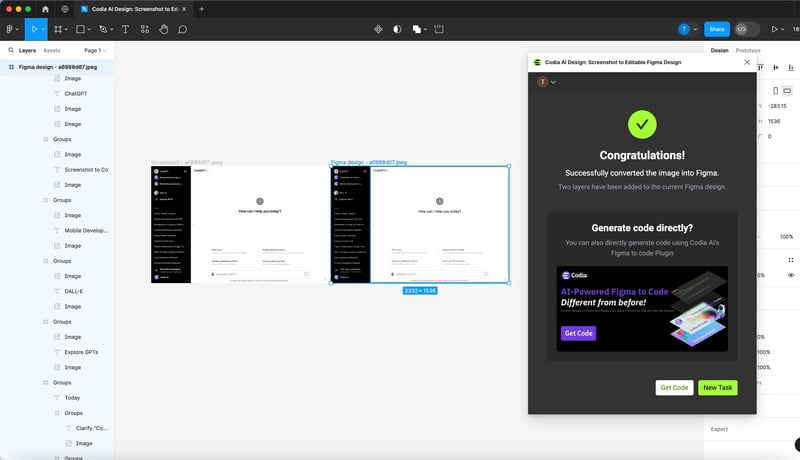

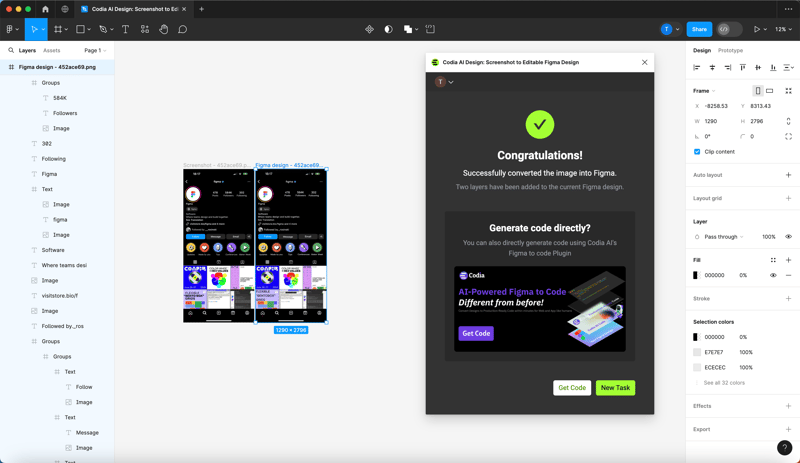

1. First, use any image (supported on both mobile and web) to generate a design draft with one click at Codia AI Design (website: https://codia.ai/d/5ZFb).

Web example with ChatGPT:

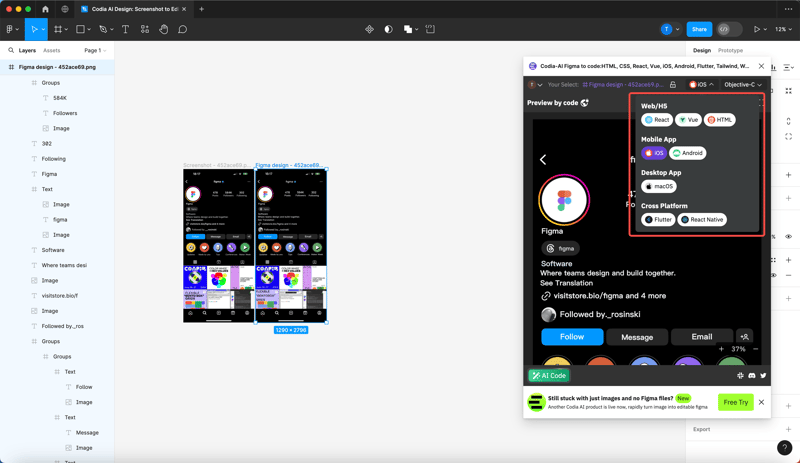

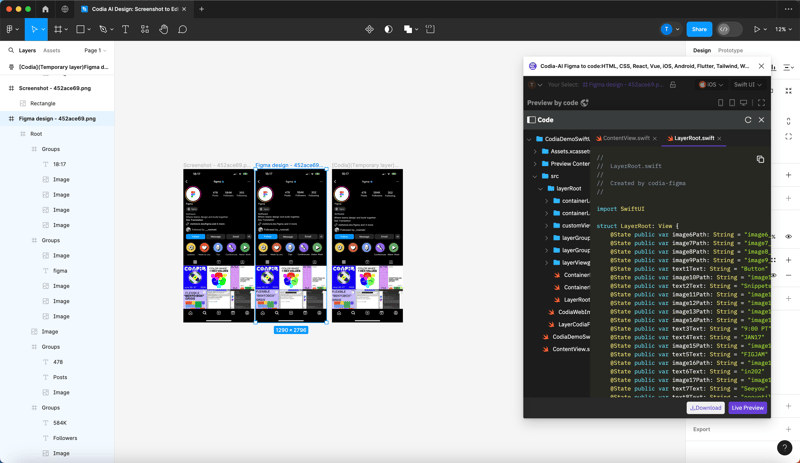

Mobile example with Figma:

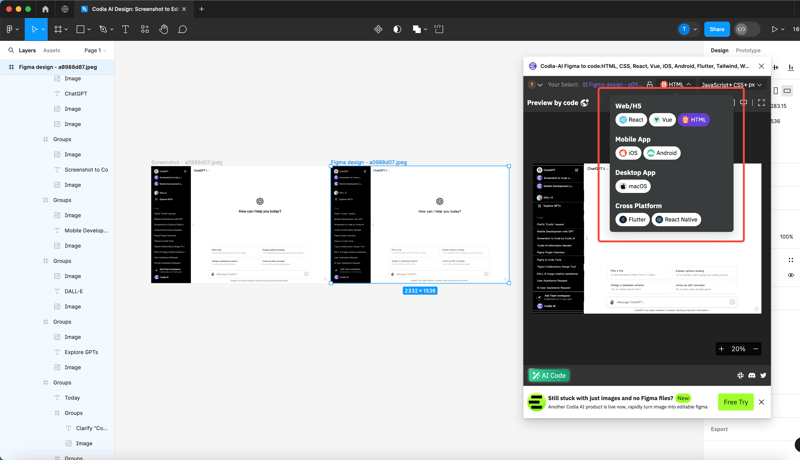

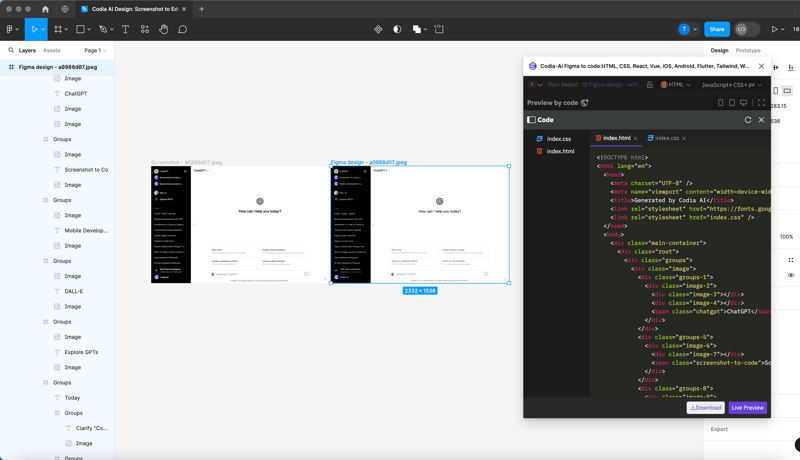

2. Then, open Codia AI Code (website: https://codia.ai/s/YBF9) to generate code with one click. Codia AI Code currently supports React Native, Flutter, React, Vue, HTML, CSS, JavaScript, TypeScript, iOS, Android, macOS, Swift, SwiftUI, Objective-C, Java, Kotlin, and Jetpack Compose UI.

Generate web code for ChatGPT:

Generate iOS code for Figma:

5. Conclusion

This walkthrough of the "image to design draft and code" process shows how these technologies can improve the efficiency of designers and developers and bring design and development closer together. From image preprocessing and feature extraction through layout understanding to code generation, each step relies on AI to interpret design intent and automate code generation. With Codia AI, the path from any image to a Figma design draft and then to front-end or client-side code becomes a largely one-click process, which helps teams that iterate and ship quickly. As these technologies mature, design and development work should become more seamless and collaborative, further accelerating innovation.