1. Preface

Memory management is a critical aspect of C++ programming and directly affects a program's performance and stability. General-purpose allocation through new and malloc is simple to use. In high-performance and highly concurrent programs, however, it can become a bottleneck and can fragment the heap. Memory pools are one technique for addressing these problems.

2. Introduction to Memory Pools

2.1. Why Use Memory Pools

A memory pool is an allocation strategy that pre-allocates a large block of memory and divides it into fixed-size chunks for the program to use. This reduces memory fragmentation and speeds up allocation. The main problems memory pools address are:

- Memory Fragmentation Issues: Frequent allocation and release of differently sized objects can leave many small free gaps in the heap that cannot satisfy larger requests, which lowers memory utilization. Memory pools reduce fragmentation by handing out fixed-size blocks, so any freed block can serve the next request.

- Allocation Efficiency Issues: Every call to a general-purpose allocator pays for bookkeeping, and requests that reach the operating system also incur system-call overhead. Memory pools reduce this overhead by requesting memory in large batches and managing it themselves.

2.2. Memory Pool Principles

Memory pools manage memory through the following steps:

- Pre-allocate a large memory chunk and divide it into multiple fixed-size memory blocks.

- Maintain a free memory block list, connecting each block with pointers.

- When the program requests memory, remove a memory block from the free list and return it to the program.

- When memory is released, re-add the memory block to the free list.

- If memory blocks are insufficient, request a new memory area, and continue to divide it into blocks.
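The steps above can be sketched as a small single-threaded pool. This is an illustrative sketch, not a reference implementation. The class name FixedBlockPool and its parameters are hypothetical. It stores the free list inside the free blocks themselves (an "intrusive" free list), so each block is rounded up to hold at least one pointer and to keep max_align_t alignment.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <new>
#include <vector>

// Hypothetical single-threaded fixed-size pool (illustrative sketch).
class FixedBlockPool {
public:
    FixedBlockPool(std::size_t blockSize, std::size_t blocksPerChunk)
        : blockSize_(roundUp(blockSize)), blocksPerChunk_(blocksPerChunk) {
        assert(blocksPerChunk_ > 0);
    }

    void* allocate() {
        if (freeList_ == nullptr) {
            grow();                    // blocks exhausted: request a new chunk
        }
        Node* node = freeList_;        // remove the head block from the free list
        freeList_ = node->next;
        return node;
    }

    void deallocate(void* p) {
        // Re-add the block to the free list, reusing its bytes as the list node.
        freeList_ = ::new (p) Node{freeList_};
    }

    std::size_t chunkCount() const { return chunks_.size(); }

private:
    struct Node { Node* next; };

    // Each block must be able to hold a Node, and every block must stay aligned.
    static std::size_t roundUp(std::size_t n) {
        const std::size_t align = alignof(std::max_align_t);
        if (n < sizeof(Node)) n = sizeof(Node);
        return (n + align - 1) / align * align;
    }

    // Pre-allocate one large chunk and divide it into fixed-size blocks.
    void grow() {
        chunks_.push_back(std::make_unique<unsigned char[]>(blockSize_ * blocksPerChunk_));
        unsigned char* base = chunks_.back().get();
        for (std::size_t i = 0; i < blocksPerChunk_; ++i) {
            deallocate(base + i * blockSize_);
        }
    }

    std::size_t blockSize_;
    std::size_t blocksPerChunk_;
    Node* freeList_ = nullptr;
    std::vector<std::unique_ptr<unsigned char[]>> chunks_;  // owns all chunk memory
};
```

Because the free list is last-in, first-out, a block that was just released is the next one handed out. This tends to be cache-friendly.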

3. Memory Pool Implementation Schemes

3.1. Design Issues

When designing a memory pool, consider the following issues:

- Automatic Growth of the Memory Pool: Whether the memory pool needs to grow automatically when memory blocks are exhausted.

- Memory Usage Increase and Decrease: Whether the pool's total memory usage can only grow, or whether idle memory is returned to the system.

- Fixed Size of Memory Chunks: Whether the size of memory chunks in the pool is fixed and how to handle different sizes of memory requirements.

- Thread Safety: Whether the memory pool supports thread-safe operations in a multi-threaded environment.

- Memory Content Clearance: Whether the memory chunks need to clear their contents upon allocation and release.

3.2. Common Memory Pool Implementation Schemes

- Fixed-Size Buffer Pool: Suitable for scenarios where fixed-size objects are frequently allocated and released.

- dlmalloc: A highly efficient memory allocator written by Doug Lea.

- SGI STL Memory Allocator: Manages small blocks of different sizes with a set of free lists, one per size class (multiples of 8 bytes up to 128 bytes). Larger requests go directly to malloc.

- Loki Small Object Allocator: Keeps its fixed-size chunks in a std::vector and can both grow and shrink the number of chunks.

- Boost object_pool: Sizes its memory blocks for the user's class and tracks unused nodes in a free list.

- ACE_Cached_Allocator and ACE_Free_List: Allocators from the ACE framework that manage fixed-size memory blocks.

- TCMalloc: Google's thread-caching malloc, distributed as part of the open-source gperftools project. It keeps per-thread caches of small objects to reduce lock contention.
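The sketch below illustrates the size-class idea behind the SGI STL allocator in simplified form. It is not the SGI source. The names (SizeClassAllocator, roundUp, freeListIndex), the refill count of 20 blocks, and the choice to free chunks in the destructor are all assumptions of this example. Requests are rounded up to a multiple of 8 bytes, each size class has its own free list, and anything above 128 bytes goes straight to malloc.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <new>
#include <vector>

// Size classes in the style of the SGI STL allocator: 8, 16, ..., 128 bytes.
constexpr std::size_t kAlign = 8;
constexpr std::size_t kMaxBytes = 128;
constexpr std::size_t kNumLists = kMaxBytes / kAlign;  // 16 free lists

constexpr std::size_t roundUp(std::size_t bytes) {
    return (bytes + kAlign - 1) & ~(kAlign - 1);
}
constexpr std::size_t freeListIndex(std::size_t bytes) {
    return (bytes + kAlign - 1) / kAlign - 1;  // 1..8 -> 0, 9..16 -> 1, ...
}

class SizeClassAllocator {
public:
    SizeClassAllocator() = default;
    SizeClassAllocator(const SizeClassAllocator&) = delete;
    SizeClassAllocator& operator=(const SizeClassAllocator&) = delete;
    ~SizeClassAllocator() {
        for (void* chunk : chunks_) std::free(chunk);
    }

    void* allocate(std::size_t bytes) {
        assert(bytes > 0);
        if (bytes > kMaxBytes) {                 // large requests bypass the pool
            void* p = std::malloc(bytes);
            if (p == nullptr) throw std::bad_alloc();
            return p;
        }
        Node*& head = lists_[freeListIndex(bytes)];
        if (head == nullptr) refill(head, roundUp(bytes));
        Node* node = head;                       // pop from this size class's list
        head = node->next;
        return node;
    }

    void deallocate(void* p, std::size_t bytes) {
        if (bytes > kMaxBytes) { std::free(p); return; }
        Node*& head = lists_[freeListIndex(bytes)];
        head = ::new (p) Node{head};             // push onto its size class's list
    }

private:
    struct Node { Node* next; };
    static constexpr std::size_t kBlocksPerRefill = 20;  // assumption for this sketch

    // Carve one malloc'd chunk into kBlocksPerRefill blocks of `size` bytes.
    void refill(Node*& head, std::size_t size) {
        void* raw = std::malloc(size * kBlocksPerRefill);
        if (raw == nullptr) throw std::bad_alloc();
        chunks_.push_back(raw);
        char* chunk = static_cast<char*>(raw);
        for (std::size_t i = 0; i < kBlocksPerRefill; ++i) {
            head = ::new (chunk + i * size) Node{head};
        }
    }

    std::array<Node*, kNumLists> lists_{};  // every list starts empty
    std::vector<void*> chunks_;             // returned to malloc only in the destructor
};
```

Note that deallocate needs the original request size so that the block can be routed back to the correct list.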

3.3. STL Memory Allocators

The C++ standard library provides a default memory allocator, std::allocator, and also lets users supply custom allocators to containers. GNU libstdc++ additionally ships extension allocators such as __gnu_cxx::__pool_alloc and __gnu_cxx::__mt_alloc.
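A custom allocator needs only a small interface to work with standard containers: a value_type, allocate, deallocate, a converting constructor for rebinding, and equality comparison. std::allocator_traits supplies the rest. The hypothetical CountingAllocator below forwards to ::operator new and ::operator delete and only records the number of live bytes. It is therefore a sketch of the interface rather than a pool; a pool-backed allocator would replace those two calls. On GNU toolchains, __gnu_cxx::__pool_alloc from <ext/pool_allocator.h> can be plugged into a container in the same way.

```cpp
#include <cassert>
#include <cstddef>
#include <new>
#include <vector>

// Hypothetical minimal allocator that tracks the number of live bytes.
template <typename T>
struct CountingAllocator {
    using value_type = T;

    std::size_t* liveBytes;  // counter shared by all copies and rebinds

    explicit CountingAllocator(std::size_t* counter) noexcept : liveBytes(counter) {}

    // Converting constructor so containers can rebind to their internal node types.
    template <typename U>
    CountingAllocator(const CountingAllocator<U>& other) noexcept : liveBytes(other.liveBytes) {}

    T* allocate(std::size_t n) {
        T* p = static_cast<T*>(::operator new(n * sizeof(T)));
        *liveBytes += n * sizeof(T);
        return p;
    }

    void deallocate(T* p, std::size_t n) noexcept {
        *liveBytes -= n * sizeof(T);
        ::operator delete(p);
    }
};

// Two allocators compare equal when memory from one can be freed by the other.
template <typename T, typename U>
bool operator==(const CountingAllocator<T>& a, const CountingAllocator<U>& b) noexcept {
    return a.liveBytes == b.liveBytes;
}
template <typename T, typename U>
bool operator!=(const CountingAllocator<T>& a, const CountingAllocator<U>& b) noexcept {
    return !(a == b);
}
```

Usage: construct the allocator with a counter and pass it to the container, for example `CountingAllocator<int> alloc(&bytes); std::vector<int, CountingAllocator<int>> v(alloc);`.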

4. Memory Pool Design

4.1. Why Use Memory Pools

Memory pools can reduce memory fragmentation and improve allocation efficiency. Because memory is obtained from the operating system in large blocks up front, they also reduce the number of later requests to the operating system, which improves performance.

4.2. Evolution of Memory Pools

The design of memory pools has evolved in stages. The simplest scheme is a single linked list of free blocks. FreeList pools then use a hash map to keep one free list per block size. Segregated-fit designs go further and are modeled on how malloc itself manages memory. Each design has its own trade-offs and suits different scenarios.
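A FreeList pool with hash mapping can be sketched by keying a std::unordered_map on the requested size, with one list of cached blocks per size. The HashedFreeListPool class below is hypothetical. It never merges or splits blocks, and it falls back to ::operator new when no cached block of exactly the requested size is available. It is meant only to show the lookup structure.

```cpp
#include <cassert>
#include <cstddef>
#include <new>
#include <unordered_map>
#include <vector>

// Hypothetical pool: one free list per block size, found through a hash map.
class HashedFreeListPool {
public:
    HashedFreeListPool() = default;
    HashedFreeListPool(const HashedFreeListPool&) = delete;
    HashedFreeListPool& operator=(const HashedFreeListPool&) = delete;

    ~HashedFreeListPool() {
        for (auto& entry : freeLists_) {
            for (void* p : entry.second) ::operator delete(p);
        }
    }

    void* allocate(std::size_t size) {
        auto it = freeLists_.find(size);
        if (it != freeLists_.end() && !it->second.empty()) {
            void* p = it->second.back();  // reuse a cached block of exactly this size
            it->second.pop_back();
            return p;
        }
        return ::operator new(size);      // nothing cached: use the global allocator
    }

    void deallocate(void* p, std::size_t size) {
        assert(p != nullptr);
        freeLists_[size].push_back(p);    // cache the block instead of releasing it
    }

private:
    std::unordered_map<std::size_t, std::vector<void*>> freeLists_;
};
```

Each distinct size gets its own list, so a workload with many different sizes can tie up cached memory that other sizes cannot reuse. Segregated-fit designs address this by grouping sizes into classes.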

5. Specific Implementation of Memory Pools

An efficient memory pool should have the following features:

- Automatically growing total size.

- Fixed-size memory chunks.

- Thread safety.

- Clearing content after memory chunks are returned.

- Compatibility with std::allocator.

A memory pool can track its chunks with linked lists, for example one list of allocated chunks and one of unallocated chunks. Thread safety can be ensured by guarding the allocation and release interfaces with a lock such as std::mutex.
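Separate bookkeeping for allocated and unallocated chunks behind a mutex might look like the following sketch. TrackedPool is a hypothetical name. Keeping an allocated set lets deallocate reject foreign pointers and double frees. Each block is zeroed when it is returned, matching the "clearing content" feature listed above. Blocks are raw bytes carved from each chunk, so callers who store typed objects should choose a blockSize that is a multiple of the alignment they need.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <memory>
#include <mutex>
#include <unordered_set>
#include <vector>

// Hypothetical thread-safe pool with explicit allocated/unallocated bookkeeping.
class TrackedPool {
public:
    TrackedPool(std::size_t blockSize, std::size_t blocksPerChunk)
        : blockSize_(blockSize), blocksPerChunk_(blocksPerChunk) {
        assert(blockSize_ > 0 && blocksPerChunk_ > 0);
    }

    void* allocate() {
        std::lock_guard<std::mutex> lock(mutex_);
        if (free_.empty()) grow();        // automatic growth
        void* p = free_.back();
        free_.pop_back();
        allocated_.insert(p);
        return p;
    }

    // Returns false for pointers not currently handed out by this pool,
    // which also catches double frees.
    bool deallocate(void* p) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (allocated_.erase(p) == 0) return false;
        std::memset(p, 0, blockSize_);    // clear contents on return
        free_.push_back(p);
        return true;
    }

    std::size_t inUse() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return allocated_.size();
    }

private:
    void grow() {  // caller already holds mutex_
        chunks_.push_back(std::make_unique<unsigned char[]>(blockSize_ * blocksPerChunk_));
        unsigned char* base = chunks_.back().get();
        for (std::size_t i = 0; i < blocksPerChunk_; ++i) {
            free_.push_back(base + i * blockSize_);
        }
    }

    std::size_t blockSize_;
    std::size_t blocksPerChunk_;
    std::vector<std::unique_ptr<unsigned char[]>> chunks_;  // owns all memory
    std::vector<void*> free_;                               // unallocated blocks
    std::unordered_set<void*> allocated_;                   // allocated blocks
    mutable std::mutex mutex_;
};
```

A single mutex keeps the sketch simple. Under heavy contention, designs such as TCMalloc instead give each thread its own cache.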

5.1. Usage Example: Application of C++ Memory Pools

To better understand the use of memory pools, we will demonstrate how to implement and use a memory pool in C++ through a simple example. This example will simulate a scenario where multiple threads frequently need to allocate and release memory.

5.2. Example Code

First, we define a simple memory pool class MemoryPool, which will manage fixed-size memory blocks. To simplify the example, we assume that the objects managed by the memory pool are of type char.

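```cpp
#include <cstddef>   // std::size_t
#include <iostream>
#include <mutex>
#include <new>       // std::bad_alloc
#include <vector>

class MemoryPool {
public:
    MemoryPool(std::size_t blockSize, std::size_t blockCount)
        : blockSize_(blockSize), blockCount_(blockCount) {
        // Pre-allocate a certain number of memory blocks
        freeBlocks_.reserve(blockCount_);  // deallocate() then never needs to reallocate
        for (std::size_t i = 0; i < blockCount_; ++i) {
            freeBlocks_.push_back(new char[blockSize_]);
        }
    }

    ~MemoryPool() {
        // Release all memory blocks (blocks still held by callers are not freed)
        for (char* block : freeBlocks_) {
            delete[] block;
        }
    }

    // The pool owns raw memory, so copying it would cause double deletes
    MemoryPool(const MemoryPool&) = delete;
    MemoryPool& operator=(const MemoryPool&) = delete;

    void* allocate() {
        std::lock_guard<std::mutex> lock(mutex_);
        if (freeBlocks_.empty()) {
            throw std::bad_alloc();  // pool exhausted; this simple pool does not grow
        }
        void* block = freeBlocks_.back();
        freeBlocks_.pop_back();
        return block;
    }

    void deallocate(void* block) {
        std::lock_guard<std::mutex> lock(mutex_);
        // block must have been obtained from this pool's allocate()
        freeBlocks_.push_back(static_cast<char*>(block));
    }

private:
    std::size_t blockSize_;
    std::size_t blockCount_;
    std::vector<char*> freeBlocks_;
    std::mutex mutex_;
};
```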

Now, we create an instance of the memory pool and use it to allocate and release memory blocks in multiple threads.

```cpp
#include <chrono>
#include <functional>  // std::ref
#include <thread>

void threadFunction(MemoryPool& pool) {
    for (int i = 0; i < 100; ++i) {
        void* block = pool.allocate();
        // Simulate some work
        std::this_thread::sleep_for(std::chrono::milliseconds(10));
        pool.deallocate(block);
    }
}

int main() {
    const std::size_t blockSize = 1024;  // Size of each memory block
    const std::size_t blockCount = 10;   // Number of memory blocks in the pool
    MemoryPool pool(blockSize, blockCount);

    // Create multiple threads to simulate a concurrent environment
    std::thread threads[5];
    for (int i = 0; i < 5; ++i) {
        threads[i] = std::thread(threadFunction, std::ref(pool));
    }

    // Wait for all threads to complete
    for (int i = 0; i < 5; ++i) {
        threads[i].join();
    }
    return 0;
}
```

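In this example, we create a memory pool that manages 10 memory blocks of 1024 bytes each. We then start 5 threads, and each thread allocates and releases a block from the pool 100 times. The std::mutex inside the pool makes allocate and deallocate safe to call concurrently. Because each thread holds at most one block at a time, the 10 blocks are never exhausted. If more threads held blocks at the same time than the pool contains, allocate would throw std::bad_alloc, since this simple pool does not grow.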

5.3. Considerations

- In practical applications, memory pools may require more complex management strategies, such as supporting memory blocks of different sizes, automatic growth, and merging and splitting of memory blocks.

- Ensuring the thread safety of the memory pool is crucial, especially in a high-concurrency environment.

- When objects managed by the memory pool are destroyed, their destructors must be called correctly. This is usually done by providing a dedicated deleteElement method in the memory pool.
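A typed pool can make destructor handling explicit. In the sketch below, ObjectPool and newElement are hypothetical names chosen for illustration; only deleteElement is mentioned above. newElement constructs an object in a pooled slot with placement new. deleteElement calls the destructor explicitly before recycling the slot. The pool's own destructor does not destroy live objects, so every element must be returned through deleteElement first.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <new>
#include <utility>
#include <vector>

// Hypothetical typed pool: objects are built in pooled slots and destroyed explicitly.
template <typename T, std::size_t SlotsPerChunk = 32>
class ObjectPool {
public:
    template <typename... Args>
    T* newElement(Args&&... args) {
        if (free_.empty()) grow();
        void* slot = free_.back();
        free_.pop_back();
        try {
            return ::new (slot) T(std::forward<Args>(args)...);  // construct in place
        } catch (...) {
            free_.push_back(slot);  // constructor threw: keep the slot available
            throw;
        }
    }

    void deleteElement(T* p) {
        if (p == nullptr) return;
        p->~T();             // run the destructor explicitly
        free_.push_back(p);  // then recycle the raw slot
    }

private:
    // Raw, correctly aligned storage for exactly one T.
    struct Slot { alignas(T) unsigned char bytes[sizeof(T)]; };

    void grow() {
        chunks_.push_back(std::make_unique<Slot[]>(SlotsPerChunk));
        Slot* chunk = chunks_.back().get();
        for (std::size_t i = 0; i < SlotsPerChunk; ++i) {
            free_.push_back(chunk[i].bytes);
        }
    }

    std::vector<std::unique_ptr<Slot[]>> chunks_;  // owns the raw storage
    std::vector<void*> free_;                      // unused slots
};
```

Pairing placement new with an explicit destructor call is what lets a pool reuse memory for objects that own resources, such as strings or file handles, without leaking them.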

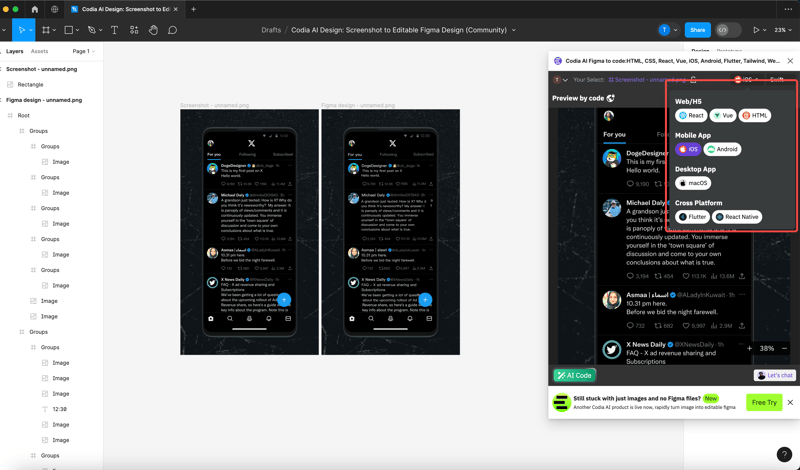

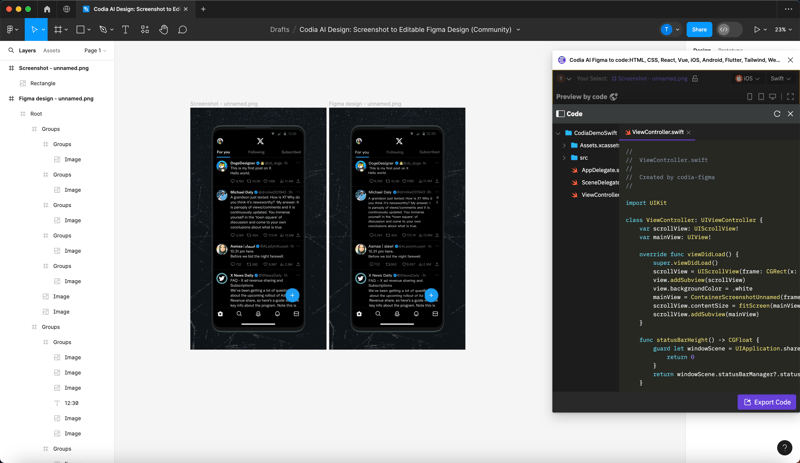

6. Conclusion

Memory management is an important aspect of C++ program design, with a direct impact on the performance and stability of programs. To address the potential performance bottlenecks and memory fragmentation issues of traditional memory allocation methods (such as new and malloc), memory pool technology was proposed. Memory pools reduce memory fragmentation and improve allocation efficiency by pre-allocating a large block of memory and dividing it into fixed-size chunks. When designing memory pools, issues such as the pool's automatic growth, memory usage increase and decrease, fixed size of memory chunks, thread safety, and memory content clearance need to be considered.

There are a variety of memory pool implementation schemes, including fixed-size buffer pools, dlmalloc, SGI STL memory allocators, Loki small object allocators, Boost object_pool, ACE_Cached_Allocator and ACE_Free_List, and TCMalloc. The C++ standard library also provides a default memory allocator std::allocator and allows users to define custom allocators. An efficient memory pool should have features such as an automatically growing total size, fixed-size memory chunks, thread safety, clearing content after memory chunks are returned, and compatibility with std::allocator. The specific implementation of a memory pool can use linked lists to manage memory chunks and ensure thread safety with locks (such as std::mutex).

Through a simple example, we have demonstrated how to implement and use a memory pool in C++. In this example, a memory pool manages fixed-size memory blocks, and multiple threads allocate and release them concurrently. We also noted that real applications may need more complex management strategies, and that ensuring thread safety and correctly calling object destructors is essential.

Memory pools are a valuable tool in C++ programming, offering a solution to overcome the limitations of traditional memory allocation methods. By providing a pre-allocated block of memory divided into fixed-size chunks, memory pools can significantly reduce fragmentation and improve allocation efficiency. As with any advanced technique, proper design and implementation are key to reaping the benefits of memory pools without introducing new complexities or issues. Whether you are working on a high-performance application or simply looking to optimize your memory management, memory pools are worth considering as part of your C++ development toolkit.