Using DJL.ai for Deep Learning-Based Sentiment Analysis in a NiFi Dataflow

Introduction:

I will be talking about this processor at ApacheCon @Home 2020 in my "Apache Deep Learning 301" talk with Dr. Ian Brooks.

Sometimes you want your deep learning easy and in Java, so let's do that with DJL (Deep Java Library) in a custom Apache NiFi processor running in CDP Data Hubs.

Grab the Source:

https://github.com/tspannhw/nifi-djlsentimentanalysis-processor

Grab the most recent release NAR and install it into your NiFi lib directories:

https://github.com/tspannhw/nifi-djlsentimentanalysis-processor/releases/tag/1.2

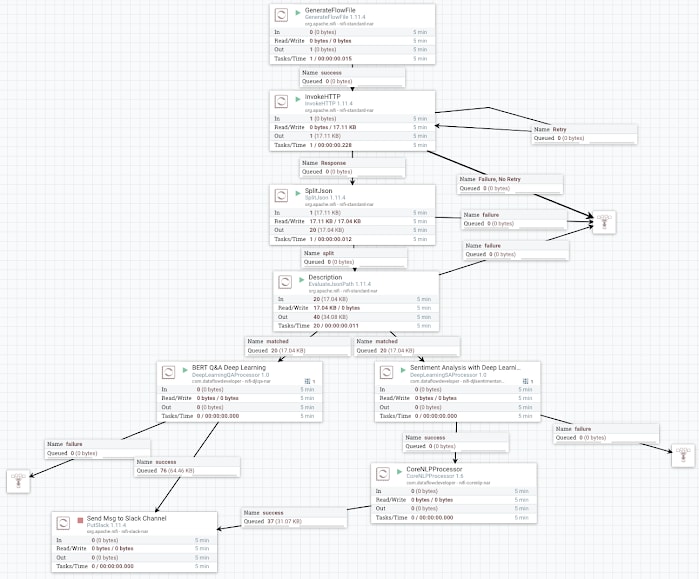

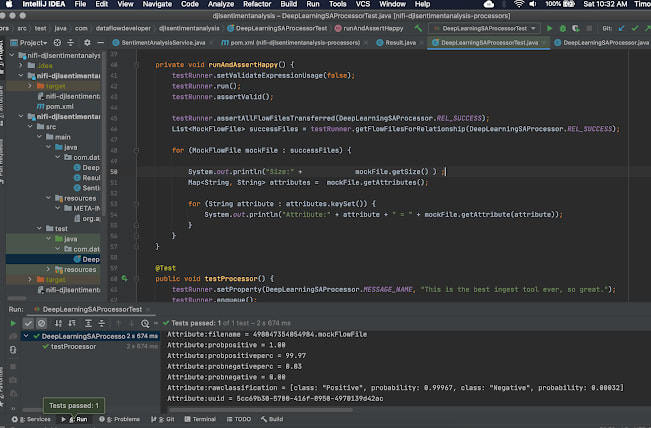

Example Run

| Attribute | Value |
|---|---|
| probnegative | 0.99 |
| probnegativeperc | 99.44 |
| probpositive | 0.01 |
| probpositiveperc | 0.56 |
| rawclassification | [class: "Negative", probability: 0.99440, class: "Positive", probability: 0.00559] |
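The rounded attributes follow from the raw classification: 0.99440 becomes 0.99 and 99.44%, and 0.00559 becomes 0.01 and 0.56%. Below is a minimal sketch of that mapping, assuming half-up rounding to two decimal places. `SentimentAttributes` and its methods are hypothetical names, not taken from the processor's source. The real processor presumably reads the probabilities straight from DJL's result object; parsing the text form here just keeps the sketch free of DJL dependencies.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical helper (not from the processor's source): turns the text form of a
 * classification result into the rounded attribute values shown in the example run.
 */
final class SentimentAttributes {

    // Matches one `class: "Label", probability: 0.12345` pair in the raw text.
    private static final Pattern PAIR =
            Pattern.compile("class: \"([^\"]+)\", probability: ([0-9.Ee+-]+)");

    private SentimentAttributes() {}

    /** Parses every label/probability pair, keeping the order in which they appear. */
    static Map<String, Double> parse(String raw) {
        Map<String, Double> probs = new LinkedHashMap<>();
        Matcher m = PAIR.matcher(raw);
        while (m.find()) {
            probs.put(m.group(1), Double.parseDouble(m.group(2)));
        }
        return probs;
    }

    /** Rounds half-up to two decimal places (assumed rounding mode), e.g. 0.00559 -> "0.01". */
    static String round2(double value) {
        return BigDecimal.valueOf(value).setScale(2, RoundingMode.HALF_UP).toPlainString();
    }

    /** Builds the probnegative/probpositive attributes and their percentage forms. */
    static Map<String, String> toAttributes(String raw) {
        Map<String, Double> probs = parse(raw);
        double negative = probs.getOrDefault("Negative", 0.0);
        double positive = probs.getOrDefault("Positive", 0.0);
        Map<String, String> attrs = new LinkedHashMap<>();
        attrs.put("probnegative", round2(negative));
        attrs.put("probnegativeperc", round2(negative * 100));
        attrs.put("probpositive", round2(positive));
        attrs.put("probpositiveperc", round2(positive * 100));
        attrs.put("rawclassification", raw);
        return attrs;
    }
}
```

Passing the `rawclassification` string above to `SentimentAttributes.toAttributes` reproduces the table values. Inside a NiFi processor, a map like this could be attached to the flowfile with `session.putAllAttributes(flowFile, attrs)`.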

Demo Data Source

https://newsapi.org/v2/everything?q=cloudera&apiKey=REGISTERFORAKEY
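The demo input is the NewsAPI `/v2/everything` endpoint with a `q` search term and an `apiKey`. Inside a flow you would more likely call it with a processor such as InvokeHTTP. The plain Java 11 sketch below only shows the shape of the request. `NewsApiSource` is a hypothetical class, and the status-code check is an illustrative assumption rather than part of the original flow.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/**
 * Hypothetical fetcher for the demo data source. Only the endpoint and the q/apiKey
 * parameters come from the URL above; the rest is an illustrative assumption.
 */
final class NewsApiSource {

    private static final String EVERYTHING = "https://newsapi.org/v2/everything";

    private NewsApiSource() {}

    /** Builds the /v2/everything URI, URL-encoding the search term and the key. */
    static URI everythingUri(String query, String apiKey) {
        return URI.create(EVERYTHING
                + "?q=" + URLEncoder.encode(query, StandardCharsets.UTF_8)
                + "&apiKey=" + URLEncoder.encode(apiKey, StandardCharsets.UTF_8));
    }

    /** Performs a blocking GET with the JDK 11 HttpClient and returns the raw JSON body. */
    static String fetch(String query, String apiKey) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(everythingUri(query, apiKey)).GET().build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IllegalStateException("NewsAPI returned HTTP " + response.statusCode());
        }
        return response.body();
    }
}
```

`NewsApiSource.everythingUri("cloudera", key)` yields the demo URL above. Multi-word queries are encoded with `+` for spaces.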

References:

- Deep Learning Sentiment Analysis with DJL.ai

- https://github.com/awslabs/djl/blob/master/mxnet/mxnet-engine/README.md

- https://github.com/aws-samples/djl-demo/tree/master/flink/sentiment-analysis

- https://github.com/awslabs/djl/releases

Deep Learning Note:

The pretrained model is a DistilBERT model trained by Hugging Face using PyTorch.
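A two-label classifier like this one typically produces one raw score (logit) per label, here Negative and Positive. A softmax then turns those scores into probabilities that sum to 1, which is why 0.99440 and 0.00559 in the example run add up to about 1. The sketch below is the standard, numerically stable softmax in plain Java. Whether DJL's translator for this model computes it exactly this way is an assumption, and `Softmax` is a hypothetical helper name.

```java
/**
 * Numerically stable softmax: turns raw classifier scores (logits) into
 * probabilities that are non-negative and sum to 1.
 */
final class Softmax {

    private Softmax() {}

    static double[] softmax(double[] logits) {
        // Subtract the largest logit first so Math.exp cannot overflow;
        // the shift cancels out in the division, so the result is unchanged.
        double max = Double.NEGATIVE_INFINITY;
        for (double z : logits) {
            max = Math.max(max, z);
        }
        double[] out = new double[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            out[i] = Math.exp(logits[i] - max);
            sum += out[i];
        }
        // Normalize so the probabilities sum to 1.
        for (int i = 0; i < out.length; i++) {
            out[i] /= sum;
        }
        return out;
    }
}
```

With two labels, only the gap between the logits matters. For illustration (these are not the logits from the run), scores of 5.18 and 0.0 give roughly 0.9944 and 0.0056, the same split as the example run.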

Tip

Make sure your NiFi instance has an extra 1-2 GB of RAM for each DJL processor. If you have a lot of text, add more nodes and/or more RAM. Make sure you have at least 8 cores per deep learning process. I prefer JDK 11 for this.
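The rule of thumb above is simple arithmetic: n DJL processors need roughly n to 2n GB of extra RAM and 8 cores per deep learning process. The sketch below encodes that and reports what the running JVM sees. `DjlSizing` is a hypothetical helper. Note that deep learning engines usually allocate much of their memory natively, outside the Java heap, so the "extra RAM" figure is machine memory and not only the `-Xmx` setting in NiFi's `conf/bootstrap.conf`.

```java
/**
 * Hypothetical sizing helper for the tip above: an extra 1-2 GB of RAM per DJL
 * processor and at least 8 cores per deep learning process.
 */
final class DjlSizing {

    static final long GIB = 1024L * 1024L * 1024L;
    static final int CORES_PER_PROCESS = 8;

    private DjlSizing() {}

    /** Lower end of the suggested extra RAM, in bytes (1 GB per DJL processor). */
    static long minExtraRamBytes(int djlProcessors) {
        return djlProcessors * GIB;
    }

    /** Upper end of the suggested extra RAM, in bytes (2 GB per DJL processor). */
    static long maxExtraRamBytes(int djlProcessors) {
        return 2L * djlProcessors * GIB;
    }

    /** Minimum core count for the given number of concurrent deep learning processes. */
    static int minCores(int processes) {
        return CORES_PER_PROCESS * processes;
    }

    /** Reports the cores, JVM max heap and Java feature version of the running JVM. */
    static String checkCurrentJvm(int processes) {
        Runtime rt = Runtime.getRuntime();
        int cores = rt.availableProcessors();
        long maxHeapMiB = rt.maxMemory() / (1024 * 1024);
        String verdict = cores >= minCores(processes) ? "OK" : "below the suggested minimum";
        return "cores=" + cores + " (" + verdict + "), JVM max heap=" + maxHeapMiB
                + " MiB, Java " + Runtime.version().feature();
    }
}
```

For example, three DJL processors call for roughly 3-6 GB of extra RAM, and two concurrent deep learning processes call for at least 16 cores.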

See Also: https://www.datainmotion.dev/2019/12/easy-deep-learning-in-apache-nifi-with.html