I have recently been looking into AWS Step Functions. For those not familiar with them, Step Functions are Amazon’s way of providing a state machine as a service.

If you have used AWS, you have probably used Lambda functions. Lambda functions allow you to easily put a bit of functionality into a stateless, scalable component.

Of course, the issue with this is that most business applications require some form of state to be useful. Business processes generally follow a workflow where one task can only be completed once another has been completed.

AWS Step Functions allows you to easily set up scalable workflows for your serverless components.

Features of Step Functions

The main reason for choosing Step Functions rather than implementing your own workflow engine is all the functionality you get out of the box. I have implemented workflows before, and they can get complicated quickly.

- Error Handling - AWS has error handling built in, and you can easily specify various catch blocks and say what action you want to perform if something fails.

- Retry Handling - Step Functions support retries as well to cope with the inevitable transient error.

- Multiple ways to trigger a workflow - there are a few ways you can kick off a workflow, such as via an API call or a CloudWatch event. I will cover them in more detail later.

- Workflow Progress Visualisation - The AWS Console will show you a visual representation of your workflow as well as showing the progress and whether a step has failed.

Main Benefits of Step Functions

Using AWS Step Functions has many benefits:

- Handles all error handling and retry logic - as mentioned in the features, all this work is already built-in.

- Can handle complex workflows with hundreds of steps - Step Functions can cope with hundreds if not thousands of steps.

- Copes with manual steps - many business processes require a manual review at some point.

- Handles long-lived workflows up to 1 year - workflows using the standard type can last for as long as a year. So you don’t have to worry about your workflow timing out if there is a manual approval step.

- Manage state between executions of stateless functions

- Decouple the application work from business logic

- Scales well and can perform parallel executions

Drawbacks & Limitations of Step Functions

Of course, no technology is perfect and there are a few limitations you need to be aware of.

- Decoupling all the workflow logic might make your application difficult to understand. Once you start linking together numerous individual Lambda components, you need to make sure that the workflow is well understood and documented.

- Need to learn the Amazon States Language, which is based on JSON.

- Locked into an AWS specific technology - Step Functions are unique to AWS and therefore if you plan on moving to a different cloud provider you will need to come up with a different solution.

- Execution History limited to 90 days - if you need longer execution history you will need to rely on the CloudWatch logs.

- Currently, only a few ways to trigger workflow transitions - not all the AWS services support triggering a workflow. The notable ones that are missing are from DynamoDB and Kinesis.

- Data sent between workflow steps is not encrypted - if you want to pass sensitive data between steps, you need to store it encrypted in either DynamoDB or S3 and provide a pointer in your step output.

- Workflows can last up to 1 year on Standard and 5 minutes on Express.

- Execution history has a hard limit of 25,000 events - if you think the number of events in a single execution (including retries) is likely to go above this, you will need to split up your workflow into multiple workflows. Luckily, you can kick off other step functions from a step function.

- Payload size is limited to 256 KB - if you want to pass more than this between states, you will need to use some other storage such as S3 or DynamoDB.

- Only works natively with a handful of AWS services - if you need other applications to be triggered this will need to be done with a lambda wrapper.
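Both the payload limit and the encryption point above are usually handled the same way: store the data elsewhere and pass only a pointer between states. The Python sketch below is one way to do that, under stated assumptions: `store(key, body)` is a hypothetical callable standing in for a write to an encrypted S3 bucket or DynamoDB table, `payloadKey` is an illustrative field name, and the default limit of 262,144 bytes (256 KB) reflects the quota AWS currently documents, so adjust it if yours differs.

```python
import json
import uuid
from typing import Callable

# Assumed default: the 256 KB (262,144 byte) payload quota AWS currently documents.
MAX_PAYLOAD_BYTES = 262_144


def prepare_step_output(
    data: dict,
    store: Callable[[str, bytes], None],
    max_bytes: int = MAX_PAYLOAD_BYTES,
    sensitive: bool = False,
) -> dict:
    """Return `data` inline if small and not sensitive, otherwise a pointer to it.

    `store(key, body)` is a hypothetical stand-in for an encrypted S3/DynamoDB write.
    This is a rough check: the real limit applies to the whole state input/output.
    """
    body = json.dumps(data).encode("utf-8")
    if len(body) <= max_bytes and not sensitive:
        return data
    key = f"step-payloads/{uuid.uuid4()}.json"
    store(key, body)
    # Only the pointer travels between states; the next step loads it back.
    return {"payloadKey": key}
```

The next state then reads `payloadKey` and fetches the real data, so the workflow itself never carries the large or sensitive payload.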

AWS Step Function Workflow Types

When you are setting up a step function you will be given 2 options for the type of workflow you want, Standard or Express.

Standard

This is the default and is best if you have any long-running or manual steps in your workflow. It is also better suited to low-volume systems (requests measured per minute rather than per second).

- Workflows can run for up to one year

- 2,000 executions per second

- 4,000 transitions per second per AWS account

- Priced per state transition

- Supports Activities & Callbacks - these are for long-running services that you don’t want in a lambda.

Express

If you have a high throughput system then you need the express workflow type. The express type is priced differently and does not support long-running processes.

- Workflows can run for up to 5 minutes

- 100,000 executions per second

- Nearly unlimited state transitions

- Priced on the number of executions, duration and memory consumption

- Doesn’t support Activities or Callbacks

AWS Step Function Pricing

The cost of using step functions will depend on whether you have chosen Standard or Express as your workflow type.

Standard Pricing

Standard workflows are priced at $0.025 per 1,000 state transitions. So a workflow with 10 states executed 100 times (1,000 transitions) will cost you $0.025.

If you have a high-throughput system that executes that workflow 1 million times a day, that is 10 million state transitions, which would cost $250 a day (or $91,250 a year!).

Express Pricing

Express workflows have two parts to their pricing. They are charged at $1.00 per 1 million workflow executions ($0.000001 per request), and you are also charged for the workflow duration:

- $0.00001667 per GB-second ($0.0600 per GB-hour) for the first 1,000 GB-hours

- $0.00000833 per GB-second ($0.0300 per GB-hour) for the next 4,000 GB-hours

- $0.00000456 per GB-second ($0.01642 per GB-hour) beyond that

Calculating pricing for express workflows is a bit harder but depending on your throughput it could save you a lot of money.

AWS give a few pricing examples on their website which might help you work out the pricing for your application.
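To compare the two models for your own numbers, here is a rough Python sketch using the prices quoted above. It ignores the Standard free tier and any rounding AWS applies to Express duration and memory, and it applies the Express tiers to whatever totals you pass in (AWS applies them per billing period), so treat the result as an estimate rather than a bill.

```python
# Prices quoted in the Standard and Express sections above (USD).
STANDARD_PRICE_PER_TRANSITION = 0.025 / 1_000
EXPRESS_PRICE_PER_EXECUTION = 1.00 / 1_000_000
# Express duration tiers: (size of the tier in GB-hours, price per GB-second).
EXPRESS_DURATION_TIERS = [
    (1_000, 0.00001667),
    (4_000, 0.00000833),
    (float("inf"), 0.00000456),
]


def standard_cost(executions: int, transitions_per_execution: int) -> float:
    """Standard: billed per state transition (free tier ignored)."""
    return executions * transitions_per_execution * STANDARD_PRICE_PER_TRANSITION


def express_cost(executions: int, seconds_per_execution: float, memory_gb: float) -> float:
    """Express: per-execution charge plus tiered GB-seconds (no rounding applied)."""
    remaining_gb_seconds = executions * seconds_per_execution * memory_gb
    duration_cost = 0.0
    for tier_gb_hours, price_per_gb_second in EXPRESS_DURATION_TIERS:
        # Bill as much of the remaining usage as fits in this tier.
        billed = min(remaining_gb_seconds, tier_gb_hours * 3_600)
        duration_cost += billed * price_per_gb_second
        remaining_gb_seconds -= billed
        if remaining_gb_seconds <= 0:
            break
    return executions * EXPRESS_PRICE_PER_EXECUTION + duration_cost
```

`standard_cost(100, 10)` and `standard_cost(1_000_000, 10)` reproduce the $0.025 and $250 figures from the Standard example. For comparison, `express_cost(1_000_000, 1.0, 0.0625)`, one million executions at a hypothetical 1 second and 64 MB each, comes to roughly $2.04.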

Overview of Step Functions

Now you have seen some of the benefits of step functions, let’s have a look at how we set one up.

Triggering a Step Function

The whole point of having step functions is being able to automate a manual workflow. So naturally, you aren’t going to want to trigger these workflows manually via the AWS Console.

Luckily, AWS comes with several ways that you can trigger Step Functions:

- API Gateway - an API Gateway endpoint can call StartExecution and pass the payload from a POST request on to your step function.

- CloudWatch/EventBridge Events - using EventBridge or CloudWatch Events, you can trigger step functions when another event occurs, such as a file being uploaded to S3.

- Amazon SDK - You can also trigger StartExecution using the SDK either from a Lambda function or another application.

- Other Step Functions - it is good practice to split up large workflows into smaller ones that you can trigger using the task state.
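For the SDK option, boto3's Step Functions client has a `start_execution` method that takes `stateMachineArn`, an optional `name`, and an `input` that must be a JSON string. As a minimal sketch that runs without AWS credentials, the helper below only builds those keyword arguments; the ARN and payload are placeholders.

```python
import json
from typing import Any, Dict, Optional


def build_start_execution_kwargs(
    state_machine_arn: str, payload: dict, name: Optional[str] = None
) -> Dict[str, Any]:
    """Build keyword arguments for boto3's stepfunctions start_execution()."""
    kwargs: Dict[str, Any] = {
        "stateMachineArn": state_machine_arn,
        # Step Functions expects the execution input as a JSON-encoded string.
        "input": json.dumps(payload),
    }
    if name is not None:
        # Optional: if omitted, Step Functions generates an execution name.
        kwargs["name"] = name
    return kwargs


kwargs = build_start_execution_kwargs(
    "arn:aws:states:us-east-1:123456789012:stateMachine:HelloWorld",  # placeholder ARN
    {"orderId": "1234"},
)
# With credentials configured, you would then call:
#   boto3.client("stepfunctions").start_execution(**kwargs)
```

The same call works from a Lambda function or any other application that has the AWS SDK available.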

Amazon States Language

To be able to write step functions, you need to learn Amazon’s States Language. Don’t worry, it is basically just JSON.

Here is a simple one-state hello world example.

{
  "StartAt": "HelloWorld",
  "States": {
    "HelloWorld": {
      "Type": "Pass",
      "Result": "Hello World!",
      "End": true
    }
  }
}

The workflow starts with StartAt, which indicates which state runs first.

All of the states for your workflow then go inside the States property. Note that even though it holds multiple items, it isn’t an array: it is an object keyed by state name.

You can indicate whether a state is an end state or passes on to another state using End and Next. For example, we could split this state up into a Hello state which then passes on to a World state.

{
  "StartAt": "Hello",
  "States": {
    "Hello": {
      "Type": "Pass",
      "Result": "Hello",
      "Next": "World"
    },
    "World": {
      "Type": "Pass",
      "Result": "World!",
      "End": true
    }
  }
}

This isn’t a great example, as we aren’t doing anything with the output of the first state. The result of this step function will just be “World!”. I will get into how we can make this better in the sections below.
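To make the Next/End flow concrete, here is a toy Python interpreter (nothing like how AWS actually runs workflows) that only understands Pass states. Running it over the Hello/World definition shows why only “World!” survives: with no ResultPath, each Pass state’s Result replaces its input.

```python
from typing import Any


def run_pass_only(definition: dict, state_input: Any = None) -> Any:
    """Toy interpreter: follows StartAt/Next/End through Pass states only."""
    current = definition["StartAt"]
    data = state_input
    while True:
        state = definition["States"][current]
        if state["Type"] != "Pass":
            raise ValueError(f"toy interpreter only supports Pass, got {state['Type']}")
        # A Pass state with a Result replaces its input; without one, input passes through.
        if "Result" in state:
            data = state["Result"]
        if state.get("End"):
            return data
        current = state["Next"]


hello_world = {
    "StartAt": "Hello",
    "States": {
        "Hello": {"Type": "Pass", "Result": "Hello", "Next": "World"},
        "World": {"Type": "Pass", "Result": "World!", "End": True},
    },
}
print(run_pass_only(hello_world))  # World!
```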

Step Function States

As we saw from our Hello World example, a state is made up of a Type and either a Next or an End field. The other fields depend on the state Type.

Fields you can use on almost any state include:

- Comment - you can add comments so others know what your workflow is doing (including you, in a few weeks).

- InputPath & OutputPath - these allow you to select a portion of the input to pass on to your state, or a portion of the output to pass on to the next state. I will cover these in more detail in the Inputs and Outputs section below.

There are 8 different states we can use to make up our workflow.

Pass

We saw the Pass state in our hello world example. As the name suggests, this state doesn’t do any work, but it can be used to manipulate the data from the previous state before passing it on to the next.

As we did with our example you can hard code results using the Result parameter. This can be an object as well as a string.

For example:

"Result": {
  "x-datum": 0.381018,
  "y-datum": 622.2269926397355
}

More useful is the Parameters option which allows you to construct an object using values from the previous state result.

For example, if you have the following output of your last state:

{
  "product": {
    "name": "T-Shirt",
    "details": {
      "color": "green",
      "size": "large",
      "material": "nylon"
    },
    "availability": "in stock",
    "cost": "$23"
  }
}

But your next state needs the information in this format:

{
  "product": "T-Shirt",
  "colour": "green",
  "size": "large",
  "availability": "in stock"
}

You can do this using the Parameters field as follows:

"MapValues": {
  "Type": "Pass",
  "Parameters": {
    "product.$": "$.product.name",
    "colour.$": "$.product.details.color",
    "size.$": "$.product.details.size",
    "availability.$": "$.product.availability"
  },
  "Next": "AnotherState"
}

You can use the Parameters field in the Task state as well to change the details you pass on.
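Conceptually, Parameters walks its template: keys ending in `.$` are treated as paths into the state input, and everything else is copied as a literal. The Python sketch below is a simplification that only handles dotted paths like `$.product.details.color` (Step Functions supports much more, such as array indexes, the `$$` context object and intrinsic functions) and reproduces the T-Shirt mapping above.

```python
from typing import Any


def resolve_path(data: Any, path: str) -> Any:
    """Resolve a simple dotted path such as '$.product.name' (no arrays or filters)."""
    if path == "$":
        return data
    if not path.startswith("$."):
        raise ValueError(f"unsupported path: {path}")
    for key in path[2:].split("."):
        data = data[key]
    return data


def apply_parameters(template: Any, state_input: Any) -> Any:
    """Build a new object: 'key.$' values are paths, other values are literals."""
    if not isinstance(template, dict):
        return template
    result = {}
    for key, value in template.items():
        if key.endswith(".$"):
            result[key[:-2]] = resolve_path(state_input, value)
        else:
            # Nested objects may contain further 'key.$' entries.
            result[key] = apply_parameters(value, state_input)
    return result


last_output = {
    "product": {
        "name": "T-Shirt",
        "details": {"color": "green", "size": "large", "material": "nylon"},
        "availability": "in stock",
        "cost": "$23",
    }
}
template = {
    "product.$": "$.product.name",
    "colour.$": "$.product.details.color",
    "size.$": "$.product.details.size",
    "availability.$": "$.product.availability",
}
next_input = apply_parameters(template, last_output)
```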

Task

This is the state you are probably going to be using the most as it is the one that you need to interact with other AWS services.

You need to provide the Resource field, which is usually the ARN of the AWS resource you want to call, such as a Lambda function.

"LambdaState": {
  "Type": "Task",
  "Resource": "arn:aws:lambda:us-east-1:123456789012:function:HelloWorld",
  "Next": "NextState"
}

At the time of writing AWS natively supports the following AWS services:

- Lambda

- AWS Batch

- DynamoDB

- Amazon ECS/AWS Fargate

- Amazon SNS

- Amazon SQS

- AWS Glue

- SageMaker

- Amazon EMR

- CodeBuild

- Athena

- Amazon EKS

- API Gateway

- AWS Glue DataBrew

- AWS Step Functions

You can find an up-to-date list of supported services in Amazon’s developer guide. If your service isn’t listed here, then the easiest thing to do is to write a lambda function that calls it for you.

Choice

A workflow isn’t complete without some decisions being made. The choice state lets you specify conditions that need to be met to go to a particular state. For example, you might require manual approval if the value of the item is over a certain amount.

Given step functions are essentially infrastructure, you might not want to put too much logic into them. If you find yourself trying to write complex conditions, it would be better to write this in a lambda function. At least that way you can write some unit tests around it (you do write unit tests, don’t you?).

"ToBeOrNotToBe": {
  "Type": "Choice",
  "Choices": [
    {
      "Variable": "$.amount",
      "NumericGreaterThan": 1000,
      "Next": "CEOApproval"
    },
    {
      "Variable": "$.amount",
      "NumericGreaterThan": 500,
      "Next": "ManagerApproval"
    }
  ],
  "Default": "Approved"
}
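Choice rules are evaluated in array order and the first match wins, which is why the `> 1000` rule has to come before the `> 500` rule. The toy Python evaluator below mirrors the ToBeOrNotToBe state; it supports only `NumericGreaterThan` on top-level `$.field` variables, which is an assumption made to keep the sketch short.

```python
from typing import Any

# The "ToBeOrNotToBe" Choice state from above, as Python data.
CHOICE_STATE = {
    "Type": "Choice",
    "Choices": [
        {"Variable": "$.amount", "NumericGreaterThan": 1000, "Next": "CEOApproval"},
        {"Variable": "$.amount", "NumericGreaterThan": 500, "Next": "ManagerApproval"},
    ],
    "Default": "Approved",
}


def next_state(choice_state: dict, state_input: dict) -> str:
    """Return the Next of the first matching rule, otherwise the Default."""
    for rule in choice_state["Choices"]:
        # Toy path handling: only top-level '$.field' variables.
        value: Any = state_input[rule["Variable"].removeprefix("$.")]
        if value > rule["NumericGreaterThan"]:
            return rule["Next"]
    if "Default" not in choice_state:
        # The real service fails the execution with States.NoChoiceMatched here.
        raise RuntimeError("States.NoChoiceMatched")
    return choice_state["Default"]
```

For example, an amount of 1,000 is not greater than 1,000, so it falls through to the second rule and goes to ManagerApproval.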

Wait

The Wait state does what you would expect. It tells the workflow to wait a certain number of seconds or until a specified timestamp.

"wait_ten_seconds": {
  "Type": "Wait",
  "Seconds": 10,
  "Next": "NextState"
},
"wait_until": {
  "Type": "Wait",
  "Timestamp": "2021-03-14T01:59:00Z",
  "Next": "NextState"
}

To be honest, I can’t think of many reasons you might want to do this. If you are waiting for a process to finish you would be better off using a callback or an activity.

One example could be an ordering system that allows customers to make multiple orders within a 30-minute period. If all the orders are placed within that time, they can all be dispatched as one order. In this case, after receiving an order, you could have the system wait 30 minutes and then bundle the other orders together in the next step.

You would, however, have to stop subsequent orders from kicking off workflows of their own.
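For the order-bundling idea, rather than hard-coding Timestamp you could compute the dispatch time in an earlier step and point the Wait state at it with TimestampPath (for example `"TimestampPath": "$.dispatchAt"`). A small Python sketch of computing that value, where `dispatchAt` is an illustrative field name and the 30-minute window comes from the example above:

```python
from datetime import datetime, timedelta, timezone

# The 30-minute bundling window from the ordering example above.
BUNDLING_WINDOW = timedelta(minutes=30)


def dispatch_at(order_placed: datetime) -> str:
    """ISO 8601 UTC timestamp suitable for a Wait state's Timestamp/TimestampPath."""
    if order_placed.tzinfo is None:
        raise ValueError("order_placed must be timezone-aware")
    when = (order_placed + BUNDLING_WINDOW).astimezone(timezone.utc)
    return when.strftime("%Y-%m-%dT%H:%M:%SZ")


# The earlier step would return something like {"dispatchAt": dispatch_at(now), ...}
# and the Wait state would then use "TimestampPath": "$.dispatchAt".
```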

Succeed

If you want to complete your workflow early, you can use the succeed state. For example, you might have a choice state that determines nothing else needs to be done if a certain condition has been met.

"SuccessState": {
  "Type": "Succeed"
}

As this is a terminal state you do not need to specify a Next or End field.

Fail

Fail is another terminal state, which you might want to use when something unexpected happens. For example, a choice state could send the workflow to a Fail state if any of the values coming through are null.

You can also optionally specify a Cause and an Error that will be returned. As with the succeed state, there is no Next or End field.

"FailState": {
  "Type": "Fail",
  "Cause": "Invalid response.",
  "Error": "ErrorA"
}

Parallel

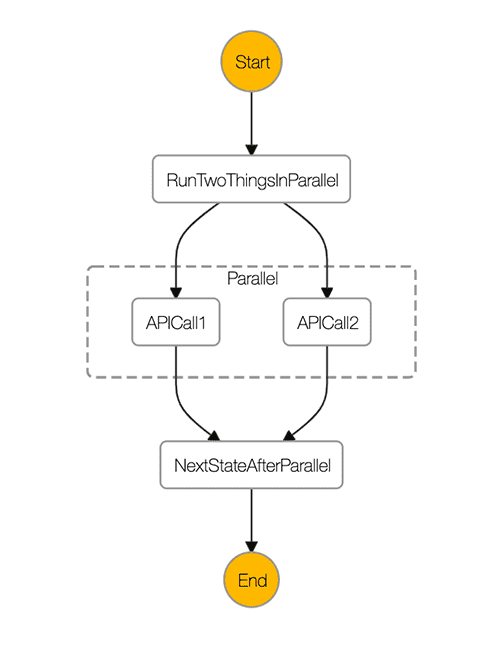

Some steps in your workflow can be run in parallel. If they don’t depend on each other and take in similar parameters, you can run them at the same time with the parallel state. For example, you might want to make two API calls at once.

"RunTwoThingsInParallel": {
  "Type": "Parallel",
  "Next": "NextStateAfterParallel",
  "Branches": [
    {
      "StartAt": "APICall1",
      "States": {
        "APICall1": {
          "Type": "Task",
          "Resource": "arn:aws:lambda:us-east-1:123456789012:function:GetInfo1",
          "End": true
        }
      }
    },
    {
      "StartAt": "APICall2",
      "States": {
        "APICall2": {
          "Type": "Task",
          "Resource": "arn:aws:lambda:us-east-1:123456789012:function:GetInfo2",
          "End": true
        }
      }
    }
  ]
}

The states you want to run in parallel are added as branches. The last state in each branch has to be marked as an end state, and you specify in the parallel state itself which state you want to run next.

Once all the states in your parallel state have finished executing the parallel state will return an array of results. The items in the array are in the order they appear in your parallel state. So in my example, the results of APICall1 will be the first item of the array and the results of APICall2 will be the second item.

Generally, you want to use a Pass state after a Parallel state to turn the array output of a parallel state back into a single JSON object.
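The ordering guarantee and the follow-up merge can be sketched in Python with a thread pool. This is an analogy, not how Step Functions is implemented: `get_info_1` and `get_info_2` are hypothetical stand-ins for the GetInfo1 and GetInfo2 Lambda functions, and the final dictionary merge plays the role of the Pass state.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, List

Branch = Callable[[Any], Any]


def run_parallel(branches: List[Branch], state_input: Any) -> List[Any]:
    """Run every branch on the same input; results keep the branch order."""
    with ThreadPoolExecutor(max_workers=len(branches)) as pool:
        futures = [pool.submit(branch, state_input) for branch in branches]
        # result() re-raises a branch's exception, loosely like a failed branch
        # failing the whole Parallel state.
        return [future.result() for future in futures]


def get_info_1(order: dict) -> dict:
    """Hypothetical stand-in for the GetInfo1 Lambda function."""
    return {"product": order["product"], "color": "green", "size": "large"}


def get_info_2(order: dict) -> dict:
    """Hypothetical stand-in for the GetInfo2 Lambda function."""
    return {"product": order["product"], "stock": 100}


results = run_parallel([get_info_1, get_info_2], {"product": "T-Shirt"})
# The follow-up Pass state: flatten the array back into a single object.
merged = {**results[0], **results[1]}
```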

Map

The map state is similar to map in JavaScript. It is used to iterate over an array and run a set of states for each item in your array.

Taking the example from the AWS docs, if you have an input like this:

{
  "ship-date": "2016-03-14T01:59:00Z",
  "detail": {
    "delivery-partner": "UQS",
    "shipped": [
      { "prod": "R31", "dest-code": 9511, "quantity": 1344 },
      { "prod": "S39", "dest-code": 9511, "quantity": 40 },
      { "prod": "R31", "dest-code": 9833, "quantity": 12 },
      { "prod": "R40", "dest-code": 9860, "quantity": 887 },
      { "prod": "R40", "dest-code": 9511, "quantity": 1220 }
    ]
  }
}

We can use the following Map state to iterate over it:

"Validate-All": {
  "Type": "Map",
  "InputPath": "$.detail",
  "ItemsPath": "$.shipped",
  "MaxConcurrency": 0,
  "Iterator": {
    "StartAt": "Validate",
    "States": {
      "Validate": {
        "Type": "Task",
        "Resource": "arn:aws:lambda:us-east-1:123456789012:function:ship-val",
        "End": true
      }
    }
  },
  "ResultPath": "$.detail.shipped",
  "End": true
}

Here the InputPath is used to select just detail from the JSON input. Then we use ItemsPath to select the array we want to iterate over. You can then specify in Iterator the task you want to run for each item in your array.

In this case, MaxConcurrency is set to 0, which doesn’t place a quota on tasks being run in parallel. If you need the items in your array to be run in order then you can set MaxConcurrency to 1.
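As a rough Python analogy of the Validate-All state, the sketch below selects `detail` (InputPath), iterates over `shipped` (ItemsPath), caps concurrency, and writes the results back into the original input (ResultPath). `validate` is a hypothetical stand-in for the ship-val Lambda function, and the input is trimmed to two items.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, List


def run_map(items: List[Any], iterator: Callable[[Any], Any], max_concurrency: int = 0) -> List[Any]:
    """Apply `iterator` to each item; 0 means no explicit concurrency limit here."""
    if not items:
        return []
    workers = len(items) if max_concurrency == 0 else max_concurrency
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in input order, like the Map state's output array.
        return list(pool.map(iterator, items))


def validate(shipment: dict) -> dict:
    """Hypothetical stand-in for the ship-val Lambda function."""
    return {**shipment, "valid": shipment["quantity"] > 0}


state_input = {
    "ship-date": "2016-03-14T01:59:00Z",
    "detail": {
        "delivery-partner": "UQS",
        "shipped": [
            {"prod": "R31", "dest-code": 9511, "quantity": 1344},
            {"prod": "S39", "dest-code": 9511, "quantity": 40},
        ],
    },
}
detail = state_input["detail"]                     # "InputPath": "$.detail"
results = run_map(detail["shipped"], validate, 1)  # "ItemsPath": "$.shipped", in order
# "ResultPath": "$.detail.shipped" writes the results back into the original input.
output = {**state_input, "detail": {**detail, "shipped": results}}
```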

Inputs and Outputs

There are several ways that you can manipulate the data that is sent as input and received as an output from your states. Not all of these fields are available in every state so you need to check the AWS docs before using them.

Of these, the only fields that are common to almost every state (all except Fail) are InputPath and OutputPath.

InputPath

The InputPath is used to select the part of the state input that you want to pass on to your Task. For example given the following input:

{
  "result1": {
    "val1": 1,
    "val2": 2,
    "val3": 3
  },
  "result2": {
    "val1": "a",
    "val2": "b",
    "val3": "c"
  }
}

If you set the InputPath to $.result2 it would send the following as the state input.

{
  "val1": "a",
  "val2": "b",
  "val3": "c"
}

OutputPath

As we saw with InputPath the same can be done with the output. If you have a lambda that returns several fields but you are only interested in one of them then you can use the OutputPath to select the piece you are interested in.

Parameters

The Parameters field is the most useful one of all as it allows you to create new JSON inputs from your existing fields. I often use the Parameters field on the Pass state just to change the output of the previous state.

I gave one example of using Parameters when describing the Pass state. The other option is to use Parameters to turn the array output of the Map or Parallel state into a single object.

Take this output from a Parallel state that called two different APIs to get information.

[
  {
    "product": "T-Shirt",
    "color": "green",
    "size": "large"
  },
  {
    "product": "T-Shirt",
    "stock": 100
  }
]

You can use the Parameters field to turn this into a single object.

"Parameters": {
  "product.$": "$[0].product",
  "color.$": "$[0].color",
  "size.$": "$[0].size",
  "stock.$": "$[1].stock"
}

ResultPath

You can use ResultPath to specify how the output of your state is going to appear. By default, a state just returns its result, which means the original input to your state is lost.

This is the equivalent of having "ResultPath": "$", which means the result of your state becomes the whole output and replaces the input.

This isn’t always useful. Instead, you can add the result of your lambda to the input. Let’s say we want the output to be added to a result field. This can be done using "ResultPath": "$.result".

Or if you want to completely ignore the output of your state and just return the input you can do "ResultPath": null.

ResultSelector

The ResultSelector is similar to the Parameters field but works on the output instead of the input. It allows you to create a new object from the output of your state. The ResultSelector is executed before the ResultPath.

For example, say we have a lambda that gets the stock for a particular product.

We have this input:

{
  "product": "T-Shirt",
  "color": "green",
  "size": "large"
}

And the output of our lambda function is:

{
  "product": "T-Shirt",
  "stock": 100
}

But we want to get an output that looks like this:

{
  "product": "T-Shirt",
  "color": "green",
  "size": "large",
  "availability": {
    "stock": 100
  }
}

To do that we need to have the following set:

"ResultSelector": {
  "stock.$": "$.stock"
},
"ResultPath": "$.availability"

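To see how these fields combine, here is a toy Python model of a Task state’s data flow: InputPath, then the task, then ResultSelector, then ResultPath, then OutputPath. Parameters is left out, only simple dotted paths are supported, and `get_stock` is a hypothetical stand-in for the stock lambda. Note that ResultPath merges the result into the state’s original (raw) input, not the InputPath-filtered one.

```python
import copy
from typing import Any, Callable, Optional


def get_path(data: Any, path: str) -> Any:
    """Read a simple dotted path like '$.a.b' ('$' is the whole document)."""
    if path == "$":
        return data
    for key in path.removeprefix("$.").split("."):
        data = data[key]
    return data


def set_path(data: Any, path: Optional[str], value: Any) -> Any:
    """ResultPath semantics, applied to a copy of the raw state input."""
    if path is None:  # "ResultPath": null -> keep the input, discard the result
        return data
    if path == "$":  # default: the result replaces the input
        return value
    result = copy.deepcopy(data)
    *parents, last = path.removeprefix("$.").split(".")
    target = result
    for key in parents:
        target = target.setdefault(key, {})
    target[last] = value
    return result


def run_task_state(state_input: Any, task: Callable[[Any], Any], input_path: str = "$",
                   result_selector: Optional[dict] = None,
                   result_path: Optional[str] = "$", output_path: str = "$") -> Any:
    """Toy order of operations: InputPath -> task -> ResultSelector -> ResultPath -> OutputPath."""
    task_result = task(get_path(state_input, input_path))
    if result_selector is not None:
        selected = {}
        for key, value in result_selector.items():
            if key.endswith(".$"):
                selected[key[:-2]] = get_path(task_result, value)
            else:
                selected[key] = value
        task_result = selected
    combined = set_path(state_input, result_path, task_result)
    return get_path(combined, output_path)


def get_stock(product: dict) -> dict:
    """Hypothetical stand-in for the stock-lookup lambda."""
    return {"product": product["product"], "stock": 100}


shirt = {"product": "T-Shirt", "color": "green", "size": "large"}
print(run_task_state(shirt, get_stock,
                     result_selector={"stock.$": "$.stock"},
                     result_path="$.availability"))
```

With `result_path=None` the same call returns the input unchanged, and with the default `"$"` it returns just the lambda’s output, matching the ResultPath options described above.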
Handling Errors in Step Functions

One of the main reasons for using step functions is that all the hard work of building a workflow engine is done for you. This also includes retries and error handling.

Retries

If a transient error occurs, you generally want to retry a few times before raising an error. Adding in retries will make your application more resilient to failure.

"Retry": [
  {
    "ErrorEquals": ["States.ALL"],
    "IntervalSeconds": 3,
    "MaxAttempts": 2,
    "BackoffRate": 2
  }
]

If an error occurs, the above configuration results in the following:

- The error occurs

- Step Functions waits 3 seconds

- It retries the function. If it fails again:

- It waits 6 seconds (the 3-second interval multiplied by the backoff rate of 2)

- It retries the function. If it fails again, the error is raised.

The ErrorEquals can be an exception raised from your application or one of the AWS error names, which include States.ALL, States.DataLimitExceeded, States.Runtime, States.Timeout, States.TaskFailed, and States.Permissions. You can read more about these in the AWS docs.

You can set MaxAttempts to 0 if you don’t want a particular error to be retried. For example, if your step function doesn’t have enough permissions to run, then a retry probably isn’t going to help.

"Retry": [
  {
    "ErrorEquals": ["States.Permissions"],
    "MaxAttempts": 0
  },
  {
    "ErrorEquals": ["States.ALL"]
  }
]

If you only specify ErrorEquals and none of the other fields then it will retry 3 times with a 1 second interval and a backoff rate of 2.

Note: States.ALL has ALL in capitals, unlike the other error names. If you use States.All, you won’t get an error; it will just be ignored.
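The retry behaviour above boils down to two rules: retriers are checked in array order and the first one whose ErrorEquals matches is used, and the waits grow by the backoff rate. A small Python sketch of both, using the defaults mentioned above (IntervalSeconds 1, MaxAttempts 3, BackoffRate 2); treating States.ALL as matching every error name is a simplification of the real service.

```python
from typing import List, Optional

# The second Retry example above, expressed as Python data.
RETRIERS = [
    {"ErrorEquals": ["States.Permissions"], "MaxAttempts": 0},
    {"ErrorEquals": ["States.ALL"]},
]


def retry_delays(retrier: dict) -> List[float]:
    """Seconds waited before each retry attempt, using the defaults when omitted."""
    interval = retrier.get("IntervalSeconds", 1)
    max_attempts = retrier.get("MaxAttempts", 3)
    backoff_rate = retrier.get("BackoffRate", 2.0)
    # Each wait is the previous one multiplied by the backoff rate.
    return [interval * backoff_rate ** attempt for attempt in range(max_attempts)]


def matching_retrier(retriers: List[dict], error_name: str) -> Optional[dict]:
    """Return the first retrier (in array order) whose ErrorEquals matches.

    Simplification: "States.ALL" is treated as matching any error name here.
    """
    for retrier in retriers:
        names = retrier["ErrorEquals"]
        if error_name in names or "States.ALL" in names:
            return retrier
    return None


first_example = {"ErrorEquals": ["States.ALL"], "IntervalSeconds": 3, "MaxAttempts": 2, "BackoffRate": 2}
```

`retry_delays(first_example)` gives the 3- and 6-second waits described above, while a States.Permissions error matches the first entry in `RETRIERS` and so gets no retries at all.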

Catch blocks

Once the retries have been exhausted, the execution will fail unless you set a catch block. The catch block lets you direct the workflow to an error-handling state if a particular error is thrown. For example, you might want to send a message to say the workflow has failed.

"Catch": [
  {
    "ErrorEquals": ["States.ALL"],
    "Next": "ErrorState"
  }
]

As with retries you can add multiple errors and have them go to different states if you want.

Activities & Callbacks

If you use the standard workflow you can use activities and callbacks. There is a subtle difference between activities and callbacks but each allows you to perform some work in your workflow asynchronously.

Activities

Activities were added to step functions first. Once you create an activity, you can use the Task state to send work to it using the activity ARN.

The activity itself doesn’t do any work; it acts as a queue of tasks that need to be picked up. To use activities, you need a process that polls GetActivityTask. When an activity task is available, you will get back a task token along with the task’s input.

Once work has completed you can use the Amazon SDK or an API Gateway to call SendTaskSuccess or SendTaskFailure with your task token.
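A minimal Python sketch of one polling cycle for an activity worker, assuming `client` behaves like `boto3.client("stepfunctions")` (whose `get_activity_task`, `send_task_success` and `send_task_failure` methods map to the API calls above). The client is passed in so the logic can be exercised with a fake; `work` and the worker name are hypothetical. Because GetActivityTask long-polls, check its docs for the recommended client timeout.

```python
import json
from typing import Any, Callable


def poll_activity_once(client: Any, activity_arn: str, work: Callable[[dict], dict],
                       worker_name: str = "example-worker") -> bool:
    """Poll for one activity task, do the work, and report the result.

    Returns False if no task was available during the long poll.
    """
    task = client.get_activity_task(activityArn=activity_arn, workerName=worker_name)
    token = task.get("taskToken")
    if not token:  # the long poll ended without any work
        return False
    try:
        result = work(json.loads(task["input"]))
    except Exception as exc:  # report any failure back to the workflow
        client.send_task_failure(taskToken=token, error=type(exc).__name__, cause=str(exc))
    else:
        client.send_task_success(taskToken=token, output=json.dumps(result))
    return True
```

A real worker would call this in a loop, typically from a long-running process such as a container or EC2 instance rather than a Lambda function.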

Callbacks

Callbacks work similarly, but you don’t have to poll for a task token: it is passed to the service when your task is called. Instead, you add .waitForTaskToken to the end of the resource ARN in your Task and pass the token on (for example, as "$$.Task.Token" in the Parameters field). The catch is that not all AWS services support callbacks. At the time of writing, only the following AWS services support callbacks:

- Lambda

- Amazon ECS/Fargate

- Amazon SNS

- Amazon SQS

- API Gateway

- AWS Step Functions

As with Activities you then need to use Amazon SDK or an API Gateway to call SendTaskSuccess or SendTaskFailure with your task token once work has completed.

Callbacks and Activities can be used for manual approval processes or tasks that take a long time to run.

To implement a manual approval step you could do the following:

- Get your step function to send a message to SQS with .waitForTaskToken.

- Set up an API Gateway that has approve and decline endpoints that call SendTaskSuccess or SendTaskFailure.

- Write a lambda that picks up the SQS message and then sends an email via SES with links to your approve and decline endpoints, with the taskToken added as a query string parameter.
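Two pieces of that approval flow can be sketched in Python under a few assumptions: the queue URL, the `/approve` and `/decline` paths, and the `order` field are placeholders, and `client` stands in for `boto3.client("stepfunctions")`. A real Lambda handler receives `(event, context)`; the client is passed in here instead so the logic can run without AWS.

```python
import json
from typing import Any

# Task state that sends the token to SQS and pauses until the token comes back.
APPROVAL_STATE = {
    "Type": "Task",
    "Resource": "arn:aws:states:::sqs:sendMessage.waitForTaskToken",
    "Parameters": {
        "QueueUrl": "https://sqs.us-east-1.amazonaws.com/123456789012/approvals",
        "MessageBody": {"taskToken.$": "$$.Task.Token", "order.$": "$"},
    },
    "Next": "Approved",
}


def approval_handler(event: dict, client: Any) -> dict:
    """Hypothetical API Gateway (proxy integration) handler for the email links."""
    token = (event.get("queryStringParameters") or {}).get("taskToken")
    if not token:
        return {"statusCode": 400, "body": "missing taskToken"}
    path = event.get("path", "")
    if path.endswith("/approve"):
        client.send_task_success(taskToken=token, output=json.dumps({"approved": True}))
    elif path.endswith("/decline"):
        client.send_task_failure(taskToken=token, error="Declined", cause="Rejected by reviewer")
    else:
        return {"statusCode": 404, "body": "unknown action"}
    return {"statusCode": 200, "body": "Thanks, your response has been recorded."}
```

Task tokens are long, so URL-encode them (for example with `urllib.parse.urlencode`) when building the email links, and add a Catch on the `Declined` error if a rejection should route to its own state.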

Best Practices when using AWS Step Functions

AWS have a list of best practices that you should follow when writing step functions; I recommend you give those a read before trying out step functions in production.

In addition to Amazon’s own best practices, I have a few of my own after having some experience with step functions.

States should be idempotent

Chances are if a workflow fails you are going to want to replay it. Unfortunately, step functions don’t give you the ability to replay a workflow from a particular step.

There is a blog post on the AWS website that outlines how you can do it. However, the approach they outline involves dynamically creating a step function that has a choice state at the start that bypasses other steps. I don’t think that is a practical solution for a production environment.

You could add a choice state at the beginning of your workflow that has branches to every other state in your workflow. However, not only is this quite messy, you can’t isolate individual states in a parallel state.

The preferred approach is therefore to make your states idempotent, so that they can be run multiple times without any adverse effects.
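One common way to make a state idempotent is to key its side effect on a stable business ID (not the execution ID, which changes on every replay) and skip work that has already been done. The Python sketch below uses an in-memory dict as a stand-in for a durable store; that is an assumption for illustration only, since a dict is not shared across concurrent Lambda invocations, which is why something like a DynamoDB conditional write is typically used instead. `charge_card` and `orderId` are hypothetical.

```python
from typing import Callable, Dict, List


class IdempotentStep:
    """Run a side effect at most once per business key (in-memory sketch)."""

    def __init__(self, action: Callable[[dict], dict], key_field: str):
        self.action = action
        self.key_field = key_field
        self.completed: Dict[str, dict] = {}  # stand-in for a durable store

    def __call__(self, event: dict) -> dict:
        key = event[self.key_field]
        if key in self.completed:
            # Replayed execution: return the earlier result, skip the side effect.
            return self.completed[key]
        result = self.action(event)
        self.completed[key] = result
        return result


charges: List[str] = []


def charge_card(event: dict) -> dict:
    """Hypothetical side effect that must not happen twice for one order."""
    charges.append(event["orderId"])
    return {"charged": event["amount"]}


charge_step = IdempotentStep(charge_card, key_field="orderId")
```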

Pass a unique id to your lambda functions

Trying to stitch together logs in a serverless system can be a nightmare if not done correctly, especially if your lambdas are also used outside of your step function. If a lambda raises an error, how do you know which step function execution it relates to?

When you start a step function execution, it is given a name that must be unique within 90 days (you can supply your own, or AWS will generate one). You need to keep in mind that you may need to replay the workflow, in which case you can’t just use the unique id from another system. You will need to append something else to it.

You can, however, pass this unique id down into your state input. The execution id is available in the context object, for example as "$$.Execution.Id" in a Parameters field.
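A Python sketch of both ideas, under stated assumptions: execution names are limited to 80 characters (and must avoid whitespace and some special characters), so `make_execution_name` truncates the upstream ID and appends a short random suffix to keep replays unique. The `handler` is a hypothetical Lambda function that assumes the Task state passes the ID in with something like `"Parameters": {"executionId.$": "$$.Execution.Id", "order.$": "$"}`.

```python
import logging
import uuid

logger = logging.getLogger("orders")


def make_execution_name(source_id: str) -> str:
    """Replay-friendly execution name: upstream ID plus an 8-character random suffix.

    The source ID is truncated so the whole name stays within 80 characters.
    """
    return f"{source_id[:71]}-{uuid.uuid4().hex[:8]}"


def handler(event: dict, context: object) -> dict:
    """Hypothetical Lambda handler that tags its logs with the execution ID."""
    execution_id = event["executionId"]
    order_id = event["order"]["orderId"]
    logger.info("processing order %s (execution %s)", order_id, execution_id)
    return {"executionId": execution_id, "orderId": order_id}
```

Searching your CloudWatch logs for the execution ID then ties any lambda error back to the workflow run that caused it.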

Final Thoughts

Step functions are a great tool to have in your toolbox if you are working with a serverless architecture. I am certainly going to be using them on a few projects in the future.

I will set up a working example at some point in the future and write about it in another blog post.