We all know how important it is to test our code, but there are so many different types of tests that it can be difficult to work out which ones to focus on.

In this article, I will go over the five main types of testing that you should be aware of and when to use each of them.

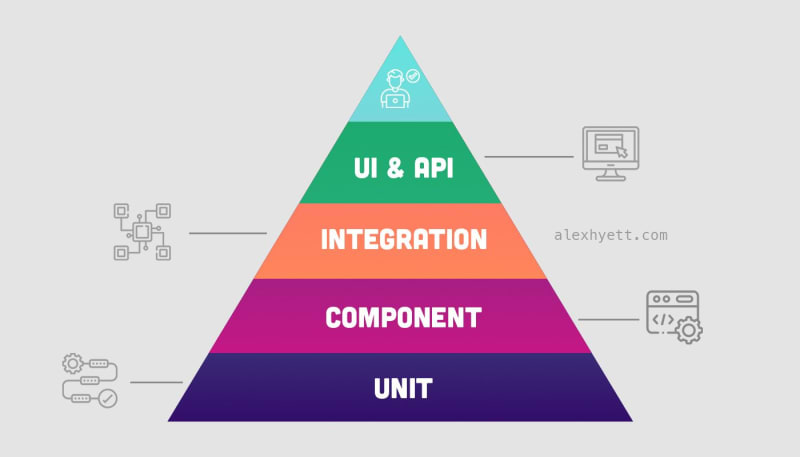

Testing Pyramid

You may have encountered the testing pyramid before but never really thought about the significance of its shape.

Tests at the bottom of the pyramid should make up most of the tests in your test suite. This is where you should be focusing most of your efforts.

As we move up the pyramid the tests become slower, more complex and take more time to maintain.

Unit Tests

At the bottom of our pyramid, we have the unit tests. Most developers understand the importance of unit testing.

We write unit tests for all the methods and functions in our code to make sure that our code is working at the lowest level.

How many unit tests you should write depends on what your goal is.

You should aim to test every line of code in your functions. If you are not able to do that, it is usually a sign that your function is doing too much or that you haven’t written it with testability in mind.
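To make that a bit more concrete, here is a minimal sketch of unit tests that between them execute every line of a small function. The `apply_discount` function and its rules are made up for illustration, and I am using Python's built-in `unittest` module; your own language and framework will look different.

```python
import unittest


def apply_discount(price: float, is_member: bool) -> float:
    """Hypothetical function under test: members get 10% off."""
    if price < 0:
        raise ValueError("price must not be negative")
    if is_member:
        return round(price * 0.9, 2)
    return price


class ApplyDiscountTests(unittest.TestCase):
    # Each test drives a different path, so together they touch every line.
    def test_member_gets_ten_percent_off(self):
        self.assertEqual(apply_discount(100.0, is_member=True), 90.0)

    def test_non_member_pays_full_price(self):
        self.assertEqual(apply_discount(100.0, is_member=False), 100.0)

    def test_negative_price_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(-1.0, is_member=True)
```

Saved to a file, these can be run with `python -m unittest`.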

How much of your code your tests exercise is what we refer to as code coverage. A target of 100% typically means line coverage, but the kind of coverage you need will vary depending on the industry you work in.

For the military and aviation industries you will generally need what they refer to as Modified Condition/Decision Coverage or MC/DC for short.

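For MC/DC you not only test every line, you also need to make sure that every decision takes each possible outcome and that each condition within a decision is shown to independently affect that outcome.

If you have an IF statement with 3 different conditions, there are 8 possible combinations of those conditions. Testing all 8 is exhaustive (that is multiple condition coverage); MC/DC can usually be satisfied with as few as 4 well-chosen cases, one more than the number of conditions.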
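To make the counting concrete, here is a rough sketch for one three-condition decision. An IF statement with three conditions has 2**3 = 8 possible combinations, but MC/DC is usually satisfied by a smaller set, as long as each condition is shown to flip the outcome on its own. The `decision` function and the four chosen cases are my own example, and `satisfies_mcdc` is a simplified check, not a certified coverage tool.

```python
from itertools import product


def decision(a: bool, b: bool, c: bool) -> bool:
    """Hypothetical decision with three conditions."""
    return a and (b or c)


def independence_pairs(index: int):
    """Pairs of inputs that differ only in one condition and flip the outcome."""
    pairs = []
    for case in product([False, True], repeat=3):
        if case[index]:
            continue  # visit each pair once, starting from the False side
        flipped = tuple(not v if i == index else v for i, v in enumerate(case))
        if decision(*case) != decision(*flipped):
            pairs.append((case, flipped))
    return pairs


def satisfies_mcdc(tests) -> bool:
    """True if every condition has an independence pair inside the test set."""
    chosen = set(tests)
    return all(
        any(low in chosen and high in chosen for low, high in independence_pairs(i))
        for i in range(3)
    )


# Exhaustive testing: every combination of the three conditions.
all_cases = list(product([False, True], repeat=3))

# Four hand-picked cases (n + 1 for n = 3 conditions).
mcdc_cases = [
    (True, True, False),
    (False, True, False),   # differs from the first case only in a
    (True, False, False),   # differs from the first case only in b
    (True, False, True),    # differs from the third case only in c
]

print(len(all_cases), satisfies_mcdc(mcdc_cases))
```

Dropping any one of the four cases leaves at least one condition without a pair, which is why n + 1 is the usual minimum for n conditions.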

You would assume that if everything works at the lowest level, it will also work when you put everything together, but that isn’t always the case.

Component Tests

The next level up in our testing pyramid is the Component Tests. This is where you test a complete section of your application.

For example, if you are writing a Web Application you might have a frontend, an API and a Database.

A component test for the API would test the API in isolation from all the other components.

We wouldn’t include the front end in the test and we would also mock out the database and any other external components that get called.

The purpose of the component test is to make sure that your application is working as you expect given certain inputs.

By mocking out the database, we can test both the happy path and the unhappy path of the application. We can see how the application behaves under certain conditions, such as the database being unavailable or a bad request being sent in.
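Here is a cut-down sketch of what that can look like, using `unittest.mock` from the Python standard library. The `get_post` handler, the `find_post` method and the `DatabaseUnavailable` error are all hypothetical names; in a real component test you would more likely call the API over HTTP, but the idea of swapping the database for a mock is the same.

```python
import unittest
from unittest.mock import Mock


class DatabaseUnavailable(Exception):
    """Hypothetical error raised by the data layer when the database is down."""


def get_post(request: dict, db) -> tuple:
    """Hypothetical API handler: returns (status_code, body)."""
    post_id = request.get("post_id")
    if not isinstance(post_id, int):
        return 400, {"error": "post_id must be an integer"}
    try:
        post = db.find_post(post_id)
    except DatabaseUnavailable:
        return 503, {"error": "database unavailable"}
    if post is None:
        return 404, {"error": "post not found"}
    return 200, post


class GetPostComponentTests(unittest.TestCase):
    def test_happy_path_returns_post(self):
        db = Mock()
        db.find_post.return_value = {"id": 1, "title": "Hello"}
        self.assertEqual(
            get_post({"post_id": 1}, db), (200, {"id": 1, "title": "Hello"})
        )
        db.find_post.assert_called_once_with(1)

    def test_bad_request_never_touches_database(self):
        db = Mock()
        status, _ = get_post({"post_id": "abc"}, db)
        self.assertEqual(status, 400)
        db.find_post.assert_not_called()

    def test_database_unavailable_returns_503(self):
        db = Mock()
        # Simulate an outage without needing a real database to break.
        db.find_post.side_effect = DatabaseUnavailable()
        status, _ = get_post({"post_id": 1}, db)
        self.assertEqual(status, 503)
```

The unhappy paths are the real win here: simulating an outage with a mock is a one-liner, whereas forcing a real database to fail on demand is much harder.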

Component tests make sure that all those units that you tested in the previous level also work when you put them together.

Integration Tests

The next level of testing is the integration test. In the previous level, we were mocking out the database and other external APIs. The purpose of integration tests is to make sure that those integrations actually work.

This is usually where you find out that the team you have been working with have decided to use camelCase instead of snake_case for their API, the monsters.

Or you find out that you have the connection string wrong for your database or you have written one of your SQL queries incorrectly.

For all of these tests so far, we generally aren’t doing any testing in an actual deployed environment. Unit Tests, Component Tests and Integration Tests are typically included in the build process or at the very least the release process.

Thanks to Docker it is fairly easy to spin up test databases and use them in your test suite when running on your CI/CD server.

A lot of developers get confused by integration tests, thinking they need to test the whole of their application. That isn’t the case: the integration test, as the name implies, should only test the integration between components.

Who writes your integration tests determines whether they are considered white box testing or black box testing.

If the integration tests are written by a developer and they are writing tests against a repository to make sure that data is being written to a database then this would be considered a white box test.

If, however, your integration test is written by a tester who calls the API and then checks that data is written to the database, this is more of a black box test, as the tester doesn’t need to understand the inner workings of the application.
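As a rough sketch of the developer-written, white box flavour, here is a repository test that runs real SQL against a real database engine. To keep it self-contained I am using an in-memory SQLite database from the Python standard library as a stand-in for the throwaway test database you would normally start in Docker. The `PostRepository` class and the `posts` table are hypothetical.

```python
import sqlite3
import unittest


class PostRepository:
    """Hypothetical repository that persists posts using real SQL."""

    def __init__(self, connection: sqlite3.Connection):
        self.connection = connection

    def add(self, title: str) -> int:
        cursor = self.connection.execute(
            "INSERT INTO posts (title) VALUES (?)", (title,)
        )
        self.connection.commit()
        return cursor.lastrowid

    def get_title(self, post_id: int):
        row = self.connection.execute(
            "SELECT title FROM posts WHERE id = ?", (post_id,)
        ).fetchone()
        return row[0] if row else None


class PostRepositoryIntegrationTests(unittest.TestCase):
    def setUp(self):
        # In-memory SQLite stands in for a throwaway test database.
        self.connection = sqlite3.connect(":memory:")
        self.connection.execute(
            "CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT NOT NULL)"
        )
        self.repository = PostRepository(self.connection)

    def tearDown(self):
        self.connection.close()

    def test_added_post_can_be_read_back(self):
        post_id = self.repository.add("Hello")
        self.assertEqual(self.repository.get_title(post_id), "Hello")

    def test_missing_post_returns_none(self):
        self.assertIsNone(self.repository.get_title(999))
```

Nothing is mocked here, so a typo in the SQL or a mismatched column name fails the test, which is exactly the kind of mistake this level is meant to catch.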

It is not just calling the database that you might test. You might also test the calls to other APIs or even message queues.

End-to-End Tests

So we have tested the individual functions, we have tested the components, and we have tested the interactions between the components. The next step is to test the application from end to end.

If you are writing a web application then these will typically be in the form of UI tests using tools such as Selenium or Cypress to drive the UI through a web browser.

The goal here is to test that everything works as expected. End-to-end tests will typically be a mix of functionality testing, such as making sure that a login works or a list is populated, but they can also include acceptance testing to make sure the application meets the business requirements.

Typically, end-to-end tests will be written in a language called Gherkin, which follows the Given, When, Then pattern and looks something like this.

Given I am a user with edit permissions

And I am logged in

And I am on the View Post page

When I click Edit Post

Then I should be on the Edit Post page

Frameworks such as Cucumber and SpecFlow are used so that we can execute code that performs these steps while the tests remain understandable to the business stakeholders.

End-to-end tests can take a long time to run, so they are not typically run on every build. Once you have written a lot of them, you generally need to run them overnight.
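If you are wondering how plain English sentences end up executing code, the rough idea is that each step is matched against a pattern and handed to a function. The sketch below is a hand-rolled toy to show that idea only; it is not the Cucumber or SpecFlow API, and the `step` decorator, the `context` dictionary and the fake "browser" logic are all my own inventions.

```python
import re

STEPS = []  # (compiled pattern, function) pairs, filled in by the decorator


def step(pattern: str):
    """Register a function as the implementation of a Gherkin-style step."""
    def register(func):
        STEPS.append((re.compile(pattern + r"$"), func))
        return func
    return register


@step(r"I am a user with (\w+) permissions")
def user_with_permissions(context, permission):
    context["permissions"] = {permission}


@step(r"I am logged in")
def logged_in(context):
    context["logged_in"] = True


@step(r"I am on the (.+) page")
def on_page(context, page):
    context["page"] = page


@step(r"I click Edit Post")
def click_edit_post(context):
    # Stand-in for driving a real browser.
    if context.get("logged_in") and "edit" in context.get("permissions", set()):
        context["page"] = "Edit Post"


@step(r"I should be on the (.+) page")
def should_be_on_page(context, page):
    assert context["page"] == page, f"expected {page!r}, got {context['page']!r}"


def run_scenario(text: str) -> dict:
    """Run each line of a scenario against the registered steps."""
    context = {}
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        # Drop the leading keyword (Given/And/When/Then).
        _, _, sentence = line.strip().partition(" ")
        for pattern, func in STEPS:
            match = pattern.match(sentence)
            if match:
                func(context, *match.groups())
                break
        else:
            raise LookupError(f"no step matches: {line.strip()}")
    return context


SCENARIO = """
Given I am a user with edit permissions
And I am logged in
And I am on the View Post page
When I click Edit Post
Then I should be on the Edit Post page
"""

run_scenario(SCENARIO)  # raises AssertionError if the Then step fails
```

The real frameworks add a lot on top of this (feature files, hooks, reporting), but the split is the same: the business reads the sentences and the developers or testers own the functions behind them.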

This isn’t ideal if you want to be releasing multiple times a day so some teams will split their end-to-end tests into groups and have a critical test group that is run before each deployment.
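Most test runners have their own way of tagging tests into groups, so check what yours offers first. Purely as an illustration of the idea, here is a small sketch using Python's built-in `unittest`, where the `critical` decorator and the `critical_suite` helper are names I have made up, and the test bodies are placeholders for real browser-driven checks.

```python
import unittest


def critical(test_func):
    """Tag a test method as part of the hypothetical 'critical' group."""
    test_func.critical = True
    return test_func


class WebsiteEndToEndTests(unittest.TestCase):
    @critical
    def test_user_can_log_in(self):
        self.assertTrue(True)  # placeholder for a real browser-driven check

    def test_profile_picture_can_be_changed(self):
        self.assertTrue(True)  # placeholder; left for the overnight run


def critical_suite(test_case_class) -> unittest.TestSuite:
    """Build a suite containing only the methods tagged with @critical."""
    loader = unittest.defaultTestLoader
    names = [
        name
        for name in loader.getTestCaseNames(test_case_class)
        if getattr(getattr(test_case_class, name), "critical", False)
    ]
    return unittest.TestSuite(test_case_class(name) for name in names)
```

The deployment pipeline would then run only `critical_suite(WebsiteEndToEndTests)`, while the overnight job runs everything.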

Unlike the other tests, end-to-end tests need all the components together, so they are typically run in a test environment such as QA or UAT.

It can take a while to build a stable suite of end-to-end tests, especially if you are running them in a browser. Subtle things such as your application taking a little longer to load can cause tests to break, so you generally need someone working full-time on your automated tests.
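A common way to take the sting out of slow page loads is to poll for a condition instead of assuming the page is ready straight away. Browser automation tools generally ship their own waiting helpers, which you should prefer; the `wait_until` function below is just a generic sketch of the idea, with a made-up name and default values.

```python
import time


def wait_until(condition, timeout: float = 5.0, interval: float = 0.1) -> bool:
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Simulate a page element that only appears after a short delay.
page_opened_at = time.monotonic()


def element_is_visible() -> bool:
    return time.monotonic() - page_opened_at > 0.2


found = wait_until(element_is_visible, timeout=2.0, interval=0.05)
```

The test only fails if the element is still missing once the timeout has passed, so a page that is a little slow on one run no longer breaks the build.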

Many of the frameworks allow you to take screenshots when tests fail which can be helpful to see what caused the failure.

There are quite a lot of different tests that fall under this bracket including:

- Performance Tests

- Regression Tests

- Security Tests

Manual Tests

At the very top of the pyramid are manual tests. Some features might be too hard to automate or not worth the time it would take to automate them.

Usually, it is a case of not having enough testers relative to the number of developers, which results in tests being run manually instead of being automated.

Ideally, you want the majority of your tests to be automated, otherwise you are always going to be stuck in a vicious cycle of not having enough time to test your application before each release.

If you find a bug in your application it is always better to find it lower down the pyramid than near the top.

If you find a bug through manual testing then you need to hunt through the logs and try and work out where the application went wrong which can be really difficult to do.

Compare that to finding a bug with your unit tests, where you will be given a stack trace pointing to the exact line of code that caused the issue.