Starting anything from scratch is a challenge. With Tracetest celebrating its first birthday today, this seemed like a good chance to look back on the first year of the project. Hopefully this information will be of interest to other founders considering starting a new open-source project.

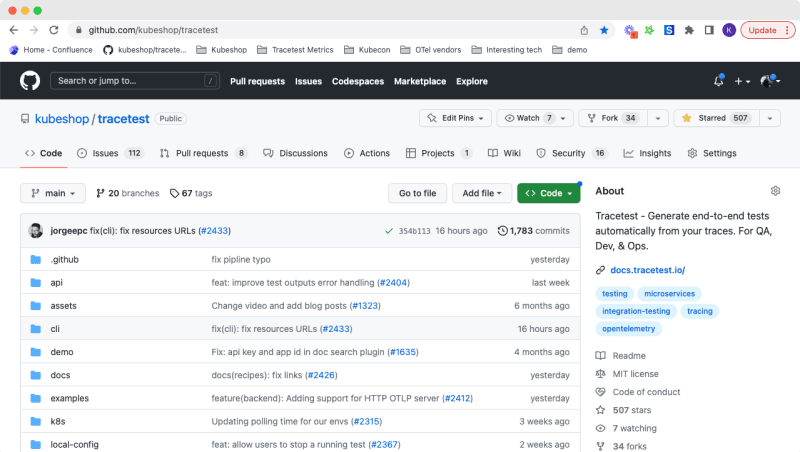

It’s been a rollercoaster ride with amazing highs. In the first year, Tracetest reached 500 stars and integrated with 9 different products, including leading tracing providers like Datadog and Lightstep. Let’s celebrate the year, and welcome the next with open arms. Here’s our story of how Tracetest was born and the challenges we faced in our first year.

Building the Product from Scratch

First, what is Tracetest? Tracetest is a testing tool that leverages the data in a distributed trace to enable integration, system, and end-to-end tests to be created in a fraction of the time. End-to-end tests are typically difficult to write. They can take 3 to 10 days to build across a complex distributed system. With Tracetest you can build the same test in 20 minutes. Counting 8-hour working days, that is roughly 72 to 240 times faster. Seriously!

How? We use a new test type called trace-based testing. Most of the time and complexity involved in building an end-to-end test goes into adding code to provide visibility into the systems under test. You have to understand the complete flow, set up connections and permissions to the various data stores you want to inspect, and find ways to introspect components such as microservices and key infrastructure, so you can confirm that what you believe is happening actually is. Creating the actual assertions, such as “status code should equal 200”, is a trivial part of the work.

With trace-based testing and Tracetest, the observability of the distributed system under test is provided by the distributed trace you have already instrumented your system to provide. Tracetest can “see” not only the response from API calls to your application, but the full flow and each system involved as documented by the captured distributed trace. With that data, the work to build a test is to simply decide the assertions you want to add.

Tracetest is open source, MIT licensed, and the first MVP release occurred April 26th, 2022. Let's look at some of the challenges and questions faced in this first year, and how we addressed them.

Building a Fully Remote Team

My first two startups were bootstrapped. On the first, I did the initial development alone, and on the second it was only myself and two part-time co-founders. It was a great approach at the time, but I believe it is less applicable today. More skill sets are needed to develop a system, including back end, front end, UX, and product management, and it is harder for a small team to cover all of them. There is also a higher level of expectation in the market - we are used to lovely products that are intuitive to use, and it is hard for a generalist to deliver that level of polish.

There is also a time-to-market constraint, as good ideas are quickly copied by well-funded potential competitors. Tracetest is fortunate enough to be an incubator project under Kubeshop, which affords us the luxury of recruiting a full development team.

We began Tracetest last year with resources that were already on staff at Kubeshop, “borrowing” three developers based in Europe. Jasmin, Povilas, and Mahmoud got the project off the ground and released the first MVP in only 3 months. Working with a European-based development team while acting as the product manager was hard, however, as I was based in Germantown, TN, in the Central time zone. With a 6 to 7 hour time difference, waking up at 4 or 5 am most days to get some overlap was just not sustainable.

We decided to try to recruit a development team in the Americas. While I had worked with remote developers in Europe before and had seen both the advantages and disadvantages, I did not have experience hiring and working with a team in Latin America. With the help of our recruiter, Julian at Aura Recruit, we were able to build a team in just 2 months. This team is a pleasure to work with - Sebastian, Matheus, Oscar, Jorge, and Daniel!

They are mature, focused on the product in addition to the technology, and are each a leader in their own right. Each acts as a product manager and as a developer relations person, while still holding down their “day job” of being a back-end or front-end developer. If you have ever wondered about hiring a team in the Americas, I can highly recommend it.

Developing Software Based on a New Concept

Testing tools are hardly new. Testing tools that execute against a back-end application have existed since the 1990s. They typically involve defining a method to trigger a test, perhaps hitting an API, and then creating assertions against the response data.

Trace-based testing brings some entirely new challenges, as you are asserting not only against the response data, but against the distributed trace. The trace is a tree-like structure, with activities branching off from the main call.

One of the main challenges we had was how to write assertions against this tree, as it is not flat like simple response data. We came up with a solution that will be familiar to front-end developers - using a selector. We modeled our selectors on the CSS selector language, and the assertions in Tracetest, called test specs, have two components:

- A selector specifying what span or spans to apply the assertions against.

- One or more assertions to apply against any of the spans matching the selector.

This decision opened up some very powerful assertions. In one test spec, we can specify that any gRPC call in the system should return a status code of zero. This single check applies across every call involved in the trace. It is also self-maintaining, as it will test any new gRPC calls that are added into the flow for that operation.
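As a rough sketch, a test spec like the one just described might look like the fragment below. The selector attributes are assumptions: `rpc.system` and `rpc.grpc.status_code` follow the OpenTelemetry semantic conventions for RPC spans, and the `rpc` span type is assumed as well, so your instrumentation may emit different names.

```yaml
specs:
  # Assumed selector: matches every span that is marked as a gRPC call.
  - selector: span[tracetest.span.type="rpc" rpc.system="grpc"]
    assertions:
      # A gRPC status code of 0 means OK.
      - attr:rpc.grpc.status_code = 0
```

Because the selector matches on attributes rather than on a specific span name, any gRPC span added to the trace later is picked up by the same check.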

Another decision we faced this year was how to write the tests. We knew we wanted a graphical interface to see the trace and build tests from it. We also knew we needed a way to express the test in code so it could be versioned and used in a CI/CD/GitOps flow. We debated using a language such as JavaScript or Go to express it, but these would not easily handle assertions that apply against multiple spans. The team decided on a YAML representation, and it was a great choice. It is clean, concise, and easily diffed in Git. We express the entire test in this format, and the test trigger and test specs are clearly shown.

    type: Test
    spec:
      id: phAZcrT4B
      name: Books list with availability
      description: Testing the books list and availability check
      trigger:
        type: http
        httpRequest:
          url: http://app:8080/books
          method: GET
          headers:
          - key: Content-Type
            value: application/json
      specs:
      - selector: span[tracetest.span.type="http" name="GET /books" http.target="/books" http.method="GET"]
        assertions:
        - attr:tracetest.span.duration < 500ms
      - selector: span[tracetest.span.type="general" name="Books List"]
        assertions:
        - attr:books.list.count = 3
      - selector: span[tracetest.span.type="http" name="GET /availability/:bookId" http.method="GET"]
        assertions:
        - attr:http.host = "availability:8080"
      - selector: span[tracetest.span.type="general" name="Availablity check"]
        assertions:
        - attr:isAvailable = "true"

A third challenge was the user interface. With a trace-based test, there is a lot going on! In addition to the normal flows in a test tool, such as listing tests and test runs, defining a test, and seeing the response with assertions applied against it, we had an entire trace to show and to let users create test specs against. We have kept Olly, our UX designer, busy. We opted to create our initial versions ‘wrong’, accepting that we needed to see and use the interface before we could hope to get an elegant flow. It has taken multiple tries, but we now have a version that ‘flows’ as you create tests and view the results.

UX - April 26th, 2022 - v0.1 MVP

UX - August 26th, 2022 - v0.6

UX - April 26th, 2023 - v0.11

Getting the Word Out

New concepts are, by definition, new. There is no existing model, and potential users have no prior experience on which to base an understanding of the new concept. My previous startup, which we began in 2008 and sold in 2016, was named CrossBrowserTesting.com. As you may imagine, it was used to do cross-browser testing, providing the ability to do live testing, take screenshots, and run automated Selenium tests across a wide range of browsers and devices. It was simple to explain, and with several competitors in the marketplace there was a general understanding of what to expect from the product.

Tracetest is different. While the name is, once again, very direct, what exactly does it mean to test based on a trace? On top of this, distributed tracing, upon which it is based, is a newer concept that many teams have not been exposed to. As a result, we need to spend more energy explaining the problem space and, in particular, our approach to solving it. Personally, it has pushed me to develop new skills, as explaining and promoting the project fell to me for most of the year.

In my previous startup, we wrote only 20 blog posts in the first 8 years. At Tracetest we have written 38 in the past year alone, a pace of about three per month. We have also been producing videos, which was a real challenge for me as a newcomer to the medium and resulted in many, many retakes. Like any skill, practice makes you better, and while I will not be winning any Emmys, time on task has made the process easier. We are fortunate to have added a professional developer relations expert to the team, Adnan, so the pace and quality of the work have increased.

Conferences and speaking engagements are also new for me personally, and we have been to several this year. Adnan recently spoke at FOSDEM, and we were both present at KubeCon + CloudNativeCon Europe in Amsterdam just last week. It is very motivating to present the project in person and receive direct feedback from real experts in the distributed, cloud-native world. We were overwhelmed by the response and the crowds at the booth last week, and are reenergized to keep paving the way to making trace-based testing mainstream.

Wrapping up the First Year

Wow. It has been a busy, exciting year. As we were demoing Tracetest to hundreds of interested people last week at KubeCon, I was proud of the work the team had done. Tracetest works, and it will revolutionize testing for distributed systems. It flows well and is a pleasure to use. We have had thousands of downloads, and https://github.com/kubeshop/tracetest reached 500 stars on Wednesday at the conference! Getting to 500 stars before the one-year anniversary was a goal, and we were excited to reach it.

There is still work to be done. We are focused on adding new integrations so the tool works with any distributed tracing solution, whether vendor based, such as Datadog, New Relic, Elastic, or Lightstep, or open source, such as OpenSearch or Jaeger. We are also excited to begin work on some new capabilities suggested by our users, such as the ability to export application behavior as policies for runtime, or to define common trace "patterns / issues / problems".

Like what we are doing and want to help make our second year even better than the first? Give us a star on GitHub, create an issue there with your thoughts on the direction to take the product, or talk to us on our Discord channel. Looking forward to year #2!