KubeCon + CloudNativeCon Europe 2023, held in Amsterdam from April 17-21, was the most highly anticipated Kubernetes and cloud-native conference this spring. That might also be the reason it sold out! Thousands of developers, engineers, architects, and executives attended the conference to discuss the latest trends, best practices, and innovations in cloud-native computing.

In this blog post, I'll highlight some of the key takeaways from the conference, including updates from Tracetest, a leading cloud-native testing platform.

Tracetest's Presence at KubeCon + CloudNativeCon 2023

What is Tracetest?

It’s an open-source test tool designed for any application using a distributed, cloud-native architecture. Tracetest uses the visibility exposed by your existing instrumentation, namely distributed tracing, to allow you to build integration, system, and end-to-end tests that can reach across the entire system. This technique, known as trace-based testing, enables you to assert the proper behavior of all the different microservices, lambda functions, asynchronous processes, and infrastructure components involved in a modern application. It simplifies your full system tests, allowing you to create tests in orders of magnitude less time, increase your test coverage, and improve quality in today's increasingly fast-paced development and deployment cycles.

The CNCF community's reception of this entirely new way of testing cloud-native apps was inspiring. Tracetest founder Ken and I had hundreds of interactions with visitors, sharing knowledge and answering questions about Tracetest. The first day was so busy that our first opportunity to grab lunch came at 3:30 in the afternoon! We continued into the evening with the “Booth Crawl” event. It was a VERY long but rewarding day!

Thursday and Friday saw even more people, with deep conversations with both current and prospective users, companies we integrate with, and a couple of recorded interviews, including one with Henrik Rexed of Is it Observable. We continued to discuss and show the Tracetest project up until the show floor closed Friday afternoon.

We hope our presence at KubeCon helped build a more cohesive Tracetest community. Check out our Twitter feed for photos of the team with numerous integration partners. The biggest highlights were contributions from our integration partners and community members, which will be discussed in the next section.

Best new issues!

After an awesome discussion with us, Jordan from Atlassian opened this issue.

Quote: I'm looking to use Tracetest as a way to analyze our traces and determine trace data quality. As part of doing this, I think it would be super useful to have the ability to not just assert the value of a trace attribute, but also to be able to verify that certain attributes exist.

For example: Writing an assertion to verify that `parentId` is set for a span attribute.

An amazing idea and an awesome initiative. We’re thrilled that Jordan opened the issue and immediately started a discussion about how to do this today, before we begin working on official support.

Sebastian, from the Tracetest back-end team, answered how it can be done right now.

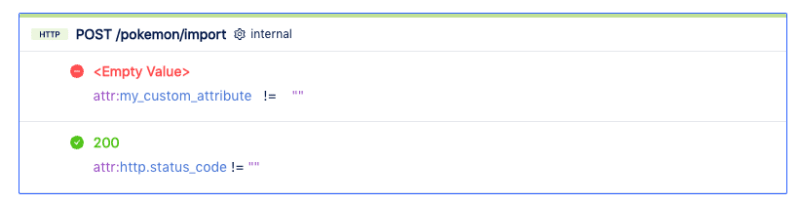

Quote: A thing to note about the assertions is that if an attribute doesn't exist, it's flagged as failed, regardless of the comparison being made. For example:

If you don't care about the values, just that the attribute exists, you can assert that the value IS NOT something that you know it shouldn't be. In this example, I took a custom attribute and asserted that it DOES NOT EQUAL to `""` (literally two quotes). The spans I'm asserting don't contain that attribute, so the test fails.

In the second assertion, I check that the http status code DOES NOT EQUAL to `""` (literally two quotes) again. In this case the attribute exists, and it has an actual value of `200`. Since `200` does not equal `""`, the assertion passes.
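To make the workaround easier to picture, here is a minimal Python sketch of the semantics Sebastian describes. It is only a model of the behavior, not Tracetest's implementation or API: the `assert_attribute` and `not_equal_to_empty` helpers and the attribute names are hypothetical, chosen for illustration.

```python
from typing import Any, Callable, Mapping


def assert_attribute(
    span: Mapping[str, Any],
    name: str,
    compare: Callable[[Any], bool],
) -> bool:
    """Hypothetical model: a missing attribute always fails the assertion."""
    if name not in span:
        # The attribute does not exist, so the comparison is never evaluated.
        return False
    return compare(span[name])


def not_equal_to_empty(value: Any) -> bool:
    """Stand-in for a 'does not equal two quotes' comparison."""
    return str(value) != ""


# A span that has an HTTP status code but no custom attribute.
span = {"http.status_code": 200}

# Fails: the attribute is missing, regardless of the comparison used.
custom_attribute_exists = assert_attribute(span, "custom.attribute", not_equal_to_empty)

# Passes: the attribute exists and 200 is not an empty string.
status_code_exists = assert_attribute(span, "http.status_code", not_equal_to_empty)

print(custom_attribute_exists, status_code_exists)
```

Because the missing-attribute check happens first, any comparison that is true for every real value works as an existence check.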

While this workaround covers the use case for now, we are actively working on official support for attribute existence checks.

The second awesome issue came from Mark at ING. He had a great idea and opened this issue related to policy rulesets.

Quote: I would like to be able to export a policy ruleset based on a successful test in order to use that ruleset as input for anomaly detection and runtime enforcement in the production environment. For example as Falco ruleset or OPA policies.

Because a defined test set is probably either a preferred/allowed or forced fail/denied flow of your application, the output of any test can be used as an application dependency map or behavior map, this can be used in your production environment as security/anomaly rules of allowed flows or anomalous behavior, therefore being able to block any activity that has not been observed in your test environment. Creating a more secure deployment defined already from your build/test flow (shift-left).

Mark’s idea is rooted in finding abnormal behavior in APIs. If the API test generates a particular distributed trace every time it runs, it would be amazing to have Tracetest define a happy path. It could then generate a policy that looks for abnormalities and strange behavior.
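As a rough illustration of the idea, and not something Tracetest does today, the Python sketch below derives an allow-list of service-to-service calls from the trace of a passing test and flags any call in a production trace that the test never exercised. The `Span` shape, the service names, and both helper functions are assumptions made up for this example; a real export would need to target a format such as Falco rules or OPA policies.

```python
from typing import Iterable, NamedTuple, Optional, Set, Tuple


class Span(NamedTuple):
    """Hypothetical, simplified span: just enough to rebuild the call graph."""
    span_id: str
    parent_id: Optional[str]
    service: str


Edge = Tuple[str, str]  # (calling service, called service)


def service_edges(spans: Iterable[Span]) -> Set[Edge]:
    """Collect the cross-service calls found in one trace."""
    spans = list(spans)
    service_by_id = {s.span_id: s.service for s in spans}
    edges: Set[Edge] = set()
    for s in spans:
        parent_service = service_by_id.get(s.parent_id) if s.parent_id else None
        if parent_service is not None and parent_service != s.service:
            edges.add((parent_service, s.service))
    return edges


def unexpected_edges(allowed: Set[Edge], observed: Set[Edge]) -> Set[Edge]:
    """Calls seen in production that the passing test never produced."""
    return observed - allowed


# Trace from a successful test run: the "happy path".
test_trace = [
    Span("1", None, "api"),
    Span("2", "1", "orders"),
    Span("3", "2", "database"),
]

# Trace observed in production, containing a call the test never made.
production_trace = [
    Span("a", None, "api"),
    Span("b", "a", "orders"),
    Span("c", "b", "payments"),
]

allowed = service_edges(test_trace)
violations = unexpected_edges(allowed, service_edges(production_trace))
print(violations)  # {('orders', 'payments')}
```

The allow-list here is only a set of edges; turning it into enforceable rules is exactly the part the issue asks Tracetest to explore.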

Key Themes and Announcements from KubeCon and CloudNativeCon 2023

KubeCon and CloudNativeCon 2023 was all about Kubernetes and cloud-native tech just getting bigger and more popular. They talked about what's hot right now and how to do things right when it comes to security, observability, and scalability.

Oh, and they also said it's really important to use open-source software for cloud-native computing. Lots of speakers talked about how important it is to work together and get everyone involved in development, no matter what organization or industry they're in.

We focused on the observability ecosystem and took the time to interact with our friends from Lightstep, New Relic, Honeycomb, Dynatrace, Instana, and many more. With that in mind, keep an eye out for more integrations coming to Tracetest!

The Future of Cloud-Native Computing

KubeCon and CloudNativeCon 2023 was a huge success! It's clear that cloud-native computing is the future of computing. Kubernetes and other cloud-native technologies are important for organizations to build and scale modern applications. And with such a cool and supportive community, we can expect even more amazing developments and ideas in the years to come.

But as cloud-native computing evolves, testing and optimization are still critical. Tracetest made it clear at the event how important testing and optimization are in this rapidly evolving ecosystem. Looking ahead, we're going to see even more growth and innovation in the cloud-native ecosystem. And that's all thanks to the incredible developers, engineers, and industry leaders who are all about building a better way of testing cloud-native apps. So exciting!

Conclusion

Alright folks, let's wrap it up! KubeCon and CloudNativeCon 2023 was a killer event that brought together tons of cloud-native computing fanatics from all over the globe. We at Tracetest were there too, showing attendees how to test and optimize their Kubernetes-based and cloud-native apps with trace-based testing. We can't wait to help make Tracetest a staple in the CNCF testing tool belt.

Like what we are doing and want to help make cloud-native testing better? Give us a star on GitHub, create an issue there with your thoughts on the direction to take the product, or talk to us on our Discord channel.