"I want to put my code in a Google Cloud function and send my messages to a message broker".

Picture a serverless application deployed in the cloud provider's network that needs to communicate with an infrastructure application deployed in a private network. It's not that easy to set up and maintain!

Serverless and infrastructure, two worlds initially designed to not work together?

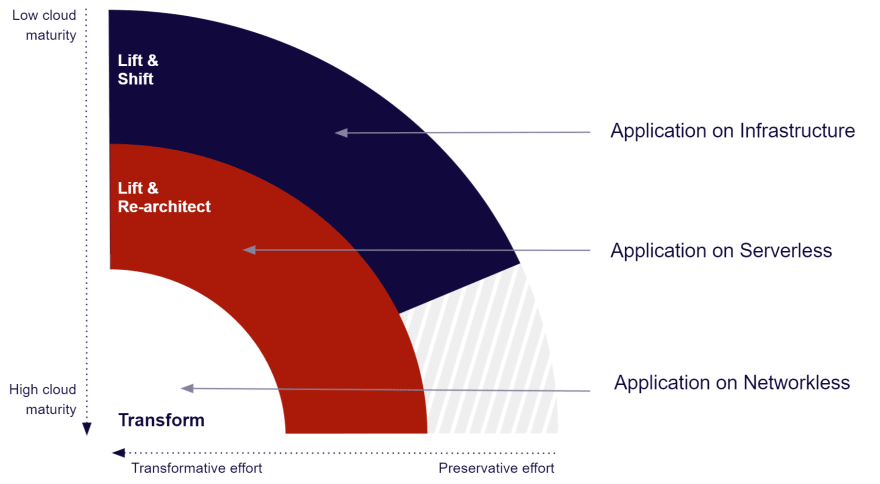

Many customers on Google Cloud use Serverless services to get away from server management, network infrastructure management, and some layers of security. This is a good approach, but not always the best solution. When we start from scratch in the Cloud, choosing a service that runs on the Google Cloud network is indeed the best approach. But when we come from the world of private infrastructure and want the two to communicate, we quickly realize how different they are. So when a Serverless solution requires a private network, one of the biggest challenges begins: making the hidden world (Networkless) communicate with the revealed world (the infrastructure).

Cloud computing services

We often group public cloud services into five parts: Infrastructure (IaaS), Container (CaaS), Platform (PaaS), Function (FaaS), and Software as a Service (SaaS).

Public cloud services built on these models always try to stick to their concepts, but customers do not always understand those concepts. Some users try to replicate their legacy setup on these services and very often find themselves facing security and network challenges that they did not anticipate.

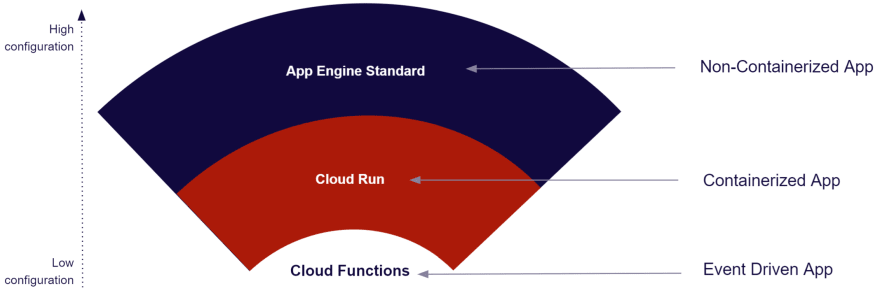

To help customers set up communication between these different levels of cloud computing, cloud providers have developed specific services to establish the link. For example, Google Cloud provides the Serverless VPC Access connector to allow Cloud Functions (FaaS), App Engine (PaaS) and Cloud Run (PaaS) to communicate privately with Kubernetes Engine containers (CaaS) and Compute Engine instances (IaaS). You will find similar services on Amazon Web Services with VPC Endpoint Services and on Microsoft Azure with Virtual Network service endpoints.
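As a rough illustration of when such a connector comes into play, here is a minimal sketch using only the Python standard library. It checks whether a target address (for example, a message broker) is a private one, and builds the fully qualified connector name that Google Cloud expects. The function names, project ID, region and connector name are hypothetical and only serve the example.

```python
import ipaddress


def needs_private_path(target_ip: str) -> bool:
    """Return True when the address is not publicly routable.

    A serverless service running on the provider's network cannot
    reach such an address without a link into the private network.
    """
    return ipaddress.ip_address(target_ip).is_private


def connector_resource_name(project: str, region: str, connector: str) -> str:
    """Build the fully qualified name of a Serverless VPC Access connector."""
    return f"projects/{project}/locations/{region}/connectors/{connector}"


# Hypothetical values: a broker on an internal IP inside a VPC.
broker_ip = "10.128.0.12"
if needs_private_path(broker_ip):
    print(connector_resource_name("my-project", "europe-west1", "broker-connector"))
```

The connector itself still has to be created, sized, and attached to a subnet in your VPC, which is exactly the network work the rest of this post is about.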

If you are migrating to the cloud to move away from infrastructure management and your business uses only cloud functions but needs to communicate privately with a legacy application deployed in a virtual private network (VPC), you're going to have to maintain, operate and secure a private network over the long term, and it's not that simple. The network is an important part of an infrastructure.

If you really want to move away from infrastructure management, you will also need to be Networkless.

Serverless vs Networkless

What is Serverless?

Serverless computing is a cloud computing execution model in which the cloud provider allocates machine resources on demand, taking care of the servers on behalf of their customers. [1]

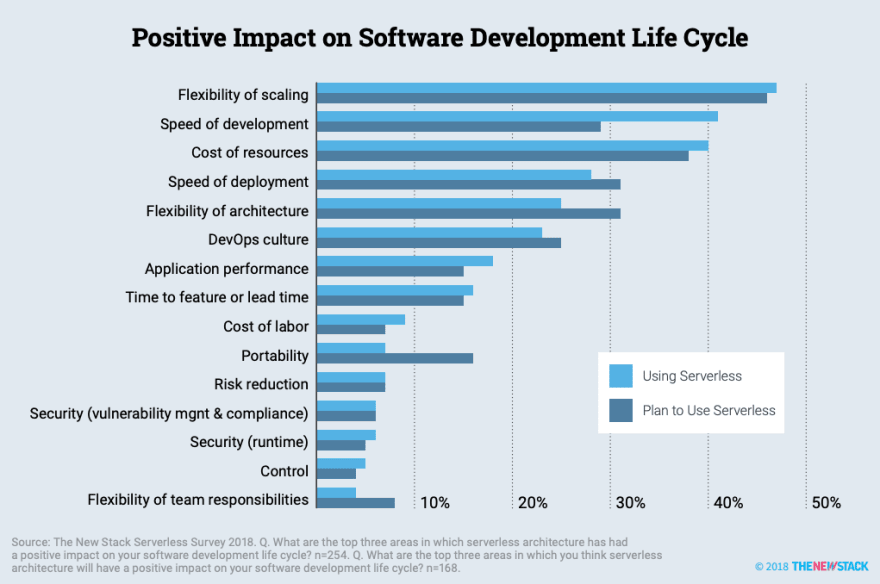

Serverless has positive impacts on the software development lifecycle while simplifying the developer experience by eliminating server management. Serverless lets you focus on business development.

Google Cloud’s serverless platform lets you build, develop, and deploy functions and applications, as source code or containers, while simplifying the developer experience by eliminating all infrastructure management. [2]

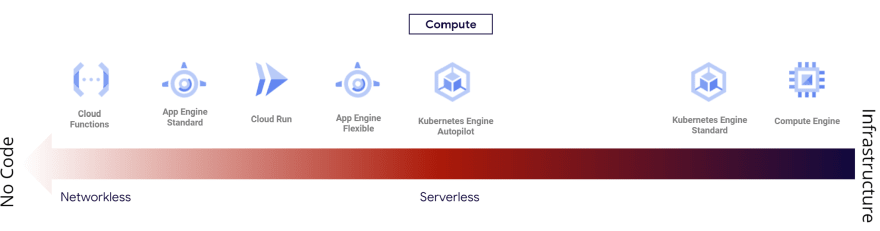

Most Google Cloud serverless services, such as Cloud Functions, App Engine standard or Cloud Run, eliminate infrastructure management, but not all of them do.

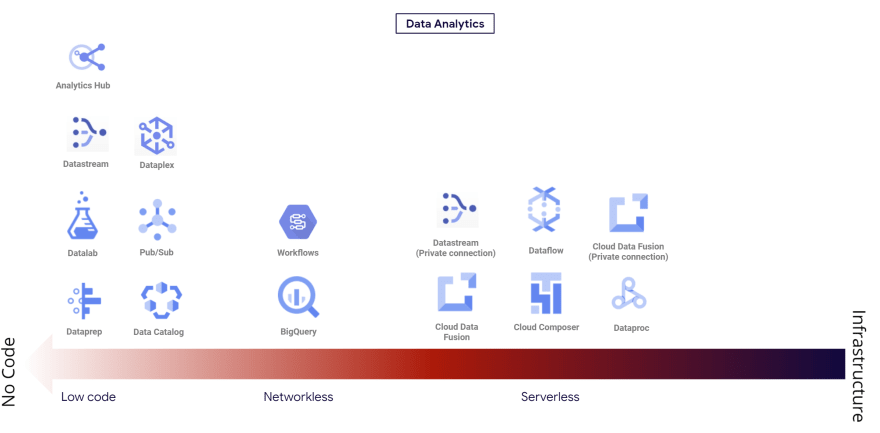

Cloud Dataflow, for example, is presented as a serverless service:

Dataflow: Unified stream and batch data processing that's serverless, fast, and cost-effective. [3]

A new or not yet mature customer on Google Cloud will think that all the infrastructure is managed by the cloud provider. This is the case for server management, but not always for the network. I see many customers who use serverless for computing (Cloud Functions, Cloud Run, etc.) and Cloud Dataflow for data processing without knowing that their jobs run in the default network (which is not recommended for production). In Google Cloud, network configuration is always presented as optional.

This is also the case for Cloud Composer, Cloud Dataproc, Google Kubernetes Engine Autopilot and App Engine flexible. Google Cloud manages the servers for you, but network management remains your responsibility. These services are Serverless, but not Networkless.
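For the Dataflow case, one way to catch the "optional" network setting before a job is launched is to inspect the pipeline arguments. The sketch below is only an assumption about how such a check could look: it treats a job as running in the default network when neither the `--network` nor the `--subnetwork` pipeline option is present. The helper name and the example values are hypothetical.

```python
from typing import Sequence


def uses_default_network(pipeline_args: Sequence[str]) -> bool:
    """Return True when no explicit network or subnetwork option is passed.

    Accepts both the "--option=value" and the "--option value" forms.
    """
    for arg in pipeline_args:
        option_name = arg.split("=", 1)[0]
        if option_name in ("--network", "--subnetwork"):
            return False
    return True


# Hypothetical job arguments with an explicit subnetwork.
args = [
    "--runner=DataflowRunner",
    "--region=europe-west1",
    "--subnetwork=regions/europe-west1/subnetworks/data-subnet",
]
if uses_default_network(args):
    print("Warning: this job will run in the default network")
```

A check like this could sit in a CI step or a launch script, so that the network choice becomes a deliberate decision rather than a silent default.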

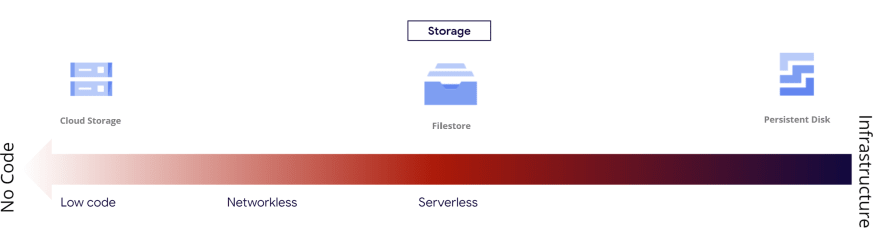

We can sort Google Cloud services into two categories:

- Services deployed on the Google Cloud network (networkless)

- Services deployed in your private network (infrastructure)

It can be illustrated like this:

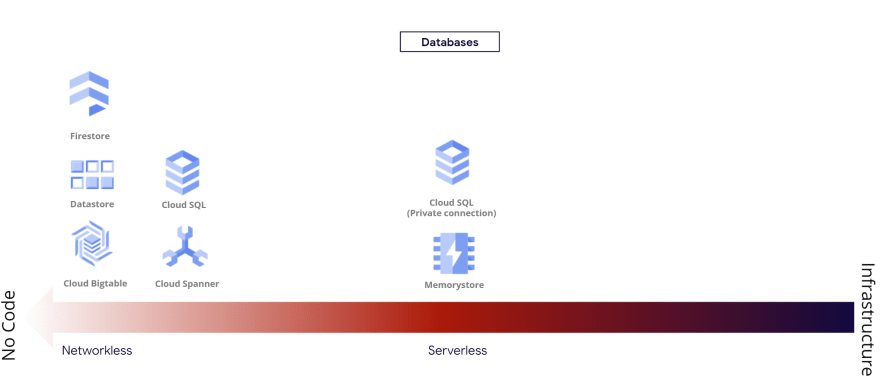

Notice that a service may move from one category to the other depending on its network configuration. Cloud SQL is a good example.
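The same idea can be written down as a small lookup. This is a sketch, not a reproduction of the diagram: the service lists only contain services named in this post, and the `private_ip` flag is a hypothetical stand-in for "the service is configured with a private network".

```python
from enum import Enum


class Placement(Enum):
    NETWORKLESS = "deployed on the Google Cloud network"
    INFRASTRUCTURE = "deployed in your private network"


# Services named in this post, grouped by where they run.
NETWORKLESS_SERVICES = {"Cloud Functions", "App Engine standard", "Cloud Run"}
PRIVATE_NETWORK_SERVICES = {
    "Compute Engine", "Kubernetes Engine", "Kubernetes Engine Autopilot",
    "Dataflow", "Cloud Composer", "Dataproc", "App Engine flexible",
}
# Services whose category depends on how they are configured.
CONFIG_DEPENDENT_SERVICES = {"Cloud SQL"}


def placement(service: str, private_ip: bool = False) -> Placement:
    """Return the category of a service, given its network configuration."""
    if service in NETWORKLESS_SERVICES:
        return Placement.NETWORKLESS
    if service in PRIVATE_NETWORK_SERVICES:
        return Placement.INFRASTRUCTURE
    if service in CONFIG_DEPENDENT_SERVICES:
        return Placement.INFRASTRUCTURE if private_ip else Placement.NETWORKLESS
    raise ValueError(f"Unknown service: {service}")


print(placement("Cloud SQL"))
print(placement("Cloud SQL", private_ip=True))
```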

From a security standpoint, it makes no sense to configure a private Cloud SQL instance if you are only using Cloud Functions or Cloud Run services. A public Cloud SQL instance already has two strong protections: Cloud IAM permissions and database credentials, in addition to authorized networks.
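To make this concrete, here is a minimal sketch of how a Cloud Functions or Cloud Run service typically reaches a Cloud SQL instance without any private network: through a Unix socket under `/cloudsql/`, named after the instance connection name (`project:region:instance`). The helper names, the environment variable names and the example values are assumptions made for the illustration; the actual database driver call is left out.

```python
import os
from typing import Dict, Mapping


def cloud_sql_socket_dir(project: str, region: str, instance: str) -> str:
    """Build the Unix socket directory for a Cloud SQL instance."""
    if not (project and region and instance):
        raise ValueError("project, region and instance must be non-empty")
    return f"/cloudsql/{project}:{region}:{instance}"


def connection_settings(socket_dir: str, env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Collect connection settings; database credentials come from the environment."""
    return {
        "host": socket_dir,          # socket path instead of an IP address
        "user": env["DB_USER"],      # hypothetical variable names
        "password": env["DB_PASS"],
        "dbname": env["DB_NAME"],
    }


# Hypothetical instance and credentials.
socket_dir = cloud_sql_socket_dir("my-project", "europe-west1", "orders-db")
settings = connection_settings(
    socket_dir, {"DB_USER": "app", "DB_PASS": "secret", "DB_NAME": "orders"}
)
print(settings["host"])
```

Access through this socket is granted by IAM on the service's identity, and the database still asks for its own credentials, which are the two protections mentioned above.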

Don't be intimidated by the word private. I remind you that you are on a public cloud... ;-)

Recommendations

If you're moving to the cloud and some apps you depend on need to stay on-premises or in a Google Virtual Private Cloud (VPC), migrate all your apps to Google Compute Engine or Google Kubernetes Engine, and limit yourself to the Serverless services that run in a private network. This design keeps the network isolated, which is a network security best practice.

So migrate your workloads to the right service with the right network.

If you are a new company (no legacy), choose only networkless services. Focus on your business and let Google Cloud manage the infrastructure for you.

I see a lot of new companies where developers building a new app on Google Cloud reuse their existing knowledge of open source tools and frameworks and deploy it on Google Cloud infrastructure. This strategy is not the best. If you leave the company, someone else will have to maintain your infrastructure. Infrastructure is there for companies with existing systems, and it comes at a cost!

For example, I saw a company set up a standard regional Kubernetes Engine cluster and deploy Apache Kafka, Elasticsearch, and MongoDB containers in addition to their frontend and backend applications.

If they had deployed this to avoid vendor lock-in (a motivation I personally disagree with), I wouldn't have taken it as an example.

For its business use case, this company could have used Cloud Pub/Sub, BigQuery, Firestore, Cloud Storage, and Cloud Run instead. It would have been less expensive and certainly less complex to maintain and secure!

So deploy your workloads to the right service with the right options.

That's all folks!

Thanks for reading this post :-)

Documentation

[1] https://en.wikipedia.org/wiki/Serverless_computing

[2] https://cloud.google.com/serverless

[3] https://cloud.google.com/dataflow