Rancher is a popular Kubernetes management platform that simplifies the deployment and management of Kubernetes clusters. Rancher offers built-in monitoring capabilities to help you gain insights into your cluster's performance and health. However, like any software, issues may arise. In this document, we'll explore one main problem encountered when upgrading a cluster related to Rancher, which is Cluster Agent is not connected and how to troubleshoot it.

Rancher Cluster Agent Issues

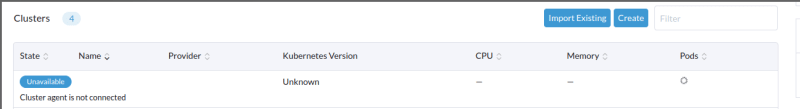

In this case, when trying to upgrade the cluster in Rancher to a newest version of kubernetes using terraform, the cluster enters to an unavailable state and our upgrade ends with error, so we must solve this issue to continue.

The error was that the cluster_agent component was not connected.

1. Troubleshooting Steps

In order to resolve the issue we passed several steps of troubleshooting:

1.1. Verify that the Rancher cattle-cluster-agent deployment in your cluster is running:

kubectl get deployments cattle-cluster_agent -namespace cattle-system -o yaml

The result shows that the pods in the deployment cattle-cluster agent were correctly in the state running 😟 .

1.2. Lets now check the logs of the cluster agent pods:

kubectl logs cattle-cluster-agent-748cc64689-kr6k8 -n cattle-system

INFO: Using resolv.conf: search cattle-system.svc.cluster.local svc.cluster.local cluster.local nameserver xxxxxxxx options ndots:5

INFO: https://rancher.k3s.cn/ping is accessible

INFO: rancher.k3s.cn resolves to xxxxxxxx

INFO: Value from https://rancher.k3s.cn/v3/settings/cacerts is an x509 certificate

time="2023-09-21T10:51:09Z" level=info msg="Listening on /tmp/log.sock"

time="2023-09-21T10:51:09Z" level=info msg="Rancher agent version v2.6.13 is starting"

time="2023-09-21T10:51:09Z" level=info msg="Connecting to wss://rancher.k3s.cn/v3/connect/register with token starting with xxxxxxxxxxxx"

time="2023-09-21T10:51:09Z" level=info msg="Connecting to proxy" url="wss://rancher.k3s.cn/v3/connect/register"

time="2023-09-21T10:51:09Z" level=info msg="Starting /v1, Kind=Service controller"

So, the logs does not really show a significants informations to resolve the issue 😟.

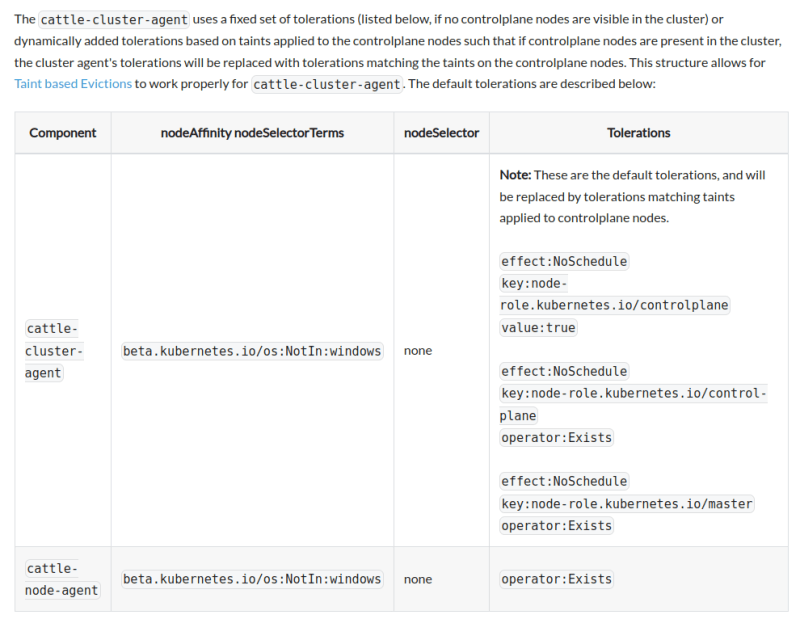

1.3. We must understand how the cattle-cluster-agent works and how it must be deployed on the cluster. To find that, we check the documentation on Rancher and we found that the cattle-cluster-agent deployment uses a set of tolerations to be deployed on master node and not a worker node, these tolerations on cattle-monitoring-system deployment will dynamically trigger and take the same tolerations based on taints used on master nodes of the cluster and it must be deployed on these master nodes. Otherwise the cattle-cluster-agent deployment will be deployed with a default tolerations:

1.4. If we check the pods of our cattle-cluster-agent deployement:

kubectl get pods -n cattle-system -o wide

We see that cattle-cluster-agent is deployed on the worker nodes not on the master nodes and if we check the toleration present on the deployment, we found the default tolerations, we talked about in 1.3, and not the tolerations based on taints of our master nodes.

The tolerations on the deployment:

- effect: NoSchedule

key: node-role.kubernetes.io/controlplane

value: "true"

- effect: NoSchedule

key: node-role.kubernetes.io/control-plane

operator: Exists

- effect: NoSchedule

key: node-role.kubernetes.io/master

operator: Exists

Tolerations based on taints present on the master nodes:

- effect: NoSchedule

key: node-role.kubernetes.io/controlplane

value: "true"

- effect: NoExecute

key: node-role.kubernetes.io/etcd

value: "true"

So this is why our cattle-cluster-agent deployment is considered as unconnected, when we modify the cluster for the upgrade, the cluster-agent is not triggered dynamically with the correct tolerations to be deployed on the master nodes 👌.

2. Solutions

To resolve this problem, we consider two suggestions:

2.1. Now we know that the cattle-cluster-agent deployment must trigger on the taints present on the master nodes. To do that, we apply a new taint on all the master nodes (in our case three master nodes):

kubectl taint nodes master-km01 test=true:NoSchedule

kubectl taint nodes master-km02 test=true:NoSchedule

kubectl taint nodes master-km03 test=true:NoSchedule

wait a few seconds and we check the cattle-cluster-agent again, we see that the deployment is now trigger on the tolerations of the master nodes and it is running on the them:

- effect: NoSchedule

key: test

value: "true"

- effect: NoSchedule

key: node-role.kubernetes.io/controlplane

value: "true"

- effect: NoExecute

key: node-role.kubernetes.io/etcd

value: "true"

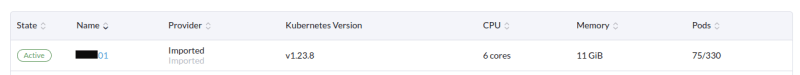

And the cluster is now on the active state:

After that we can remove the added taint with:

kubectl taint nodes master-km01 test=true:NoSchedule-

kubectl taint nodes master-km02 test=true:NoSchedule-

kubectl taint nodes master-km03 test=true:NoSchedule-

2.2. Before modifying the cluster for upgrade, make sure to ignore the import manifest of the cattle-cluster-agent deployment so it will not be re-installed. In our case using terraform, we have to comment the line on terraform code which is responsible of the import manifest and apply the terraform after:

#rke_addons =https://url_to_your_manifest/v3/import/xxxxxxxxxxx_c-jpg8f.yaml

3. Conclusion

Troubleshooting Rancher Cluster Agent issue can be challenging, but by following the steps outlined in this document, you can identify and resolve common problems that may arise. If the issues persist, consider consulting Rancher's official documentation or seeking help from the Rancher community for more specific guidance.

Thanks for reading! I’m Bilal, Cloud DevOps consultant at Stack Labs.

If you want to join an enthusiast Infra cloud team, please contact us.