This is a Plain English Papers summary of a research paper called Direct Nash Optimization: Teaching Language Models to Self-Improve with General Preferences.

Overview

- This paper introduces a novel approach called "Direct Nash Optimization" (DNO) for teaching language models to self-improve with general preferences.

- The key idea is to formulate training as a game: a preference model, which plays the role that a reward model plays in RLHF, compares pairs of outputs, and the language model is trained to generate outputs that win those comparisons.

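- This approach is designed to be more flexible and scalable than existing techniques like Reinforcement Learning from Human Feedback (RLHF), which typically train a reward model on human preference comparisons and then optimize the language model against the single scalar score it assigns to each output.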

Plain English Explanation

The researchers have developed a new method called "Direct Nash Optimization" (DNO) to help language models like GPT-3 or GPT-4 get better at tasks over time.

The basic idea is to set up a "game" that is judged by another model, called a "reward model" here. The judge's job is to compare the language model's outputs and decide which one is better, based on some general preferences or goals. The language model then tries to generate outputs that win these comparisons.

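This is different from standard Reinforcement Learning from Human Feedback (RLHF), which first trains a reward model to give each output a single score and then optimizes the language model against that score. Because DNO works directly with comparisons between outputs, it can handle more general preferences, including ones a single score cannot express (for example, a cycle in which A is preferred to B, B to C, and C to A). This makes the system more flexible and scalable.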

The key advantage of this approach is that it allows the language model to keep improving itself, without needing constant human oversight or intervention. The language model essentially learns to "self-improve" by optimizing for the reward model's preferences.

Technical Explanation

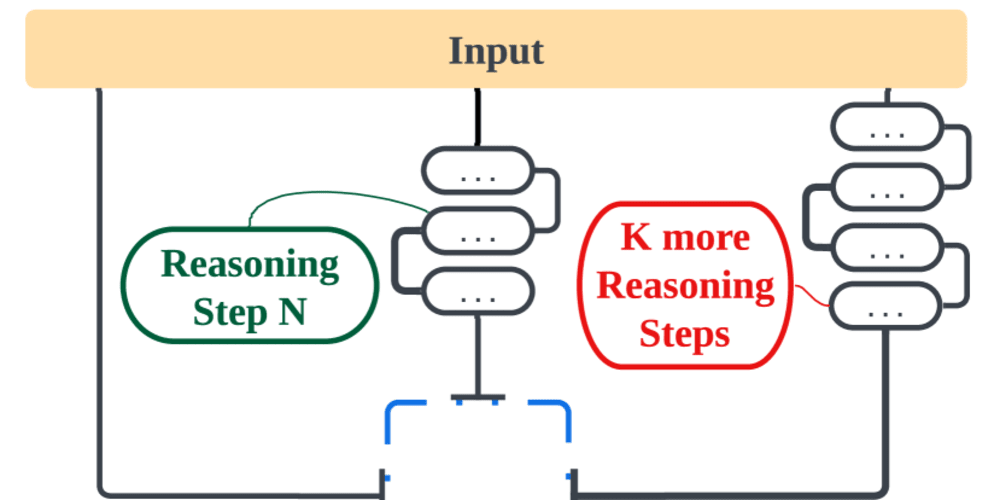

The paper introduces a new training framework called "Direct Nash Optimization" (DNO) that aims to teach language models to self-improve according to general preferences, rather than by maximizing a scalar reward.

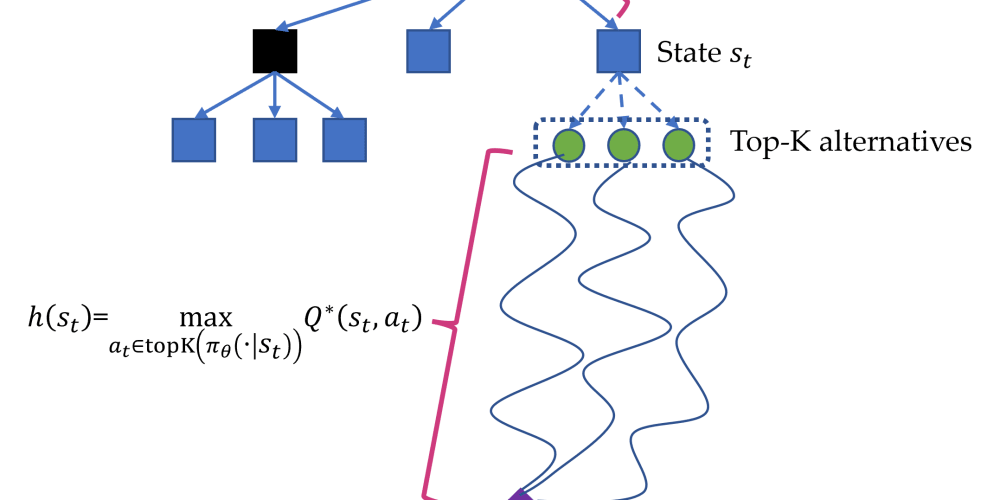

The core idea is to formulate training as a two-player game between policies (candidate versions of the language model), with the reward model acting as a judge that says which of two outputs is preferred. The Nash equilibrium of this game is a policy whose outputs are, on average, preferred at least as often as those of any competing policy. Training toward that equilibrium leads the language model to generate outputs that align with the general preferences encoded in the judge.
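To make the Nash equilibrium idea concrete, here is a minimal sketch. It assumes the preferences are available as a small table of pairwise win probabilities between candidate responses. The table `CYCLIC`, the function names, and the multiplicative-weights self-play update are all illustrative choices, not the paper's algorithm. The cyclic table has no single best response, so no scalar score can rank it, yet the game still has a well-defined equilibrium.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def nash_by_self_play(pref, steps=5000, lr=0.05):
    """Approximate the equilibrium policy of a symmetric preference game.

    pref[i][j] is the assumed probability that response i is preferred
    to response j, with pref[i][j] + pref[j][i] == 1.
    The policy plays against itself with multiplicative-weights updates;
    the time-averaged policy is returned.
    """
    n = len(pref)
    logits = [0.1 * i for i in range(n)]  # deliberately non-uniform start
    avg = [0.0] * n
    for _ in range(steps):
        policy = softmax(logits)
        avg = [a + p / steps for a, p in zip(avg, policy)]
        # Expected win rate of each response against the current policy.
        win = [sum(pref[i][j] * policy[j] for j in range(n)) for i in range(n)]
        # Upweight responses that beat the current policy more than half the time.
        logits = [l + lr * (w - 0.5) for l, w in zip(logits, win)]
    return avg


# Hypothetical cyclic preferences: A beats B, B beats C, C beats A.
CYCLIC = [
    [0.5, 1.0, 0.0],
    [0.0, 0.5, 1.0],
    [1.0, 0.0, 0.5],
]

equilibrium = nash_by_self_play(CYCLIC)
```

For this cyclic table the averaged policy comes out close to uniform, meaning the equilibrium mixes over all three responses. Replacing the table with one where a single response wins most comparisons should instead concentrate the policy on that response.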

The authors show that this approach has several advantages over existing techniques like Reinforcement Learning from Human Feedback (RLHF). First, working with pairwise comparisons allows more general preferences than a scalar reward function can represent. Second, the language model can keep improving itself through this optimization process, without needing constant human oversight.
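The self-improvement loop can be sketched on a toy problem. The example below is an illustration under stated assumptions, not the paper's implementation: a softmax over four candidate responses stands in for the language model, hidden `quality` scores stand in for the preference judge, and the update is a DPO-style contrastive step against the previous round's policy. All names and hyperparameters are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def self_improve(quality, rounds=5, pairs_per_round=200,
                 inner_steps=20, beta=1.0, lr=0.5, seed=0):
    """Return the probability of the best response after each round."""
    rng = random.Random(seed)
    n = len(quality)
    best = max(range(n), key=lambda i: quality[i])
    logits = [0.0] * n  # toy "language model": softmax over n responses
    history = []
    for _round in range(rounds):
        ref = list(logits)  # freeze the current policy as the reference
        probs = softmax(ref)
        # 1) Sample response pairs from the current policy (on-policy data).
        batch = []
        for _pair in range(pairs_per_round):
            a, b = rng.choices(range(n), weights=probs, k=2)
            if a != b:
                # 2) The judge (hypothetical oracle) labels winner and loser.
                batch.append((a, b) if quality[a] > quality[b] else (b, a))
        # 3) Gradient ascent on log sigmoid(margin), a DPO-style objective.
        for _step in range(inner_steps):
            grad = [0.0] * n
            for win, lose in batch:
                margin = beta * ((logits[win] - logits[lose])
                                 - (ref[win] - ref[lose]))
                g = beta * (1.0 - sigmoid(margin))
                grad[win] += g
                grad[lose] -= g
            scale = lr / max(len(batch), 1)
            logits = [l + scale * g for l, g in zip(logits, grad)]
        history.append(softmax(logits)[best])
    return history


# Hypothetical hidden quality of four candidate responses.
QUALITY = [0.1, 0.4, 0.9, 0.3]
history = self_improve(QUALITY)
```

Each round reuses the model's own samples as training data, which is the sense in which the loop is "self-improving": no new human labels are needed, only the judge's comparisons. In this toy setting the probability assigned to the highest-quality response rises well above its starting value of 0.25.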

Theoretically, the authors provide convergence guarantees for this approach under certain assumptions, and demonstrate its robustness to noise in the reward model.

Critical Analysis

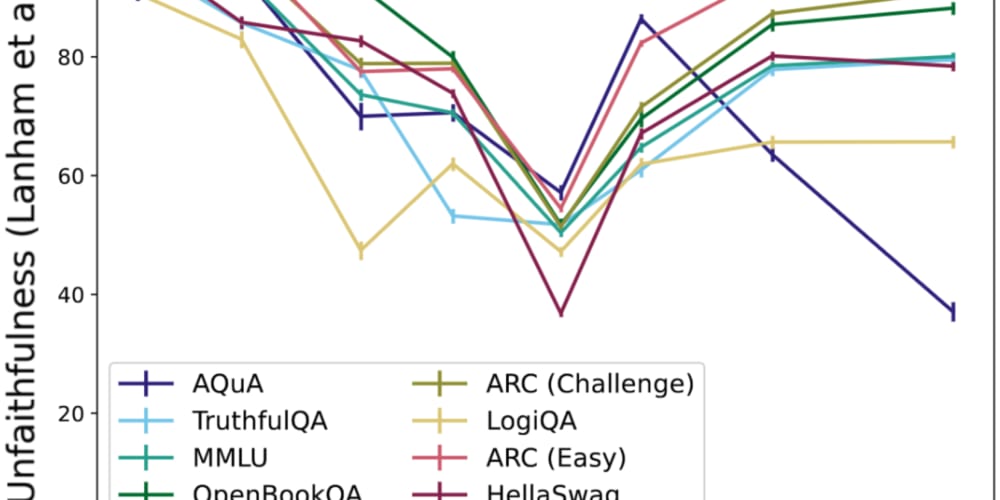

The paper presents a compelling and well-grounded framework for teaching language models to self-improve according to general preferences. The theoretical analysis and empirical results suggest that DNO has the potential to be a more flexible and scalable alternative to RLHF.

However, the authors acknowledge several limitations and areas for further research. For example, the current formulation assumes that the reward model is static, whereas in practice, it may need to adapt and evolve over time. Additionally, the authors note that the performance of DNO may depend on the specific architecture and training procedures used for the language model and reward model.

Another potential concern is the risk of reward model misspecification, where the preferences encoded in the reward model may not fully align with the desired outcomes. This could lead to unintended consequences or behaviors from the language model. Careful monitoring and evaluation of the reward model's performance would be crucial in such cases.

Finally, the authors do not address the potential computational and resource requirements of the DNO approach, which could be a practical concern for deploying these systems at scale. Techniques like Online Control and Adaptive Large Neighborhood Search may be helpful in addressing these challenges.

Conclusion

The "Direct Nash Optimization" framework introduced in this paper represents a significant step forward in teaching language models to self-improve according to general preferences. By formulating the training process as a game over pairwise preferences rather than as the maximization of a scalar reward, the authors have developed a more flexible and scalable approach than existing techniques like RLHF.

While the paper highlights several promising theoretical and empirical results, it also acknowledges important limitations and areas for further research. Careful attention to reward model specification, computational efficiency, and potential unintended consequences will be crucial as this approach is further developed and deployed in real-world applications.

Overall, the DNO framework is a valuable contribution to the field of language model optimization, and it will be exciting to see how it evolves and is applied to address the growing demand for highly capable and aligned AI systems.
