This is a Plain English Papers summary of a research paper called Visibility into AI Agents. If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

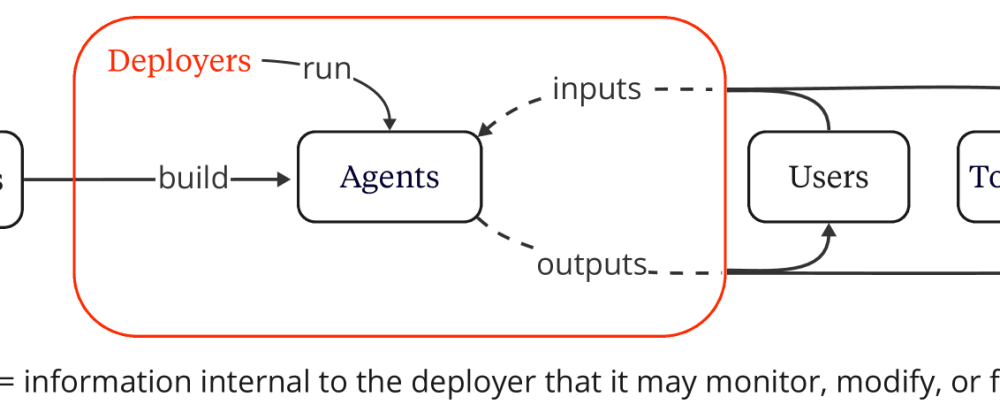

- This paper discusses the need for monitoring the deployment of AI agents to ensure transparency and oversight.

- It examines the risks associated with AI agents, such as malicious use, unintended consequences, and lack of accountability.

- The paper proposes a framework for monitoring AI agents throughout their deployment to mitigate these risks and maintain public trust.

Plain English Explanation

As artificial intelligence (AI) systems become more prevalent in our lives, it's crucial to ensure they are being used responsibly and safely. This paper focuses on the need to closely monitor the deployment of AI "agents": autonomous programs that can take actions on our behalf.

The researchers outline several key risks associated with AI agents. They could potentially be used for malicious purposes, like manipulating information or exploiting vulnerabilities. Even when deployed with good intentions, AI agents may have unintended consequences that harm individuals or society. And without proper oversight, there may be a lack of accountability when things go wrong.

To address these concerns, the paper proposes a framework for continuously monitoring AI agents throughout their deployment. This would involve tracking the agents' actions, outputs, and impact, and making this information transparent to the public. The goal is to maintain visibility into how AI systems are being used in the real world, empowering people to understand and trust the technology.

By establishing clear monitoring practices, the researchers hope to enable more responsible development and deployment of AI agents. This could help build public confidence in AI and ensure these powerful technologies are used to benefit humanity, rather than cause harm.

Technical Explanation

The paper presents a framework for monitoring the deployment of AI agents to address concerns around transparency, accountability, and unintended consequences. It begins by outlining several key risks associated with the use of AI agents:

- Malicious Use: AI agents could be exploited for harmful or deceptive purposes, such as manipulating information, violating privacy, or targeting vulnerabilities.

- Unintended Consequences: Even well-intentioned AI agents may have unforeseen impacts that negatively affect individuals or society.

- Lack of Accountability: Without proper oversight, it may be difficult to assign responsibility when AI agents cause harm or make mistakes.
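To make the accountability risk concrete: responsibility can only be assigned if each running agent can be traced back to someone. The Python sketch below assumes a hypothetical registry that links an agent identifier to the party that deployed it. `AgentRecord`, `AgentRegistry`, and the example values are illustrative names, not something the paper specifies.

```python
from dataclasses import dataclass

# Illustrative sketch: AgentRecord and AgentRegistry are hypothetical, not from the paper.


@dataclass(frozen=True)
class AgentRecord:
    """Links one agent instance to the party accountable for it."""
    agent_id: str
    deployer: str  # organization or person answerable for the agent's actions
    model: str     # underlying model the agent runs on


class AgentRegistry:
    """Maps agent identifiers to registry records."""

    def __init__(self) -> None:
        self._records: dict[str, AgentRecord] = {}

    def register(self, record: AgentRecord) -> None:
        # Refuse duplicate IDs so one identifier never points at two deployers.
        if record.agent_id in self._records:
            raise ValueError(f"agent_id {record.agent_id!r} is already registered")
        self._records[record.agent_id] = record

    def responsible_party(self, agent_id: str) -> str:
        """Return the deployer for an agent, or raise if the agent was never registered."""
        if agent_id not in self._records:
            raise LookupError(f"no registered deployer for agent {agent_id!r}")
        return self._records[agent_id].deployer


registry = AgentRegistry()
registry.register(AgentRecord("agent-42", deployer="Example Corp", model="example-model"))
print(registry.responsible_party("agent-42"))  # Example Corp
```

An unregistered agent raises an error instead of silently returning nothing, which reflects the idea that an untraceable agent is itself a gap in oversight.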

To mitigate these risks, the researchers propose continuously monitoring AI agents throughout deployment: tracking each agent's actions (what it does), outputs (what it produces), and impacts (what effects follow), and making this information publicly available. The aim is to give people visibility into how these systems behave in real-world use, so they can understand and trust the technology.
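A minimal Python sketch of what such tracking might record, assuming each agent step can be captured as one event with an action, an output, and an observed impact. The `AgentEvent` and `ActivityLog` names and the shape of the public summary are hypothetical; the paper does not prescribe a data format.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Illustrative sketch: AgentEvent and ActivityLog are hypothetical names.


@dataclass
class AgentEvent:
    """One monitored step: what the agent did, what it produced, what followed."""
    agent_id: str
    action: str           # e.g. "web_search", "send_email"
    output: str           # what the agent returned or produced
    impact: str = "none"  # observed downstream effect, if any
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class ActivityLog:
    """Append-only store of events plus an aggregate summary for publication."""

    def __init__(self) -> None:
        self._events: list[AgentEvent] = []

    def record(self, event: AgentEvent) -> None:
        self._events.append(event)

    def public_summary(self) -> dict:
        # Only counts are published; raw outputs may contain sensitive data.
        return {
            "events": len(self._events),
            "actions": dict(Counter(e.action for e in self._events)),
            "impacts": dict(Counter(e.impact for e in self._events if e.impact != "none")),
        }


log = ActivityLog()
log.record(AgentEvent("agent-42", "web_search", "3 results"))
log.record(AgentEvent("agent-42", "send_email", "sent", impact="customer emailed"))
log.record(AgentEvent("agent-7", "web_search", "0 results"))
print(log.public_summary())
# {'events': 3, 'actions': {'web_search': 2, 'send_email': 1}, 'impacts': {'customer emailed': 1}}
```

Publishing aggregate counts rather than raw outputs is one possible way to balance public visibility against the privacy of the people an agent interacts with. It is a design choice of this sketch, not a recommendation from the paper.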

The paper discusses technical approaches for implementing this monitoring framework. The researchers also highlight the importance of designing AI agents with transparency and accountability in mind from the outset, as part of a broader pursuit of trustworthy AI.
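One way to approximate designing for transparency from the outset is to make logging unavoidable: every tool an agent can call is wrapped so that each invocation, its arguments, and its result or error are recorded. The sketch below assumes a Python agent whose tools are plain functions. `monitored`, `AUDIT_TRAIL`, and `lookup_weather` are hypothetical names, and the in-memory list stands in for durable storage.

```python
import functools
from typing import Any, Callable

# Illustrative sketch: `monitored`, `AUDIT_TRAIL`, and `lookup_weather` are hypothetical.
# The in-memory list stands in for durable, access-controlled storage.
AUDIT_TRAIL: list[dict[str, Any]] = []


def monitored(agent_id: str) -> Callable[[Callable], Callable]:
    """Decorator factory: every call to the wrapped tool is appended to AUDIT_TRAIL."""
    def decorator(tool: Callable) -> Callable:
        @functools.wraps(tool)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            entry: dict[str, Any] = {"agent_id": agent_id, "tool": tool.__name__,
                                     "args": args, "kwargs": kwargs}
            try:
                result = tool(*args, **kwargs)
                entry["result"] = result
                return result
            except Exception as exc:
                entry["error"] = repr(exc)  # failures are logged too, then re-raised
                raise
            finally:
                AUDIT_TRAIL.append(entry)
        return wrapper
    return decorator


@monitored(agent_id="agent-42")
def lookup_weather(city: str) -> str:
    # Stand-in for a real tool the agent might call.
    return f"Sunny in {city}"


lookup_weather("Oslo")
print(AUDIT_TRAIL[-1]["result"])  # Sunny in Oslo
```

Because the logging lives in the wrapper rather than in each tool, a tool author cannot forget to record a call; the `finally` clause ensures failed calls are logged as well as successful ones.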

Critical Analysis

The paper makes a compelling case for the need to closely monitor the deployment of AI agents to ensure transparency and oversight. The researchers have identified several important risks that must be addressed, and their proposed framework for continuous monitoring is a promising approach.

However, the paper does not delve deeply into the technical and practical challenges of implementing such a system. Questions remain about the feasibility and scalability of continuously tracking and reporting on the actions and impacts of AI agents in complex, real-world environments.
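As an illustration of one possible response to the scalability concern (not something the paper proposes), a monitor can count every event cheaply while keeping full details for only a sample. The sketch below assumes events have unique string IDs and hashes them so the sample is deterministic and reproducible during an audit. `keep_full_record` and `SampledMonitor` are hypothetical names.

```python
import hashlib
from collections import Counter

# Illustrative sketch, not from the paper: keep_full_record and SampledMonitor are hypothetical.


def keep_full_record(event_id: str, rate: float) -> bool:
    """Deterministically select roughly `rate` of all events for detailed storage."""
    digest = hashlib.sha256(event_id.encode("utf-8")).digest()
    # Use 53 bits so the value is exactly representable as a float in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return bucket < rate


class SampledMonitor:
    """Counts every event but keeps full details only for a hash-selected sample."""

    def __init__(self, rate: float) -> None:
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rate must be between 0 and 1")
        self.rate = rate
        self.counts = Counter()        # cheap: one integer per action type
        self.details: list[dict] = []  # expensive: full payloads for sampled events

    def observe(self, event_id: str, action: str, payload: dict) -> None:
        self.counts[action] += 1
        if keep_full_record(event_id, self.rate):
            self.details.append({"id": event_id, "action": action, **payload})


monitor = SampledMonitor(rate=0.1)
for i in range(10_000):
    monitor.observe(f"evt-{i}", "web_search", {"query": f"q{i}"})
print(monitor.counts["web_search"])  # 10000
print(len(monitor.details))          # about 1,000; the exact number depends on the IDs
```

Hashing the event ID instead of drawing a random number means two auditors replaying the same event stream retain exactly the same sample. The trade-off is that rare harmful events may fall outside the sample, which is why the cheap counters still cover every event.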

Additionally, the paper does not explore potential unintended consequences or limitations of the monitoring framework itself. There may be concerns around privacy, security, or the potential for the monitoring system to be misused or gamed by bad actors.
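The privacy and gaming concerns can be partly addressed with standard techniques, sketched below under stated assumptions rather than as anything the paper specifies. User identifiers are replaced with a keyed HMAC-SHA256 pseudonym before logging, and each log entry includes the hash of the previous one, so later edits to an entry are detectable. `pseudonymize` and `HashChainLog` are hypothetical names, and the hard-coded key is for demonstration only.

```python
import hashlib
import hmac
import json

# Illustrative sketch, not the paper's design: pseudonymize and HashChainLog are hypothetical.


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Keyed hash of a user ID: entries stay linkable per user without naming the user."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


class HashChainLog:
    """Append-only log in which each entry commits to the hash of the previous entry."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(prev: str, record: dict) -> str:
        body = json.dumps(record, sort_keys=True)
        return hashlib.sha256((prev + body).encode("utf-8")).hexdigest()

    def append(self, record: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        self.entries.append({"record": record, "prev": prev, "hash": self._digest(prev, record)})

    def verify(self) -> bool:
        # Recompute every hash. Editing, inserting, or removing an entry (other than at
        # the tail) fails here unless every later hash is recomputed as well.
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True


key = b"demo-key-do-not-use-in-production"
log = HashChainLog()
for action in ("send_email", "web_search"):
    log.append({"agent_id": "agent-42", "user": pseudonymize("alice@example.com", key), "action": action})
print(log.verify())  # True

log.entries[0]["record"]["action"] = "noop"  # simulate after-the-fact tampering
print(log.verify())  # False
```

A hash chain only detects tampering if the latest hash is also stored somewhere the log operator cannot rewrite, for example by publishing it periodically. Otherwise an operator could recompute the entire chain or silently drop trailing entries.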

Further research and pilot studies would be needed to fully validate the effectiveness and practicality of the proposed framework. The researchers should also consider engaging with a broader range of stakeholders, including AI developers, policymakers, and the general public, to gather feedback and address any ethical or societal concerns.

Conclusion

This paper highlights the critical need for increased transparency and oversight in the deployment of AI agents. By proposing a framework for continuous monitoring, the researchers aim to mitigate the risks of malicious use, unintended consequences, and lack of accountability.

Implementing such a monitoring system could help build public trust in AI technologies and ensure they are used to benefit society. However, significant technical and practical challenges remain, and further research is needed to fully validate the feasibility and effectiveness of the approach.

Ongoing dialogue and collaboration between AI developers, policymakers, and the public will be essential to navigate the complex issues surrounding the responsible development and deployment of AI agents.
