This is a Plain English Papers summary of a research paper called Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems.

Overview

- This paper proposes a framework for ensuring the robustness and reliability of AI systems, with the goal of achieving guaranteed safe AI.

- The framework involves a comprehensive approach to evaluating the safety and security of advanced AI models, covering technical, ethical, and social considerations.

- Key aspects include holistic safety and responsibility evaluations, a journey towards trustworthy AI, redefining safety for autonomous vehicles, and addressing security vulnerabilities in semantic AI systems.

Plain English Explanation

The paper presents a framework for developing safe and reliable AI systems that can be trusted to operate in the real world. The core idea is to take a comprehensive, holistic approach to evaluating the safety and security of advanced AI models, looking at not just the technical aspects but also the ethical and social implications.

Some key elements of the framework include:

- Holistic safety and responsibility evaluations: Thoroughly assessing AI models for potential risks, harms, and unintended consequences, beyond just technical performance.

- A journey towards trustworthy AI: Establishing a clear process and set of principles for developing AI systems that are transparent, accountable, and aligned with human values.

- Redefining safety for autonomous vehicles: Expanding the traditional notion of safety to include considerations like data security, privacy, and fairness when it comes to self-driving cars.

- Addressing security vulnerabilities in semantic AI systems: Identifying and mitigating weaknesses in the underlying AI architectures, particularly in high-stakes applications like autonomous driving.

The overall goal is to create a rigorous, multi-faceted approach to ensuring the robustness and reliability of AI systems, so that we can have greater confidence in their safe deployment in the real world.

Technical Explanation

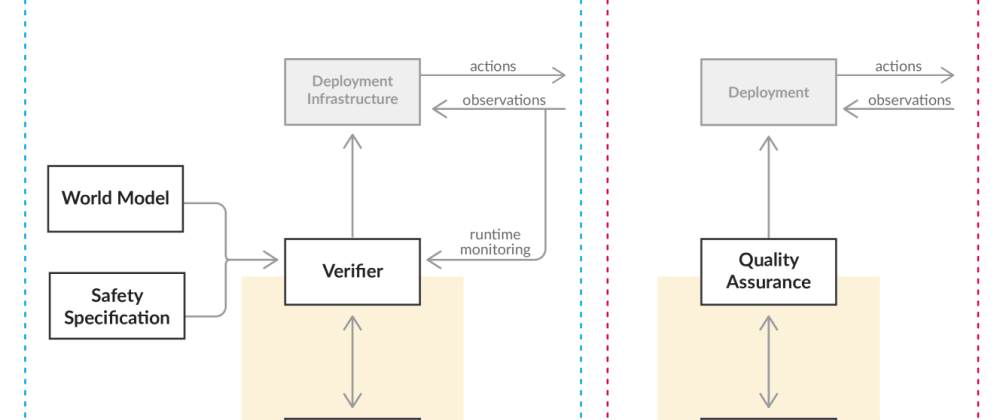

The paper proposes a comprehensive framework for ensuring the safety and security of advanced AI systems, with the ultimate aim of achieving "guaranteed safe AI." This framework encompasses a range of technical, ethical, and social considerations that must be addressed to build truly trustworthy and reliable AI.

At the core of the framework are holistic safety and responsibility evaluations for AI models. This involves thoroughly assessing potential risks, harms, and unintended consequences that could arise from the deployment of these systems, going beyond just evaluating their technical performance.

The framework also outlines a journey towards trustworthy AI, which includes establishing clear principles, processes, and governance structures to ensure AI systems are transparent, accountable, and aligned with human values.

When it comes to specific applications like autonomous vehicles, the framework calls for redefining traditional notions of safety to include considerations around data security, privacy, and fairness.

Additionally, the paper delves into the security vulnerabilities of semantic AI systems used in high-stakes domains like autonomous driving. It identifies potential weaknesses in the underlying AI architectures and proposes strategies for mitigating these vulnerabilities.

Critical Analysis

The paper presents a well-rounded and ambitious framework for ensuring the safety and reliability of advanced AI systems. Its holistic approach, which considers technical, ethical, and social factors, is a significant step forward in addressing the complex challenges associated with deploying AI in the real world.

However, the authors acknowledge that fully realizing this framework will be a substantial undertaking. It will require significant research, coordination, and collaboration across multiple stakeholders, including policymakers, industry, and the broader AI community. The effort and resources needed to implement it are a potential limitation.

Additionally, while the paper provides a comprehensive outline of the framework, it does not delve deeply into the specific methods and techniques that would be used to implement each component. Further research and validation would be necessary to ensure the efficacy and practicality of the proposed approaches.

Despite these limitations, the framework presented in this paper represents an important step towards redefining the notion of safety for advanced AI systems and paving the way for their safe and responsible deployment in the real world.

Conclusion

This paper presents a comprehensive framework for ensuring the robustness and reliability of AI systems, with the ultimate goal of achieving "guaranteed safe AI." The framework takes a holistic approach, considering technical, ethical, and social factors to thoroughly evaluate the safety and security of advanced AI models.

Key elements of the framework include holistic safety and responsibility evaluations, a journey towards trustworthy AI, redefining safety for autonomous vehicles, and addressing security vulnerabilities in semantic AI systems. While the implementation of this framework will be a significant undertaking, it represents an important step forward in addressing the complex challenges associated with deploying AI in the real world.

By taking a comprehensive and rigorous approach to ensuring the safety and reliability of AI systems, this framework has the potential to build greater trust and confidence in the use of these technologies, paving the way for their safe and responsible deployment across a wide range of applications.
