This is a Plain English Papers summary of a research paper called Stream of Search (SoS): Learning to Search in Language. If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- This paper introduces a novel approach called "Stream of Search" (SoS) that enables language models to learn how to search effectively within their own language space.

- The key idea is to train the model to generate a stream of search queries that incrementally refine an original query, rather than just producing a single output.

- This allows the model to actively explore and navigate the language space to find the most relevant information, similar to how humans search on the internet.

Plain English Explanation

The paper presents a new way for language models, like the ones that power chatbots and virtual assistants, to improve their search capabilities. Two other papers, "Integrating Hyperparameter Search into GRAM" and "Can Small Language Models Help Large Language Models?", have explored related ideas.

Traditionally, these models would simply generate a single response to a query. But the "Stream of Search" (SoS) approach trains the model to instead produce a series of refined search queries. This allows the model to explore the space of possible responses, much like how humans might refine their searches on the internet to find the most relevant information.

By learning to search within its own language abilities, the model can better understand the nuances and context of the original query. This is similar to the paper "Dwell: Beginning How Language Models Embed Long-Term Memory", which explored how language models can build up an understanding over multiple interactions.

The key insight is that training the model to actively search, rather than just produce a single output, can lead to more accurate and useful responses. This could have interesting applications for virtual assistants, chatbots, and other language-based AI systems.

Technical Explanation

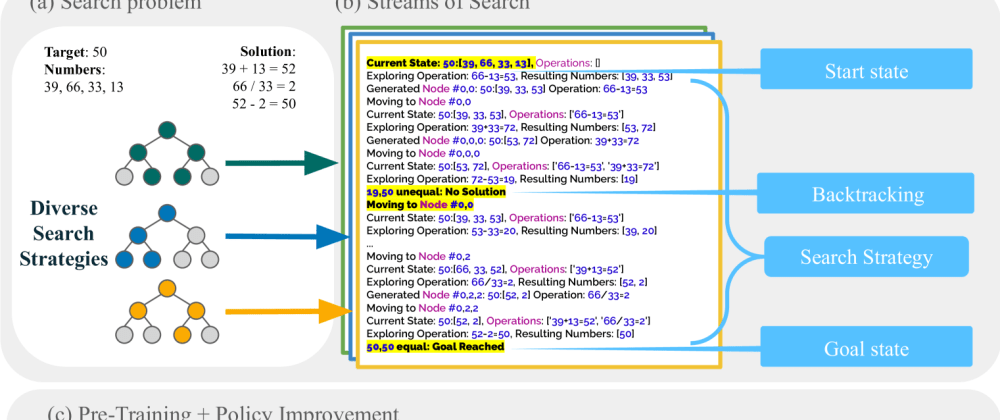

The core of the SoS approach is to train the language model to generate a "stream" of search queries, where each query iteratively refines the previous one. This is done by structuring the training process as a multi-step search task.

The model is first given an initial query and is then tasked with producing a sequence of refined queries that gradually home in on the most relevant information. The quality of the final query in the sequence is then used as the feedback signal for updating the model's parameters.

This training process encourages the model to explore the space of possible queries, rather than simply outputting a single fixed response. The authors show that this leads to better performance on a range of language understanding and retrieval tasks, compared to standard language models.
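The multi-step structure described above can be illustrated with a toy sketch. This is not the authors' implementation: the word-overlap score, the greedy `greedy_refine` function (a hypothetical stand-in for the language model), and the example vocabulary are all assumptions made for illustration. It only shows the shape of the loop, in which a stream of queries is produced step by step and feedback is computed from the final query alone.

```python
from typing import Callable, List


def overlap_score(query: str, target: str) -> float:
    """Toy relevance measure: fraction of target words present in the query."""
    q, t = set(query.lower().split()), set(target.lower().split())
    return len(q & t) / len(t) if t else 0.0


def greedy_refine(query: str, vocab: List[str], target: str) -> str:
    """Hypothetical stand-in for the model: append the word that helps most."""
    best = max(vocab, key=lambda w: overlap_score(f"{query} {w}", target))
    return f"{query} {best}"


def stream_of_queries(
    initial: str, refine: Callable[[str], str], steps: int
) -> List[str]:
    """Build the stream: each query refines the one before it."""
    stream = [initial]
    for _ in range(steps):
        stream.append(refine(stream[-1]))
    return stream


# Assumed example data, chosen only to make the loop visible.
target = "red running shoes size ten"
vocab = ["red", "blue", "size", "ten", "hat"]

stream = stream_of_queries(
    "running shoes",
    lambda q: greedy_refine(q, vocab, target),
    steps=3,
)

# Feedback is taken from the final query only, as in the description above.
final_reward = overlap_score(stream[-1], target)

for step, query in enumerate(stream):
    print(step, query)
print("reward on final query:", final_reward)
```

In a real training setup the greedy rule would be replaced by the model's own generation, and `final_reward` would drive a parameter update rather than simply being printed.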

The Solving Ability Amplification Strategy (SAAS) paper explored a related idea of using iterative refinement to improve model performance. The SoS approach builds on these insights, applying them specifically to the domain of language search and retrieval.

Critical Analysis

One key limitation of the SoS approach is that it relies on being able to provide high-quality feedback on the final query in the sequence. In real-world applications, obtaining such detailed feedback may be challenging.

The paper also does not address how the SoS approach would scale to more complex, open-ended search tasks. The experiments focus on relatively constrained, factual retrieval scenarios. Applying the technique to more ambiguous, exploratory search tasks may require further innovations.

Additionally, the computational overhead of generating and evaluating multiple search queries may limit the practical deployment of SoS in some settings. The tradeoffs between search quality and efficiency would need to be carefully considered.

Overall, the SoS approach represents an interesting step towards improving the search capabilities of language models. However, further research is needed to fully understand its strengths, weaknesses, and potential real-world applications. Readers are encouraged to consult related work, such as "Do Sentence Transformers Learn Quasi-Geospatial Concepts?", and form their own conclusions about the merits of this work.

Conclusion

The "Stream of Search" (SoS) approach introduced in this paper offers a novel way for language models to learn how to search effectively within their own language space. By training the model to generate a sequence of refined search queries, rather than a single output, the authors demonstrate improvements in language understanding and retrieval tasks.

This work represents an interesting step towards more sophisticated language-based AI systems that can actively explore and navigate information, similar to how humans search the internet. While the current implementation has some limitations, the core ideas behind SoS could have important implications for the development of virtual assistants, chatbots, and other language-based applications.

As the field of language AI continues to evolve, techniques like SoS that enhance the search and exploration capabilities of models will likely become increasingly important. Readers are encouraged to keep up with new developments in this fast-moving area of research.
