This is a Plain English Papers summary of a research paper called Transformers Can Do Arithmetic with the Right Embeddings. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- This paper investigates the ability of Transformer language models to perform simple arithmetic operations on numerical values embedded within text.

- The researchers explore how the choice of numerical embedding can impact the model's numeric reasoning capabilities.

- They find that Transformers can indeed learn to perform basic arithmetic when provided with appropriate numerical embeddings, but struggle with more complex operations or generalization beyond the training distribution.

Plain English Explanation

The researchers wanted to see whether language models built on the Transformer architecture can do simple math on the numbers that appear in the text they process. Language models are AI systems trained on large amounts of text to understand and generate human language.

The key question is: if you give a Transformer numbers embedded in text, can it learn basic arithmetic operations such as addition and multiplication on those numbers? The researchers tried different ways of representing the numbers in the model's inputs and found that the choice of numerical embedding makes a big difference in how well the model reasons about them.

When the Transformers were given the right kind of numerical embeddings, they were able to learn how to do simple arithmetic. However, the models still struggled with more complex math or with generalizing their numerical reasoning skills beyond the specific examples they were trained on. The paper provides insights into the strengths and limitations of Transformers when it comes to learning to work with numerical information in text.

Technical Explanation

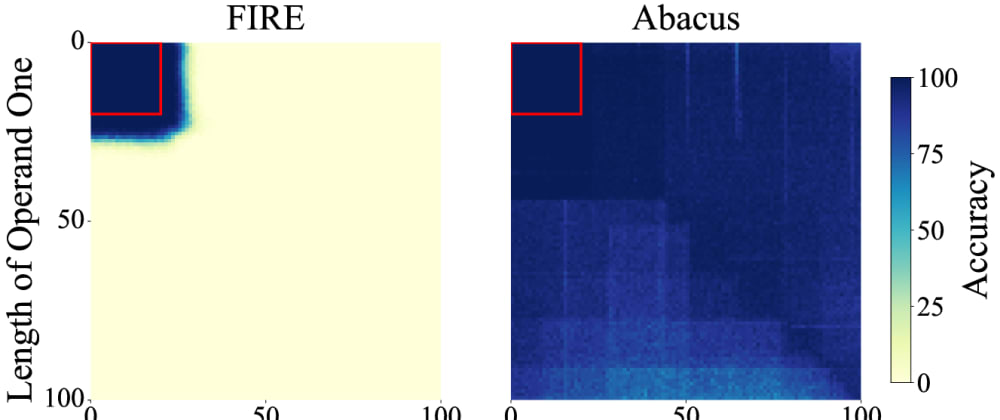

The researchers investigated the numeric reasoning capabilities of Transformer language models by designing a suite of arithmetic tasks. They explored how the choice of numerical embedding (the way the model represents numbers in its internal computations) affects the model's ability to perform basic arithmetic operations.

The researchers experimented with several different numerical embedding schemes, including linear scaling, logarithmic scaling, and learnable embeddings. They found that the choice of embedding had a significant effect on the model's arithmetic performance. Linear scaling, for example, allowed the model to learn addition and subtraction, while logarithmic scaling enabled it to also learn multiplication and division.
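To make the intuition behind that last sentence concrete, here is a minimal Python sketch. It assumes that "linear scaling" means representing a number by a value proportional to it and that "logarithmic scaling" means representing it by its logarithm. The names `linear_embed`, `log_embed`, and `SCALE` are hypothetical and not taken from the paper, whose actual embeddings may differ. Under those assumptions, adding two linear codes corresponds to adding the numbers, while adding two log codes corresponds to multiplying them.

```python
import math

SCALE = 0.001  # hypothetical scaling constant, chosen only for illustration


def linear_embed(x: float) -> float:
    """Represent x by a value proportional to x (assumed 'linear scaling')."""
    return SCALE * x


def linear_decode(code: float) -> float:
    """Invert linear_embed."""
    return code / SCALE


def log_embed(x: float) -> float:
    """Represent a positive x by its natural log (assumed 'logarithmic scaling')."""
    if x <= 0:
        raise ValueError("log_embed is only defined for positive numbers")
    return math.log(x)


def log_decode(code: float) -> float:
    """Invert log_embed."""
    return math.exp(code)


a, b = 12.0, 30.0

# Adding linear codes recovers the sum a + b.
sum_via_linear = linear_decode(linear_embed(a) + linear_embed(b))

# Adding log codes recovers the product a * b, since log(a) + log(b) = log(a * b).
product_via_log = log_decode(log_embed(a) + log_embed(b))

# Subtracting log codes recovers the quotient b / a.
quotient_via_log = log_decode(log_embed(b) - log_embed(a))

print(sum_via_linear, product_via_log, quotient_via_log)
```

In both cases a single addition or subtraction in the embedded space does the work, which is one way to read the claim that the embedding determines which operations are easy to learn. Note that a plain log code cannot represent zero or negative numbers without extra handling.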

Further experiments revealed the limitations of the Transformer models. While they could learn to perform basic arithmetic when given the right numerical representations, they struggled to generalize this numeric reasoning beyond the specific training distributions. The models also had difficulty with more complex operations involving multiple steps or more abstract mathematical concepts.
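One common way to probe this kind of generalization is to train on operands up to some length and evaluate on longer ones. The sketch below builds such a split for addition. The digit ranges, the `a+b=c` string format, and the helper names are assumptions made for illustration, not the paper's actual setup.

```python
import random


def random_operand(num_digits: int, rng: random.Random) -> int:
    """Sample a positive integer with exactly num_digits digits (no leading zero)."""
    low = 10 ** (num_digits - 1)
    high = 10 ** num_digits - 1
    return rng.randint(low, high)


def make_addition_examples(min_digits: int, max_digits: int,
                           count: int, rng: random.Random) -> list[str]:
    """Build 'a+b=c' strings whose operands have min_digits..max_digits digits."""
    examples = []
    for _ in range(count):
        a = random_operand(rng.randint(min_digits, max_digits), rng)
        b = random_operand(rng.randint(min_digits, max_digits), rng)
        examples.append(f"{a}+{b}={a + b}")
    return examples


rng = random.Random(0)  # fixed seed so the split is reproducible

# In-distribution: operands of 1 to 3 digits (hypothetical training range).
train_examples = make_addition_examples(1, 3, 1000, rng)

# Out-of-distribution: operands of 4 to 6 digits, outside the training range.
ood_examples = make_addition_examples(4, 6, 200, rng)

print(train_examples[0])
print(ood_examples[0])
```

A model that has learned the addition procedure, rather than memorized patterns from the training range, should keep its accuracy on `ood_examples`. A large accuracy gap between the two sets is the kind of generalization failure described above.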

The paper provides valuable insights into the inner workings of Transformer language models and their ability to reason about numerical information. The results suggest that these models can be trained to exhibit basic "number sense", but significant challenges remain in developing their full arithmetic and mathematical reasoning capabilities.

Critical Analysis

The paper makes a valuable contribution by systematically exploring the numeric reasoning abilities of Transformer language models. The experimental setup and analysis are rigorous, and the findings offer important insights into the strengths and limitations of these models when it comes to working with numerical information.

That said, the paper acknowledges several caveats and areas for further research. For example, the arithmetic tasks examined in the study are relatively simple, and it remains to be seen whether Transformers can handle more complex mathematical operations or reasoning. Additionally, the paper does not address the practical implications of these findings for real-world applications of language models.

One potential concern is the reliance on specific numerical embedding schemes. While the researchers demonstrate the importance of this design choice, it's unclear how these embedding strategies would scale or generalize to more diverse numerical data encountered in real-world settings. Further work is needed to develop more robust and flexible numerical representations for Transformer models.

The paper also does not explore how pretraining or fine-tuning might improve the numeric reasoning of Transformers. A separate study, "Exploring Internal Numeracy: A Case Study of Language Models," reports that some degree of numeric reasoning can emerge during standard language model pretraining, which suggests that more targeted training could yield further improvements.

Overall, this paper provides a useful foundation for understanding the numeric reasoning abilities of Transformer language models. The findings highlight the importance of numerical representations and the limits of current approaches, and they point to clear directions for future work on mathematical reasoning in these models.

Conclusion

This paper investigates the numeric reasoning capabilities of Transformer language models, exploring how the choice of numerical embedding can impact their ability to perform basic arithmetic operations. The researchers find that Transformers can learn to do simple math when provided with the right numerical representations, but struggle with more complex operations or generalization beyond their training data.

The results offer important insights into the inner workings of these language models and the critical role of numerical representations in enabling numeric reasoning. While the findings suggest that Transformers can exhibit a basic "number sense", significant challenges remain in developing their full mathematical reasoning capabilities.

Future research should explore more advanced numerical representations and training approaches to improve Transformers' ability to work with numerical information in practical applications. Addressing these challenges would let large language models take on more sophisticated mathematical reasoning and problem-solving.
