This is a Plain English Papers summary of a research paper called Evaluating the Performance of ChatGPT for Spam Email Detection. If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- This paper evaluates the performance of the large language model ChatGPT for the task of spam email detection.

- The researchers investigate ChatGPT's ability to accurately classify emails as spam or not, and compare its performance to traditional machine learning models.

- The study aims to assess the potential of using large language models like ChatGPT for cybersecurity applications, specifically in the context of email-based threats.

Plain English Explanation

This research paper looks at how well the AI system called ChatGPT can detect spam emails. Spam emails are messages that are unwanted or try to scam people, and being able to identify them is important for cybersecurity.

The researchers wanted to see if ChatGPT, a powerful language model that can understand and generate human-like text, could accurately classify emails as spam or not. They compared ChatGPT's performance to traditional machine learning models that are commonly used for spam detection.

The goal was to understand if large language models like ChatGPT could be useful for protecting against email-based threats and cyberattacks. If ChatGPT can reliably identify spam emails, it could be a valuable tool for improving email security and protecting people from scams and other online dangers.

Technical Explanation

The researchers designed experiments to evaluate ChatGPT's spam email detection capabilities. They used a benchmark dataset of spam and non-spam emails to test ChatGPT's classification performance.

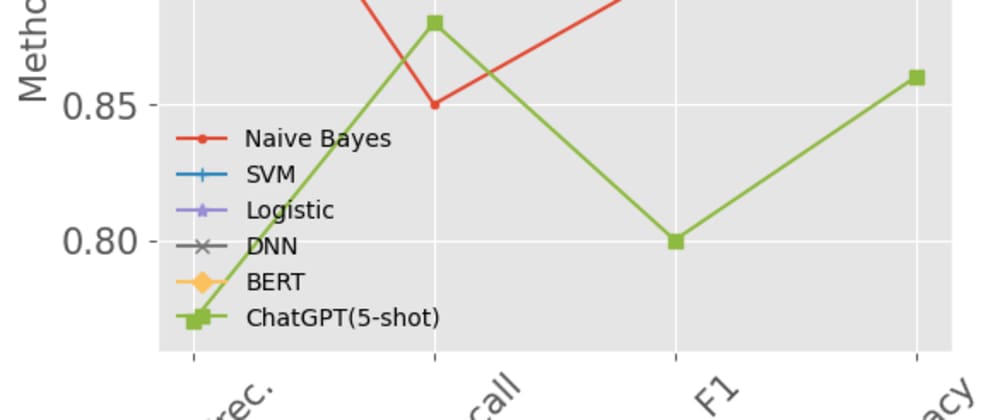

They prompted ChatGPT to analyze each email and determine if it was spam or not. ChatGPT's predictions were then compared to the ground truth labels in the dataset. The researchers also tested traditional machine learning models like Support Vector Machines and Naive Bayes on the same dataset to provide a baseline for comparison.
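To make the prompt-and-compare loop concrete, here is a minimal sketch in Python. The prompt wording, the `parse_label` rules, and the `fake_model` stand-in are all assumptions made for illustration; this summary does not give the paper's actual prompt or API setup. In a real run, the `ask_model` callable would wrap a call to ChatGPT.

```python
import re
from typing import Callable, Iterable, Tuple

# Hypothetical prompt wording; the paper's real prompt is not given in this summary.
PROMPT_TEMPLATE = (
    "Classify the following email as 'spam' or 'ham' (not spam). "
    "Answer with a single word.\n\nEmail:\n{email}"
)


def build_prompt(email_text: str) -> str:
    """Wrap one email in the classification instruction."""
    return PROMPT_TEMPLATE.format(email=email_text)


def parse_label(reply: str) -> str:
    """Map a free-text model reply to 'spam', 'ham', or 'unknown'."""
    text = reply.strip().lower()
    # Check the negated form first so "not spam" is not read as "spam".
    if re.search(r"\b(not spam|ham|legitimate)\b", text):
        return "ham"
    if re.search(r"\bspam\b", text):
        return "spam"
    return "unknown"


def evaluate(dataset: Iterable[Tuple[str, str]],
             ask_model: Callable[[str], str]) -> dict:
    """Compare parsed predictions against ground-truth labels."""
    tp = fp = fn = correct = total = 0
    for text, truth in dataset:
        pred = parse_label(ask_model(build_prompt(text)))
        total += 1
        correct += pred == truth
        tp += pred == "spam" and truth == "spam"
        fp += pred == "spam" and truth == "ham"
        fn += pred != "spam" and truth == "spam"
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": correct / total if total else 0.0,
            "precision": precision, "recall": recall, "f1": f1}


def fake_model(prompt: str) -> str:
    """Stand-in for a ChatGPT call: flags any email containing 'free'."""
    email = prompt.split("Email:\n", 1)[1]
    return "Spam." if "free" in email.lower() else "Ham."


# Tiny made-up dataset, only to exercise the loop.
sample = [
    ("Win a FREE prize now", "spam"),
    ("Meeting moved to 3pm", "ham"),
    ("Cheap meds, click here", "spam"),
    ("Lunch tomorrow?", "ham"),
]
report = evaluate(sample, fake_model)
print(report)
```

Passing the model in as a plain callable keeps the scoring code independent of any particular API client, so the same `evaluate` function can score a stub, ChatGPT, or a traditional classifier.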

The results showed that ChatGPT achieved competitive accuracy in distinguishing spam from non-spam emails, performing on par with or better than the traditional models. This suggests that large language models like ChatGPT can be effective for spam detection tasks.
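For a sense of what a "traditional" baseline looks like, here is a small multinomial Naive Bayes classifier written from scratch. It is a sketch under stated assumptions, not the paper's implementation: the tokenizer, the add-one (Laplace) smoothing, and the toy training emails are all made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.class_counts}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        n_docs = sum(self.class_counts.values())
        scores = {}
        for c, doc_count in self.class_counts.items():
            total_words = sum(self.word_counts[c].values())
            score = math.log(doc_count / n_docs)  # log prior
            for word in tokenize(text):
                if word not in self.vocab:
                    continue  # ignore words never seen in training
                likelihood = (self.word_counts[c][word] + 1) / (
                    total_words + len(self.vocab))
                score += math.log(likelihood)
            scores[c] = score
        return max(scores, key=scores.get)


# Toy training data, invented for this example.
train_texts = [
    "win free money now",
    "free prize claim now",
    "cheap loans free offer",
    "meeting agenda for monday",
    "lunch at noon tomorrow",
    "project report attached",
]
train_labels = ["spam", "spam", "spam", "ham", "ham", "ham"]

model = NaiveBayes().fit(train_texts, train_labels)
print(model.predict("claim your free money"))
print(model.predict("agenda for the project meeting"))
```

Unlike a prompted language model, this baseline has to be trained on labeled emails first, and it only knows the words it saw during training.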

Critical Analysis

The paper acknowledges some limitations of the study, such as the use of a single dataset and the lack of testing on real-world, dynamic email streams. There are also concerns about the interpretability and transparency of ChatGPT's decision-making process, which could be important for security applications.

Additionally, the researchers note that further research is needed to understand the generalization capabilities of large language models like ChatGPT and their robustness to evolving spam tactics. Incorporating adversarial examples or out-of-distribution data into the evaluation could provide a more comprehensive assessment of their spam detection capabilities.
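One simple way to picture the adversarial evaluation suggested above is to obfuscate known spam and check how much of it a classifier still catches. The sketch below is only an illustration: the character substitutions, the `keyword_classifier` stand-in, and the sample emails are assumptions, not anything taken from the paper.

```python
from typing import Callable, Sequence

# Hypothetical "leetspeak" substitutions a spammer might use to dodge filters.
LEET = str.maketrans({"a": "4", "e": "3", "i": "1", "o": "0", "s": "5"})


def obfuscate(text: str) -> str:
    """Replace some lowercase letters with look-alike digits."""
    return text.translate(LEET)


def keyword_classifier(text: str) -> str:
    """Toy stand-in for any spam classifier (ChatGPT, SVM, Naive Bayes...)."""
    triggers = ("free", "prize", "winner", "buy")
    lowered = text.lower()
    return "spam" if any(word in lowered for word in triggers) else "ham"


def robustness(classify: Callable[[str], str],
               spam_emails: Sequence[str]) -> float:
    """Fraction of originally detected spam still detected after obfuscation."""
    detected = [e for e in spam_emails if classify(e) == "spam"]
    if not detected:
        return 0.0
    still_caught = sum(classify(obfuscate(e)) == "spam" for e in detected)
    return still_caught / len(detected)


# Invented spam samples, only to exercise the check.
spam_samples = ["Free prize inside", "You are a winner", "Buy now"]
score = robustness(keyword_classifier, spam_samples)
print(f"Spam still caught after obfuscation: {score:.2f}")
```

Because `robustness` accepts any callable, the same check could be pointed at a ChatGPT-backed classifier to see whether it copes with obfuscation better than a keyword or bag-of-words model.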

Conclusion

This study suggests that the large language model ChatGPT is a promising tool for spam email detection, matching and potentially outperforming traditional machine learning approaches. The findings indicate that further research into the use of large language models for cybersecurity applications could be valuable.

However, the limitations and open questions identified in the paper highlight the need for continued exploration and careful consideration of the practical deployment of these models in real-world security scenarios. Ongoing research and development in this area could lead to more effective and robust email protection systems.
